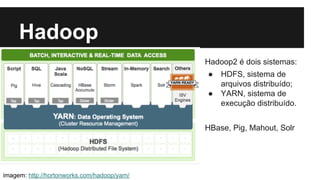

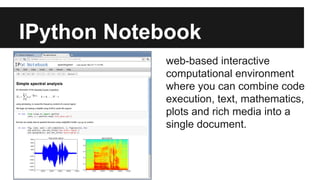

The document proposes building an interactive environment for big data analysis at Globo.com using Spark and IPython. The system would give access to all of the company's data, run machine learning algorithms to identify relevant information, and support formulating hypotheses and experiments for data-driven decision-making.