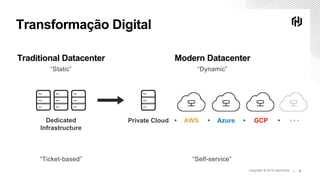

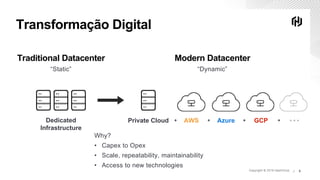

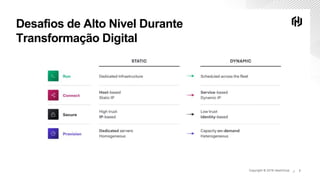

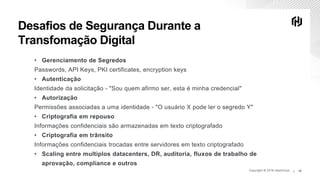

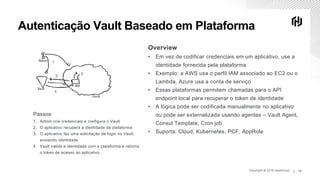

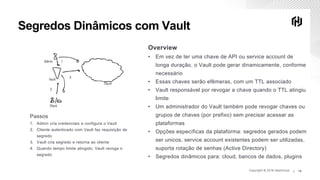

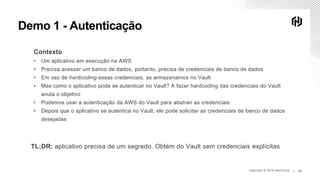

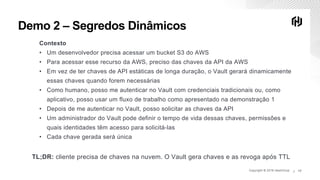

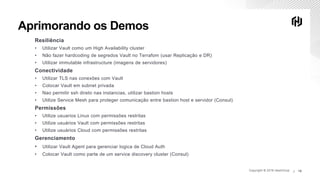

The document discusses cloud security challenges during digital transformation and presents Vault demos for authentication and secrets management in the cloud. The agenda covers digital transformation, cloud security challenges, secure cloud authentication with Vault, secure access to cloud services with Vault dynamic secrets, and next steps.