Solr Extracting Data

A presentation showing how to extract data from Solr. It is part 3 of a three-part series, originally from my YouTube channel.

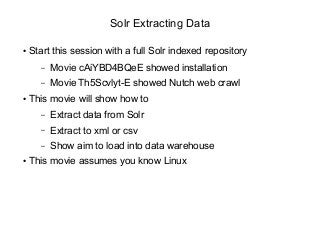

- 1. Solr Extracting Data
  - Start this session with a fully indexed Solr repository
    - Movie cAiYBD4BQeE showed installation
    - Movie Th5Scvlyt-E showed the Nutch web crawl
  - This movie will show how to
    - Extract data from Solr
    - Extract to XML or CSV
    - Show the aim of loading the data into a data warehouse
  - This movie assumes you know Linux

- 2. Solr Extracting Data
  - Progress so far; the greyed-out area is yet to be examined

- 3. Checking Solr Data
  - Data should have been indexed in Solr
  - In the Solr Admin window
    - Set 'Core Selector' = collection1
    - Click 'Query'
    - In the Query window set the fl field = url
    - Click Execute Query
  - The result (next) shows the filtered list of urls in Solr

- 5. How To Extract
  - How could we get at Solr data?
    - In the admin console via a query
    - Via an http Solr select
    - Via a curl -o call using the Solr http select
  - What format of data suits this purpose?
    - XML
    - Comma-separated values (CSV)

- 6. How To Extract
  - We want to extract two columns from Solr
    - tstamp, url
  - We want to extract as csv (csv in the call below could be xml)
  - We want to extract to a file
  - So we will use an http call
    - `http://localhost:8983/solr/select?q=*:*&fl=tstamp,url&wt=csv`
  - We will also use a curl call
    - `curl -o <csv file> '<http call>'`
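The http call on this slide can be parameterised so that the same pattern covers other fields or the xml format. The following is a minimal sketch and not part of the original deck: the function name `build_select_url` and the variable `SOLR_SELECT_URL` are hypothetical, and the base URL is simply the one shown on the slide.

```shell
#!/bin/bash
# Base select URL as shown on the slide; override it for another host, port or core.
# (SOLR_SELECT_URL is an illustrative name, not something Solr itself reads.)
SOLR_SELECT_URL="${SOLR_SELECT_URL:-http://localhost:8983/solr/select}"

# build_select_url FIELDS [FORMAT]
# Prints a match-all select URL that returns FIELDS (comma separated)
# in FORMAT, which defaults to csv and could also be xml.
build_select_url() {
    local fields="$1"
    local format="${2:-csv}"
    printf '%s?q=*:*&fl=%s&wt=%s\n' "$SOLR_SELECT_URL" "$fields" "$format"
}
```

With this in place, `curl -o result.csv "$(build_select_url tstamp,url csv)"` should issue the same request as the call on the slide.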

- 7. How To Extract
  - Create a bash file in the Solr install directory
    - `cd solr-4-2-1/extract ; touch solr_url_extract.bash`
    - `chmod 755 solr_url_extract.bash`
  - Add contents to the bash file
    - `#!/bin/bash`
    - `curl -o result.csv 'http://localhost:8983/solr/select?q=*:*&fl=tstamp,url&wt=csv'`
    - `mv result.csv result.csv.$(date +"%Y%m%d.%H%M%S")`
  - Now run the bash script
    - `./solr_url_extract.bash`
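The script on this slide renames `result.csv` even when the curl call fails. A slightly more defensive variant might look like the sketch below. It is an illustration under assumptions, not the author's script: the function names `timestamped_name` and `run_extract` are hypothetical, and the URL is the one from the slide.

```shell
#!/bin/bash
# Select URL taken from the slide; adjust it for your own Solr install.
SOLR_URL='http://localhost:8983/solr/select?q=*:*&fl=tstamp,url&wt=csv'

# timestamped_name NAME
# Prints NAME with a date and time suffix, e.g. result.csv.20130506.124857
timestamped_name() {
    printf '%s.%s\n' "$1" "$(date +%Y%m%d.%H%M%S)"
}

# run_extract
# Downloads to result.csv, then renames it only if curl succeeded,
# so a failed call does not leave a timestamped but unusable file.
run_extract() {
    local tmp="result.csv"
    local target
    if curl --silent --show-error --fail -o "$tmp" "$SOLR_URL"; then
        target="$(timestamped_name "$tmp")"
        mv "$tmp" "$target" && printf 'wrote %s\n' "$target"
    else
        printf 'extract failed, no file written\n' >&2
        rm -f "$tmp"
        return 1
    fi
}
```

Calling `run_extract` as the last line of the script would perform the extract; the functions are kept separate so that the naming logic can be checked without a running Solr instance.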

- 8. Check Output
  - Now we check whether we have data
  - `ls -l` shows
    - result.csv.20130506.124857
  - Check the content: `wc -l` shows 11 lines
  - Check the content: `head -2` shows
    - tstamp, url
    - 2013-05-04T01:56:58.157Z,http://www.mysite.co.nz/Search?DateRange=7& ...
  - Congratulations, you have extracted data from Solr
  - It's in CSV format, ready to be loaded into a data warehouse
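The manual checks on this slide could be wrapped in a small function so that a scheduled extract can verify its own output. This is a sketch only, and `check_extract` is a hypothetical name. Note that the 11 lines reported are consistent with one header line plus Solr's default of 10 rows per query, so a `rows` parameter on the select URL would likely be needed for a larger extract.

```shell
#!/bin/bash
# check_extract FILE
# Prints the line count and header of an extract file.
# Returns non-zero if the file is missing, empty, or holds only a header line.
check_extract() {
    local file="$1"
    local lines
    if [ ! -s "$file" ]; then
        printf '%s is missing or empty\n' "$file" >&2
        return 1
    fi
    # tr strips the padding that some wc implementations add
    lines=$(wc -l < "$file" | tr -d ' ')
    printf 'lines: %d\n' "$lines"
    printf 'header: %s\n' "$(head -n 1 "$file")"
    # More than one line means at least one data row follows the header
    [ "$lines" -gt 1 ]
}
```

For example, `check_extract result.csv.20130506.124857` would print the line count and the header line, and its exit status could be tested before any load into a staging schema.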

- 9. Possible Next Steps
  - Choose more fields to extract from the data
  - Allow the Nutch crawl to go deeper
  - Allow the Nutch crawl to collect a lot more data
  - Look at facets in the Solr data
  - Load CSV files into a Data Warehouse Staging schema
  - The next movie will show the next step in progress

- 10. Contact Us
  - Feel free to contact us at
    - www.semtech-solutions.co.nz
    - info@semtech-solutions.co.nz
  - We offer IT project consultancy
  - We are happy to hear about your problems
  - You can just pay for those hours that you need to solve your problems