Managing Successful Test Automation

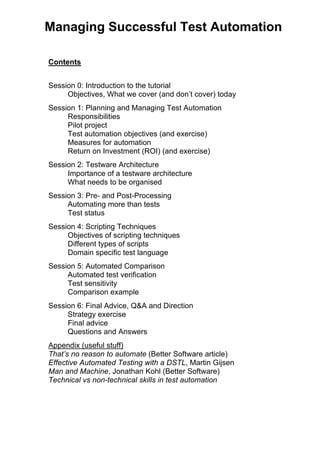

- 1. Managing Successful Test Automation: Contents
  - Session 0: Introduction to the tutorial
    - Objectives; what we cover (and don’t cover) today
  - Session 1: Planning and Managing Test Automation
    - Responsibilities
    - Pilot project
    - Test automation objectives (and exercise)
    - Measures for automation
    - Return on Investment (ROI) (and exercise)
  - Session 2: Testware Architecture
    - Importance of a testware architecture
    - What needs to be organised
  - Session 3: Pre- and Post-Processing
    - Automating more than tests
    - Test status
  - Session 4: Scripting Techniques
    - Objectives of scripting techniques
    - Different types of scripts
    - Domain specific test language
  - Session 5: Automated Comparison
    - Automated test verification
    - Test sensitivity
    - Comparison example
  - Session 6: Final Advice, Q&A and Direction
    - Strategy exercise
    - Final advice
    - Questions and Answers
  - Appendix (useful stuff)
    - That’s no reason to automate (Better Software article)
    - Effective Automated Testing with a DSTL, Martin Gijsen
    - Man and Machine, Jonathan Kohl (Better Software)
    - Technical vs non-technical skills in test automation

- 3. Managing Successful Test Automation

  Prepared and presented by Dorothy Graham. www.DorothyGraham.co.uk, email: info@dorothygraham.co.uk, Twitter: @DorothyGraham. © Dorothy Graham 2013

  Objectives of this tutorial
  - help you achieve better success in automation
    - independent of any particular tool
  - mainly management and some technical issues
    - objectives for automation
    - showing Return on Investment (ROI)
    - importance of testware architecture
    - practical tips for a few technical issues
    - what works in practice (case studies)
  - help you plan an effective automation strategy

- 4. Tutorial contents
  - 1) planning & managing test automation
  - 2) testware architecture
  - 3) pre and post processing
  - 4) scripting techniques
  - 5) automated comparison
  - 6) final advice, Q&A and direction

  Shameless commercial plug
  - Part 1: How to do automation - still relevant today, though we plan to update it at some point
  - New book! www.DorothyGraham.co.uk

- 5. What is today about? (and not about)
  - test execution automation (not other tools)
  - I will NOT cover:
    - demos of tools (time, which one, expo)
    - comparative tool info (expo, web)
    - selecting a tool*
  - at the end of the day
    - understand technical and non-technical issues
    - have your own automation objectives
    - plan your own automation strategy

  \* I will email you Ch 10 of the STA book on request: info@dorothygraham.co.uk

  Test automation survey 2004 - 2010
  - survey by Erik van Veenendaal in Professional Tester magazine (Nov/Dec 2010)
    - have test execution tools, have shelfware tools: 2004 29% 26%; 2010 48% 28%
    - achieving many benefits: 27% 50%
  - most common shelfware
    - test execution tools (33%)

- 6. Current tool adoption (Australia)
  - K.J. Ross & Associates survey 2010
    - 26% success (partial)
      - 19% good benefits
      - 7% using tools but not anticipated ROI
    - 74% failure!
      - 35% don’t have skills to adopt or maintain automation
      - 14% just an added burden, no time
      - 12% tools abandoned: too much maintenance
      - 10% tools bought, no resources to adopt
      - 3% no budget to buy or adopt tools

  Source: www.kjross.com.au (good webinar on automation)

  About you
  - your Summary and Strategy document
    - where are you now with your automation?
    - what are your most pressing automation problems?
    - why are you here today?

  There is small group work throughout the day (maximum of 5 per group); introduce yourselves within your group.

- 7. Planning & Managing Test Automation

  Sessions: 1 Managing, 2 Architecture, 3 Pre- and Post, 4 Scripting, 5 Comparison, 6 Advice

  Contents
  - Responsibilities
  - Pilot project
  - Test automation objectives
  - Measures for automation
  - Return on Investment (ROI)

- 8. What is an automated test?
  - a test!
    - designed by a tester for a purpose
  - test is executed
    - implemented / constructed to run automatically using a tool
    - could be run manually also
  - who decides what tests to run?
  - who decides how a test is run?

  Existing perceptions of automation skills
  - many books & articles don’t mention automation skills
    - or assume that they must be acquired by testers
  - test automation is technical in some ways
    - using the test execution tool directly (script writing)
    - designing the testware architecture (framework / regime)
    - debugging automation problems
  - this work requires technical skill
  - most people now realise this (but many still don’t)

  See article: “Technical vs non-technical skills in test automation”

- 9. Responsibilities

  Testers
  - test the software
    - design tests
    - select tests for automation (requires planning / negotiation)
  - execute automated tests
    - should not need detailed technical expertise
  - analyse failed automated tests
    - report bugs found by tests
    - problems with the tests may need help from the automation team

  Automators
  - automate tests (requested by testers)
  - support automated testing
    - allow testers to execute tests
    - help testers debug failed tests
    - provide additional tools (homegrown)
  - predict
    - maintenance effort for software changes
    - cost of automating new tests
  - improve the automation
    - more benefits, less cost

  Test manager’s dilemma
  - who should undertake automation work
    - not all testers can automate (well)
    - not all testers want to automate
    - not all automators want to test!
  - conflict of responsibilities
    - automate tests vs. run tests manually
  - get additional resources as automators?
    - contractors? borrow a developer? tool vendor?

- 10. Recent poll: Testers as automators?

  [Figure: bar chart of poll responses (scale 0 to 80) for four options]
  - Every tester should be able to write code
  - Testers can be automators if they want to
  - Testers shouldn't have to become automators
  - Testers should never be automators

  Source: EuroStar Webinar, Intelligent Mistakes in Test Automation, 20 Sept 2012

  Roles within the automation team
  - Testware architect
    - designs the overall structure for the automation
  - Champion
    - “sells” automation to managers and testers
  - Tool specialist / toolsmith
    - technical aspects, licensing, updates to the tool
  - Automated test (& script) developers
    - write new keyword scripts as needed
    - debug automation problems

- 11. Agile automation: Lisa Crispin
  - starting point: buggy code, new functionality needed, whole team regression tests manually
  - testable architecture (open source tools)
    - want unit tests automated (TDD), start with new code
    - start with GUI smoke tests for regression
    - business logic in middle level with FitNesse
  - 100% regression tests automated in one year
    - selected set of smoke tests for coverage of stories
  - every 6 months, engineering sprint on the automation
  - key success factors
    - management support & communication
    - whole team approach, celebration & refactoring

  Chapter 1, pp 17-32, Experiences of Test Automation

  Automation and agile
  - agile automation: apply agile principles to automation
    - multidisciplinary team
    - automation sprints
    - refactor when needed
  - fitting automation into agile development
    - ideal: automation is part of “done” for each sprint
      - Test-Driven Design = write and automate tests first
    - alternative: automation in the following sprint
      - may be better for system level tests

  See www.satisfice.com/articles/agileauto-paper.pdf (James Bach)

- 12. Automation in agile/iterative development

  [Figure: releases A to F delivered iteratively. Labels: manual testing of this release (testers); regression testing (automators automate the best tests); run automated tests (testers)]

  Requirements for agile test framework
  - Support manual and automated testing
    - using the same test construction process
  - Support fully manual execution at any time
    - requires good naming convention for components
  - Support manual + automated execution
    - so test can be used before it is 100% automated
  - Implement reusable objects
  - Allow “stubbing” objects before GUI available

  Source: Dave Martin, LDSChurch.org, email

- 13. A tale of two projects: Ane Clausen
  - Project 1: 5 people part-time, within test group
    - no objectives, no standards, no experience, unstable
    - after 6 months was closed down
  - Project 2: 3 people full time, 3-month pilot
    - worked on two (easy) insurance products, end to end
    - 1st month: learn and plan; 2nd & 3rd months: implement
    - started with simple, stable, positive tests, easy to do
    - close cooperation with business, developers, delivery
    - weekly delivery of automated Business Process Tests
  - after 6 months, automated all insurance products

  Chapter 6, pp 105-128, Experiences of Test Automation

- 14. Pilot project
  - reasons
    - you’re unique
    - many variables / unknowns at start
  - benefits
    - find the best way for you (best practice)
    - solve problems once
    - establish confidence (based on experience)
    - set realistic targets
  - objectives
    - demonstrate tool value
    - gain experience / skills in the use of the tool
    - identify changes to existing test process
    - set internal standards and conventions
    - refine assessment of costs and achievable benefits

  What to explore in the pilot
  - build / implement automated tests (architecture)
    - different ways to build stable tests (e.g. 10 – 20)
  - maintenance
    - different versions of the application
    - reduce maintenance for most likely changes
  - failure analysis
    - support for identifying bugs
    - coping with common bugs affecting many automated tests

  Also: naming conventions, reporting results, measurement

- 15. After the pilot…
  - having processes & standards is only the start
    - 30% on new process
    - 70% on deployment
    - Source: Erik van Veenendaal, successful test process improvement
  - marketing, training, coaching
  - feedback, focus groups, sharing what’s been done
  - the (psychological) Change Equation
    - change only happens if (x + y + z) > w
    - x = dissatisfaction with the current state
    - y = shared vision of the future
    - z = knowledge of the steps to take to get from here to there
    - w = psychological / emotional cost to change for this person

- 16. An automation effort
  - is a project
    - with goals, responsibilities, and monitoring
    - but not just a project: ongoing effort is needed
  - not just one effort, but different projects
    - when acquiring a tool: pilot project
    - when anticipated benefits have not materialized
    - different projects at different times, with different objectives
  - objectives are important for automation efforts
    - where are we going? are we getting there?

  A manual vs an automated test

  [Figure: comparison on four attributes (Effective, Economic, Evolvable, Exemplary) of an automated test, the first run of an automated test, and an interactive (manual) test]

- 17. Good objectives for automation?
  - run regression tests evenings and weekends
  - give testers a new skill / enhance their image
  - run tests tedious and error-prone if run manually
  - gain confidence in the system
  - reduce the number of defects found by users

  Objectives Exercise

- 19. Test Automation Objectives Exercise

  The following are some possible test automation objectives. Evaluate each objective: is it a suitable objective for automation? If not, why not? Which are already in place in your own organisation?

  | Possible test automation objectives | Good automation objective? (If not, why not) | Already in place? |
  | --- | --- | --- |
  | Achieve faster performance for the system | NO – this is not an objective for test execution automation, nor is it an objective for performance testing! Performance test tools may help by giving the measurements to see whether the system is faster. | |
  | Achieve good results and quick payback with no additional resources, effort or time | | |
  | Automate all tests | | |
  | Build a long-lasting automation regime that is easy to maintain | | |
  | Easy to add new automated tests | | |
  | Ensure repeatability of regression tests | | |
  | Ensure that we meet our release deadlines | | |
  | Find more bugs | | |
  | Find defects in less time | | |
  | Free testers from repeated (boring) test execution to spend more time in test design | | |

- 21. Test Automation Objectives Exercise (continued)

  | Possible test automation objectives | Good automation objective? (If not, why not) | Already in place? |
  | --- | --- | --- |
  | Improve our testing | | |
  | Reduce elapsed time for testing by x% | | |
  | Reduce the cost and time for test design | | |
  | Reduce the number of test staff | | |
  | Run more tests | | |
  | Run regression tests more often | | |
  | Run tests every night on all PCs | | |
  | Achieve a positive Return on Investment in no more than <x> test iterations (where x = ?) | | |
  | Other objectives: | | |

- 23. Reduce test execution time

  [Figure: time spent on each activity (edit tests (maintenance), set-up, execute, analyse failures, clear-up) for manual testing, the same tests automated, and more mature automation]

  Automate x% of the tests
  - are your existing tests worth automating?
    - if testing is in chaos, automating gives you faster chaos
  - which tests to automate (first)?
  - what % of manual tests should be automated?
    - “100%” sounds impressive but may not be wise
  - what else can be automated
    - automation can do things not possible or practical in manual testing!

- 24. Manual vs automated

  [Figure: overlapping sets of manual tests and automated tests. Labels: tests not automated yet; tests not worth automating; manual tests automated (% manual); tests (& verification) not possible to do manually; exploratory test automation]

  Exploratory Test Automation - 1
  - sounds like an oxymoron?
  - when you are exploring, you might say
    - “that’s weird – might there be any more like that?”
  - is there a way to check a result automatically?
    - a heuristic oracle
  - can you generate lots of different (random) inputs?
  - have you got lots of computer power available?

  Source: Harry Robinson tutorial at CAST 2010, August 2010

- 25. Exploratory Test Automation - 2
  - set off lots of tests that produce something that can be checked with an automated oracle
  - alerts a human when something unusual occurs
  - it’s exploratory
    - we don’t know what we will find, we don’t have a planned route through the system
  - it’s automated
    - a “shotgun” approach: lots of different (random) inputs
  - can find a class of bug too hard to find manually
    - because so many tests can be run

  Success = find lots of bugs?
  - tests find bugs, not automation
  - automation is a mechanism for running tests
  - the bug-finding ability of a test is not affected by the manner in which it is executed
  - this can be a dangerous objective
    - especially for regression automation!
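The exploratory test automation idea above (many random inputs, an automated heuristic oracle, a human alerted only when something looks unusual) can be sketched in a few lines. This is a minimal illustration, not the approach from the cited tutorial: `system_under_test`, `heuristic_oracle` and `explore` are hypothetical names, and a sorting routine stands in for the real system.

```python
import random
from collections import Counter


def system_under_test(values):
    """Hypothetical stand-in for the real system: sorts a list of integers."""
    return sorted(values)


def heuristic_oracle(inputs, output):
    """Cheap plausibility checks on a result; not a full specification."""
    ordered = all(a <= b for a, b in zip(output, output[1:]))
    same_items = Counter(inputs) == Counter(output)
    return ordered and same_items


def explore(runs, seed=0, sut=system_under_test):
    """Fire many random inputs at the system and collect the suspicious ones."""
    rng = random.Random(seed)  # seeded so that any finding can be replayed
    suspicious = []
    for _ in range(runs):
        size = rng.randint(0, 20)
        inputs = [rng.randint(-1000, 1000) for _ in range(size)]
        output = sut(list(inputs))
        if not heuristic_oracle(inputs, output):
            suspicious.append(inputs)  # a human would look at these
    return suspicious


if __name__ == "__main__":
    findings = explore(runs=1000)
    print(f"{len(findings)} suspicious results to review")
```

The oracle only decides whether a result looks plausible, which is why a person still reviews whatever it flags.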

- 26. What is automated?

  [Figure: likelihood of finding bugs versus what is most often automated, from regression tests to exploratory testing]

  When is “find more bugs” a good objective for automation?
  - objective is “fewer regression bugs missed”
  - when the first run of a given test is automated
    - MBT, Exploratory test automation, automated test design
    - keyword-driven (e.g. users populate spreadsheet)
  - find bugs in parts we wouldn’t have tested?

- 27. Good objectives for test automation
  - measurable
    - EMTE (Equivalent Manual Test Effort)
    - tests run, coverage (e.g. features tested)
  - realistic and achievable
  - short and long term
  - regularly re-visited and revised
  - should be different objectives for testing and for automation
  - automation should support testing activities

  Trying to get started: Tessa Benzie
  - consultancy to start automation effort
    - project, needs a champion: hired someone
    - training first, something else next, etc.
  - contract test manager: more consultancy
    - bought a tool, now used by a couple of contractors
    - TM moved on, new QA manager has other priorities
  - just wanting to do it isn’t enough
    - needs dedicated effort
    - now have “football teams” of manual testers

  Chapter 29, pp 535, Experiences of Test Automation

- 28. Useful measures
  - a useful measure: “supports effective analysis and decision making, and that can be obtained relatively easily.” Bill Hetzel, “Making Software Measurement Work”, QED, 1993.
  - easy measures may be more useful even though less accurate (e.g. car fuel economy)
  - ‘useful’ depends on objectives, i.e. what you want to know

- 29. Automation measures?
  - aspects of automation
    - number of times tests are run
    - time to run the automated tests (& manual equiv)
    - effort in automation and running automated tests
      - effort to build new automated tests
      - effort to run automated tests (by people)
      - effort to analyse failed automated tests
      - effort to maintain automated tests
    - number of test failures caused by one s/w fault
    - number of automation scripts

  EMTE – what is it?
  - Equivalent Manual Test Effort
    - given a set of automated tests,
    - how much effort would it take
      - IF those tests were run manually
  - note
    - you would not actually run these tests manually
    - EMTE = what you could have tested manually
      - and what you did test automatically
    - used to show test automation benefit

- 30. EMTE – how does it work?

  [Figure: timeline of a manual test during manual testing, and the same manual test now automated. Labels: “Automate the manual testing?”; “only time to run the tests 1.5 times”; “doesn’t make sense – can run them more”]

  EMTE – how does it work? (2)

  [Figure: automated testing timeline with the corresponding EMTE]

- 31. EMTE example
  - example
    - automated tests take 2 hours
    - if those same tests were run manually, 4 days
  - frequency
    - automated tests run every day for 2 weeks (including once at the weekend), 11 times
  - calculation
    - EMTE =

  Examples of good (measurable) objectives for test automation
  - achieve positive ROI in less than 6 test iterations
    - measured by comparing to manual testing
  - run most important tests using spare resource
    - top 10% of usefulness rating, run out of hours
  - reduce elapsed time of tool-supported activities
    - measured for maintenance & failure analysis time
  - improve automation support for testers
    - testers rate usefulness of automation support
    - how often utilities/automation features are used
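The EMTE example leaves the calculation open. Under the definition given earlier (the effort it would take if the automated tests were run manually), a minimal sketch of the arithmetic might look like this. The function name `emte` is an assumption; the figures are the ones in the example.

```python
def emte(manual_effort_per_run, automated_runs):
    """Equivalent Manual Test Effort: the manual effort the automated runs stand for."""
    return manual_effort_per_run * automated_runs


# Figures from the example: 4 days if run manually, 11 automated runs.
emte_days = emte(manual_effort_per_run=4, automated_runs=11)

# For comparison, the time the tool itself spent: 2 hours per run.
tool_hours = 2 * 11

print(f"EMTE: {emte_days} days of manual testing")  # EMTE: 44 days of manual testing
print(f"Tool execution time: {tool_hours} hours")   # Tool execution time: 22 hours
```

The result is a measure of what was tested, not of effort saved, since nobody would actually have run the tests manually 11 times.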

- 32. Why measure automation ROI?
  - to justify and confirm starting automation
    - business case for purchase/investment decision, to confirm ROI has been achieved e.g. after pilot
    - both compare manual vs automated testing
  - to monitor on-going automation
    - for increased efficiency, continuous improvement
    - build time, maintenance time, failure analysis time, refactoring time
      - on-going costs: what are the benefits?

- 33. What can you show as a benefit?
  - number of additional times tests are run
    - how many times run manually & automated
  - how long tests take to run
    - execution time for manual and automated, EMTE
  - how much effort to run tests
    - human effort for manual testing and kicking off / dealing with automated tests
  - data variety and/or coverage
    - different sets of data run manually & automated
    - different parts of the system now tested

  An example comparative benefits chart

  [Figure: bar chart comparing manual (man) and automated (aut) testing]
  - exec speed: 14 x faster
  - times run: 5 x more often
  - data variety: 4 x more data
  - tester work: 12 x less effort

  ROI spreadsheet: email me for a copy

- 34. EMTE over releases

  [Figure: EMTE per release, releases 1 to 5, scale 0 to 800]
  - 2708 total “manual” hours of testing done automatically
  - 339 days of testing performed

  Return on Investment (ROI)
  - ROI = (benefit – cost) / cost
  - Investment: costs can be expressed as effort
    - which can be converted to money
  - Return: benefits
    - reduced tester time effort
    - but what about things like “faster execution”, “run more often”, “greater coverage”, “quicker time to market”?
    - how to convert these to money?
  - possible calculation based on tester time
    - the other things are bonuses?
    - comparing to manual testing

- 35. ROI example

  | Manual testing time | Human time to run automated tests | Savings per automated run | No. automated runs per release | Total savings |
  | --- | --- | --- | --- | --- |
  | 66 | 7 | 59 | 9 | 531 |

  | Average build time for new automated tests | Maintenance time | Failure analysis time | Other automation time spent | Total automation investment | ROI = (Gain - Cost) / Cost |
  | --- | --- | --- | --- | --- | --- |
  | 15 | 50 | 35 | 22 | 122 | 3.4 |

  Free ROI spreadsheet available

  MBT @ ESA: Stefan Mohacsi, Armin Beer
  - home-grown tool interfaced to commercial tools
    - Model-Based Testing and Test Case Generation
    - layers of abstraction for maintainability
  - define model before software is ready
    - capture and assign GUI objects later
    - developers build in testability
  - ROI calculations
    - invest 460 hours in automation infrastructure
    - break-even after 4 test cycles

  Chapter 9, pp 155-175, Experiences of Test Automation
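The figures in the ROI example can be reproduced from the formula ROI = (benefit – cost) / cost. The sketch below is one reading of the slide's numbers: the variable names are assumptions, and the slide does not state the time unit.

```python
# Time figures from the ROI example (unit not stated on the slide).
manual_testing_time = 66
human_time_per_automated_run = 7
automated_runs_per_release = 9

investment = {
    "build new automated tests": 15,
    "maintenance": 50,
    "failure analysis": 35,
    "other automation time": 22,
}

# Benefit: tester time saved on each automated run, over all runs in the release.
savings_per_run = manual_testing_time - human_time_per_automated_run
total_savings = savings_per_run * automated_runs_per_release

# Cost: everything spent on the automation in the same release.
total_investment = sum(investment.values())

roi = (total_savings - total_investment) / total_investment

print(savings_per_run)   # 59
print(total_savings)     # 531
print(total_investment)  # 122
print(round(roi, 1))     # 3.4
```

Only tester time is counted as benefit here, so faster execution and greater coverage do not show up in the result.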

- 36. Example ROI graph using MBT

  [Figure: people hours (0 to 1400) over test cycles 1 to 6, manual hours versus automated hours. Source: Stefan Mohacsi & Armin Beer]

  Database testing: Henri van de Scheur
  - tool developed in-house (now open source)
    - agreed requirements with relevant people up front
    - 9 months, 4 developers in Java (right people)
    - good architecture, start with quick wins
  - flexible configuration, good reporting, metrics used to improve
  - results: 2400 times more efficient
    - from: 20 people run 40 tests on 6 platforms in 4 days
    - to: 1 person runs 200 tests on 10 platforms in 1 day
    - quick dev tests, nightly regression, release tests
    - life cycle of automated tests
    - little maintenance, machines used 24x7, better quality

  Chapter 2, pp 33-48, Experiences of Test Automation

- 37. Large S Africa bank: Michael Snyman
  - was project-based, too late, lessons not learned
    - “our shelves were littered with tools..”
  - 2006: automation project, resourced, goals
    - formal automation process
  - ROI after 3 years
    - US$4m on testing project, automation $850K
    - savings $8m, ROI 900%
  - 20 testers for 4 weeks to 2 in 1 week
    - automation ROI justified the testing project
  - only initiative that was measured accurately

  Chapter 29, pp 562-567, Experiences of Test Automation

  Example ROI graph

  [Figure: savings % (from -200% to 100%) versus number of tests (0 to 2500), with curves for monthly, weekly and daily runs. Source: Lars Wahlberg, Chapter 18 in “Experiences of Test Automation”]

- 38. Why is measuring ROI dangerous?
  - focus on what’s easy to measure (tester time)
  - other factors may be much more important
    - time to market, greater coverage, faster testing
  - defining ROI only by tester time may give the impression that the tools replace the testers
    - this is dangerous
    - tools replace some aspects of some of what testers do, with increased cost elsewhere
    - we want a net benefit, even if hard to quantify

  How important is ROI?
  - “automation is an enabler for success, not a cost reduction tool” (Yoram Mizrachi*)
  - many achieve lasting success without measuring ROI (depends on your context)
    - need to be aware of benefits (and publicize them)

  \* “Planning a mobile test automation strategy that works”, ATI magazine, July 2012

- 39. Sample ‘starter kit’ for metrics for test automation (and testing)
  - some measure of benefit
    - e.g. EMTE or coverage
  - average time to automate a test (or set of related tests)
  - total effort spent on maintaining automated tests (expressed as an average per test)
  - also measure testing, e.g. Defect Detection Percentage (DDP)
    - test effectiveness
    - more info on DDP on my web site & blog

  Recommendations
  - don’t measure everything!
  - choose three or four measures
    - applicable to your most important objectives
  - monitor for a few months
    - see what you learn
  - change measures if they don’t give useful information
  - be careful with ROI if based on tester time
    - may give impression tools can replace people!
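Two of the starter-kit measures reduce to simple ratios. The sketch below assumes the usual definition of Defect Detection Percentage (defects found by testing as a percentage of all defects known so far, including those found afterwards). The function names and sample figures are illustrative only.

```python
def defect_detection_percentage(found_by_testing, found_afterwards):
    """DDP: share of all known defects that the testing found, as a percentage."""
    total = found_by_testing + found_afterwards
    if total == 0:
        raise ValueError("no defects recorded yet, DDP is undefined")
    return 100.0 * found_by_testing / total


def average_maintenance_per_test(total_maintenance_effort, automated_tests):
    """Total maintenance effort expressed as an average per automated test."""
    if automated_tests == 0:
        raise ValueError("no automated tests to average over")
    return total_maintenance_effort / automated_tests


# Illustrative figures only (not from the tutorial).
print(defect_detection_percentage(found_by_testing=80, found_afterwards=20))      # 80.0
print(average_maintenance_per_test(total_maintenance_effort=50, automated_tests=200))  # 0.25
```

DDP can only fall as more defects are reported after release, so it is worth recalculating after a few months.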

- 40. Summary: key points
  - Assign responsibility for automation (and testing)
  - Use a pilot project to explore the best ways of doing things
  - Know your automation objectives
  - Measure what’s important to you
  - Show ROI from automation

  Good objectives for automation?
  - run regression tests evenings and weekends
    - not a good objective, unless they are worthwhile tests!
  - give testers a new skill / enhance their image
    - not a good objective, could be a useful by-product
  - run tests tedious and error-prone if run manually
    - good objective
  - gain confidence in the system
    - an objective for testing, but automated regression tests help achieve it
  - reduce the number of defects found by users
    - not a good objective for automation, good objective for testing!

- 41. Test Automation Objectives Solution

  We have given some ideas as to which objectives are good and why the others are not.

  | Possible test automation objectives | Good automation objective? (If not, why not) |
  | --- | --- |
  | Achieve faster performance for the system | NO – this is not an objective for test execution automation, nor is it an objective for performance testing! Performance test tools may help by giving the measurements to see whether the system is faster. |
  | Achieve good results and quick payback with no additional resources, effort or time | NO – this is totally unrealistic – expecting a miracle with no investment! |
  | Automate all tests | NO – automating ALL tests is neither realistic nor sensible. Automate only those tests that are worth automating. |
  | Build a long-lasting automation regime that is easy to maintain | YES – this is an excellent objective for test automation, and it is measurable. |
  | Easy to add new automated tests | YES. With a good automation regime, it can be easier to add a new automated test than to run that test manually. |
  | Ensure repeatability of regression tests | YES. The tools will run the same test in the same way every time. |
  | Ensure that we meet our release deadlines | NO. Automation may help to run some tests that are required before release, but there are many more factors that go into a release decision. |
  | Find more bugs | NO. Automation just runs tests. It is the tests that find the bugs, whether they are run manually or are automated. |
  | Find defects in less time | Not really. Some types of defects (regression bugs) will be found more quickly by automated tests, but it may actually take longer to analyse the failures found. |
  | Free testers from repeated (boring) test execution to spend more time in test design | YES. This is a good objective for test execution automation. |

- 43. Test Automation Objectives Solution (continued)

  | Possible test automation objectives | Good automation objective? (If not, why not) |
  | --- | --- |
  | Improve our testing | NO. Better testing practices and better use of techniques will improve testing. |
  | Reduce elapsed time for testing by x% | NO. Elapsed time depends on many factors, and not much on whether tests are automated (see further explanation in the slides). |
  | Reduce the cost and time for test design | NO. Test design is independent from automation – the time spent in design is not affected by how those tests are executed. |
  | Reduce the number of test staff | NO. You will need more staff to implement the automation, not fewer. It can make existing staff more productive by letting them spend more time on test design. |
  | Run more tests | YES but only long term. Short term, you may actually run fewer tests because of the effort taken to automate them. |
  | Run regression tests more often | YES – this is what the test execution tools do best. |
  | Run tests every night on all PCs | NO. It may look impressive, but what tests are being run? Are they useful? If not, this is a waste of electricity. |
  | Achieve a positive Return on Investment in no more than <6> test iterations | YES. This is a good objective, if the number of iterations is a reasonable number (e.g. 6). |
  | Other objectives: | |

- 45. Test Automation Objectives: Selection and Measurement

  On this page, record the test objectives that would be most appropriate for your organisation (and why), and how you will measure them (what to measure and how to measure it). I suggest that you include at least one about showing Return on Investment. If you currently have automation objectives in place in your organisation that are not good ones, make sure that they are removed and replaced by the better ones below!

  | Proposed test automation objective (with justification) | What to measure and how to measure it |
  | --- | --- |
  | | |

  Add any comments or thoughts here or on the back of this page.

- 47. 2-Architecture: Testware Architecture
  - Tutorial sessions: 1 Managing, 2 Architecture, 3 Pre- and Post, 4 Scripting, 5 Comparison, 6 Advice
  - Ref. Chapter 5: Testware Architecture, “Software Test Automation”
  - Contents
    - Importance of a testware architecture
    - What needs to be organised

- 48. 2-Architecture
  - Testware architecture (diagram)
    - Testers write tests (in DSTL)
    - High Level Keywords: abstraction here makes it easier to write automated tests -> widely used
    - Test Automator(s): structured testware
    - Structured Scripts: abstraction here makes it easier to maintain, and to change tools -> long life
    - Test Execution Tool runs scripts
  - Architecture: abstraction levels
    - most critical factor for success
      - worst: close ties between scripts, tool & tester
    - separate testers’ view from technical aspects
      - so testers don’t need tool knowledge
        - for widespread use of automation
        - scripting techniques address this
    - separate tests from the tool: modular design
      - likely changes confined to one / few module(s)
      - re-use of automation functions
      - for minimal maintenance and long-lived automation

- 49. 2-Architecture
  - Easy way out: use the tool’s architecture
    - tool will have its own way of organising tests
      - where to put things (for the convenience of the tool!)
      - will “lock you in” to that tool: good for vendors!
    - a better way (gives independence from tools)
      - organise your tests to suit you
      - as part of pre-processing, copy files to where the tool needs (expects) to find them
      - as part of post-processing, copy back to where you want things to live
  - Tool-specific script ratio (diagram)
    - labels: Testers; Not tool-specific; Tool-specific scripts; Test Execution Tool
    - a large share of tool-specific scripts means high maintenance and/or tool dependence

- 50. 2-Architecture
  - Learning is incremental: Molly Mahai
    - book learning: knew about investment, not replacing people, not automating everything, etc.
    - set up a good architecture? books were not enough
    - picked something to get started
      - after a while, realised limitations
      - too many projects, library cumbersome
    - re-designed architecture, moved things around
    - didn’t know what we needed till we experienced the problems for ourselves
      - like trying to educate a teenager
    - Chapter 29, pp 527-528, Experiences of Test Automation

- 51. 2-Architecture
  - A test for you
    - show me one of your automated tests running
      - how long will it take before it runs?
    - typical problems
      - fails: forgot a file, couldn’t find a called script
      - can’t do it (yet):
        - Joe knows how but he’s out
        - environment not right
        - haven’t run in a while
        - don’t know what files need to be set up for this script
    - why not: run up control script, select test, GO
  - Key issues
    - scale: the number of scripts, data files, results files, benchmark files, etc. will be large and growing
    - shared scripts and data: efficient automation demands reuse of scripts and data through sharing, not multiple copies
    - multiple versions: as the software changes so too will some tests, but the old tests may still be required
    - multiple environments / platforms

- 52. 2-Architecture
  - Terms: Testware artefacts (tree diagram)
    - Testware
      - Test Materials: inputs, scripts, data, doc (specifications), env, utilities, expected results
      - Test Results
        - Products: actual results
        - By-Products: logs, status, differences, summary
  - Testware for example test case (diagram)
    - open.scp, saveas.scp: shared scripts
    - countries.scp: test script (test input)
    - testspec.txt: test specification
    - countries.dcm: initial document
    - Scribble (the application being tested) produces log.txt (log) and countries2.dcm (edited document)
    - countries2.dcm: expected output
    - Compare: edited document against expected output, producing diffs.txt (differences)

- 53. 2-Architecture
  - Testware by type (diagram)
    - Test Materials: countries.scp, open.scp, saveas.scp, testdef.txt, countries.dcm, countries2.dcm (expected output)
    - Test Results
      - Products: countries2.dcm (edited document)
      - By-Products: log.txt, status.txt, diff.txt
  - Benefits of standard approach
    - tools can assume knowledge (architecture)
      - they need less information; are easier to use; fewer errors will be made
    - can automate many tasks
      - checking (completeness, interdependencies); documentation (summaries, reports); browsing
    - portability of tests
      - between people, projects, organisations, etc.
    - shorter learning curve

- 54. 2-Architecture
  - Testware Sets
    - Testware Set: a collection of testware artifacts
    - four types:
      - Test Set: one or more test cases
      - Script Set: scripts used by two or more Test Sets
      - Data Set: data files used by two or more Test Sets
      - Utility Set: utilities used by two or more Test Sets
    - good software practice: look for what is common, and keep it in only one place!
    - Keep your testware DRY!
  - Testware library
    - repository of master versions of all Testware Sets
    - uncategorised scripts worse than no scripts (Onaral & Turkmen)
    - CM is critical
    - “If it takes too long to update your test library, automation introduces delay instead of adding efficiency” (Linda Hayes, AST magazine, Sept 2010)

  | Testware Set | Version numbers |
  |---|---|
  | d_ScribbleTypical | v1, v2 |
  | d_ScribbleVolume | v1 |
  | s_Logging | v1 |
  | s_ScribbleDocument | v1, v2, v3 |
  | s_ScribbleNavigate | v1 |
  | t_ScribbleBreadth | v1 |
  | t_ScribbleCheck | v1 |
  | t_ScribbleFormat | v1 |
  | t_ScribbleList | v1, v2, v3 |
  | t_ScribblePrint | v1 |
  | t_ScribbleSave | v1 |
  | t_ScribbleTextEdit | v1, v2 |
  | u_ScribbleFilters | v1 |
  | u_GeneralCompare | v1 |

- 55. 2-Architecture
  - Separate test results (diagram): Test suite, Software under test, Test results
  - Incremental approach: Ursula Friede
    - large insurance application
    - first attempt failed
      - no structure (architecture), data in scripts
    - four phases (unplanned)
      - parameterized (dates, claim numbers, etc)
      - parameters stored in single database for all scripts
      - improved error handling (non-fatal unexpected events)
      - automatic system restart
    - benefits: saved 200 man-days per test cycle
      - €120,000!
    - Chapter 23, pp 437-445, Experiences of Test Automation

- 56. 2-Architecture
  - Summary: key points
    - Structure your automation testware to suit you
    - Testware comprises many files, etc. which need to be given a home
    - Use good software development standards

- 57. 3-Pre and Post Processing: Pre- and Post-Processing
  - Tutorial sessions: 1 Managing, 2 Architecture, 3 Pre- and Post, 4 Scripting, 5 Comparison, 6 Advice
  - Ref. Chapter 6: Automating Pre- and Post-Processing, “Software Test Automation”
  - Contents
    - Automating more than tests
    - Test status

- 58. 3-Pre and Post Processing
  - What is pre- and post-processing?
    - Pre-processing: automation of setup tasks necessary to fulfil test case prerequisites
    - Post-processing: automation of post-execution tasks necessary to complete verification and housekeeping
    - These terms are useful because there are lots of tasks, they come in packs, many are the same, and they can be easily automated
  - Automated tests / automated testing (two-column diagram; legend: manual process, automated process)
    - Automated tests
      - Select / identify test cases to run
      - Set up test environment: create test environment, load test data
      - Repeat for each test case: set up test prerequisites, execute, compare results, log results, analyse test failures, report defect(s), clear up after test case
      - Clear up test environment: delete unwanted data, save important data
      - Summarise results
    - Automated testing
      - Select / identify test cases to run
      - Set up test environment: create test environment, load test data
      - Repeat for each test case: set up test prerequisites, execute, compare results, log results, clear up after test case
      - Clear up test environment: delete unwanted data, save important data
      - Summarise results
      - Analyse test failures
      - Report defects

- 59. 3-Pre and Post Processing
  - Examples
    - pre-processing
      - copy scripts from a common script set (e.g. open, saveas)
      - delete files that shouldn’t exist when test starts
      - set up data in files
      - copy files to where the tool expects to find them
      - save normal default file and rename the test file to the default (for this test)
    - post-processing
      - copy results to where the comparison process expects to find them
      - delete actual results if they match expected (or archive if required)
      - rename a file back to its normal default
  - Outside the box: Jonathan Kohl
    - task automation (throw-away scripts)
      - entering data sets to 2 browsers (verify by watching)
      - install builds, copy test data
    - support manual exploratory testing
    - testing under the GUI to the database (“side door”)
    - don’t believe everything you see
      - 1000s of automated tests pass too quickly
      - monitoring tools to see what was happening
      - “if there’s no error message, it must be ok”
        - defects didn’t make it to the test harness
        - overloaded system ignored data that was wrong
    - Chapter 19, pp 355-373, Experiences of Test Automation
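
The pre- and post-processing examples above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the directory layout and the function names `pre_process` and `post_process` are hypothetical, and "match" here means a byte-for-byte comparison rather than any particular tool's comparator.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Iterable


def pre_process(script_set: Path, tool_dir: Path,
                shared_scripts: Iterable[str], stale_files: Iterable[str]) -> None:
    """Copy shared scripts to where the tool expects them and delete leftovers."""
    tool_dir.mkdir(parents=True, exist_ok=True)
    for name in shared_scripts:
        shutil.copy2(script_set / name, tool_dir / name)
    for name in stale_files:
        # Files that should not exist when the test starts.
        (tool_dir / name).unlink(missing_ok=True)


def post_process(tool_dir: Path, results_dir: Path,
                 actual_name: str, expected_file: Path) -> bool:
    """Move the actual result to the results area; delete it if it matches expected."""
    results_dir.mkdir(parents=True, exist_ok=True)
    moved = results_dir / actual_name
    shutil.move(str(tool_dir / actual_name), str(moved))
    matched = moved.read_bytes() == expected_file.read_bytes()
    if matched:
        moved.unlink()  # nothing left to analyse, so the file is not kept
    return matched
```

A control script would call `pre_process` before handing over to the test tool and `post_process` afterwards, so the tool's own folder layout never becomes the home of the testware.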

- 60. 3-Pre and Post Processing
  - Automation + (diagram). Labels: DSTL; structured testware architecture; disposable scripts; utilities (e.g. data load); pre- and post-processing; metrics (e.g. EMTE); loosen your oracles (ETA, monkeys); traditional test automation (execution, comparison); manual testing

- 61. 3-Pre and Post Processing
  - Test status: pass or fail?
    - tool cannot judge pass or fail
      - only “match” or “no match”
      - assumption: expected results are correct
    - when a test fails (i.e. the software fails)
      - need to analyse the failure
        - true failure? write up bug report
        - test fault? fix the test (e.g. expected result)
        - known bug or failure affecting many automated tests?
      - this can eat a lot of time in automated testing
      - solution: additional test statuses
  - Test statuses for automation

  | Compare to | No differences found | Differences found |
  |---|---|---|
  | (true) expected outcome | Pass | Fail |
  | expected fail outcome | Expected Fail | Unknown |
  | don’t know / missing | Unknown | Unknown |

  - other possible additional test statuses
    - environment problem (e.g. network down, timeouts)
    - set-up problems (files missing)
    - things that affect the tests that aren’t related to the assessment of matching expected results
    - test needs to be changed but not done yet
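
The status table above is a small lookup, and a minimal Python sketch may make it concrete. The names `Baseline`, `Status` and `classify` are hypothetical, and outcomes are compared as plain strings purely for illustration.

```python
from enum import Enum
from typing import Optional


class Baseline(Enum):
    EXPECTED = "(true) expected outcome"
    EXPECTED_FAIL = "expected fail outcome"
    MISSING = "don't know / missing"


class Status(Enum):
    PASS = "Pass"
    FAIL = "Fail"
    EXPECTED_FAIL = "Expected Fail"
    UNKNOWN = "Unknown"


def classify(actual: str, baseline: Optional[str], kind: Baseline) -> Status:
    """Map one comparison onto the status table from the slide."""
    if kind is Baseline.MISSING or baseline is None:
        return Status.UNKNOWN
    no_differences = actual == baseline
    if kind is Baseline.EXPECTED:
        return Status.PASS if no_differences else Status.FAIL
    # Comparing against an expected fail outcome: a match means the known
    # bug is still there; any difference needs a person to look at it.
    return Status.EXPECTED_FAIL if no_differences else Status.UNKNOWN
```

Reporting `Expected Fail` separately keeps a known bug that affects many tests from being analysed again on every run.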

- 62. 3-Pre and Post Processing
  - Summary: key points
    - Pre- and post-processing to automate setup and clear-up tasks
    - Test status is more than just pass / fail

- 63. 4-Scripting: Scripting Techniques
  - Tutorial sessions: 1 Managing, 2 Architecture, 3 Pre- and Post, 4 Scripting, 5 Comparison, 6 Advice
  - Ref. Chapter 3: Scripting Techniques, “Software Test Automation”
  - Contents
    - Objectives of scripting techniques
    - Different types of scripts
    - Domain specific test language

- 64. 4-Scripting
  - Objectives of scripting techniques
    - implement your testware architecture
    - to reduce costs
      - make it easier to build automated tests
        - avoid duplication
    - greater return on investment
      - better testing support
      - greater portability
        - environments & hardware platforms
    - enhance capabilities
      - avoid excessive maintenance costs
        - greater reuse of functional, modular scripting
      - achieve more testing for same (or less) effort
        - testing beyond traditional manual approaches
    - best achieved by keywords or DSTL

- 65. 4-Scripting
  - Capture/replay = linear script (diagram). Labels: testers; (manual) test procedures; instructions and test data; automators; record / edit; test scripts; Test Tool; software under test
  - About capture/replay
    - fair amount of effort to produce scripts
      - similar for each test procedure
      - subsequent editing may also be necessary
    - dominated by maintenance costs
      - scripts are exact mould for software
      - one software change can mean many scripts change
    - script is linear: sequence of every step

- 66. 4-Scripting
  - Example captured script
    - captured individual interactions with software
    - duplicated actions result in duplicated instructions
    - captured script:
      - `FocusOn ‘Open File’`
      - `Type ‘countries’`
      - `LeftMouseClick ‘Open’`
      - `FocusOn ‘Scribble’`
      - `SelectOption ‘List/Add Item’`
      - `FocusOn ‘Add Item’`
      - `Type ‘Sweden’`
      - `LeftMouseClick ‘OK’`
      - `FocusOn ‘Scribble’`
      - `SelectOption ‘List/Add Item’`
      - `FocusOn ‘Add Item’`
      - `Type ‘USA’`
      - `LeftMouseClick ‘OK’`
      - `FocusOn ‘Scribble’`
      - `SelectOption ‘List/Move Item’`
  - Structured scripting (diagram). Labels: testers; (manual) test procedures; high-level instructions and test data; automators; create; test scripts; script library; low-level “how to” instructions; Test Tool; software under test

- 67. 4-Scripting
  - About structured scripting
    - script library for re-used scripts
      - part of testware architecture implementation
        - shared scripts interface to software under test
        - all other scripts interface to shared scripts
    - reduced costs
      - maintenance
        - fewer scripts affected by software changes
      - build
        - individual control scripts are smaller and easier to read (a ‘higher’ level language is used)
  - Structured scripting example
    - test script:
      - `LeftMouseDouble ‘Scribble’`
      - `Call OpenFile(‘countries’)`
      - `Call AddItem(‘Sweden’)`
      - `Call AddItem(‘USA’)`
      - `Call MoveItem(4,1)`
      - `Call AddItem(‘Norway’)`
      - `Call DeleteItem(2)`
      - `Call DeleteItem(7)`
      - `FocusOn ‘Delete Error’`
      - `LeftMouseClick ‘OK’`
      - `FocusOn ‘Scribble’`
      - `Call CloseSaveAs(‘countries2’)`
      - `SelectOption ‘File/Exit’`
    - shared scripts: OpenFile, AddItem, MoveItem, DeleteItem, CloseSaveAs
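
To make the contrast with the captured script concrete, here is a minimal Python sketch of two shared scripts. `RecordingTool` is a hypothetical stand-in for a test execution tool: it only records the low-level instructions it would send, using the instruction names from the captured script. The bodies of `open_file` and `add_item` are assumptions inferred from that captured script, not the author's actual library.

```python
class RecordingTool:
    """Hypothetical tool stand-in that logs low-level instructions."""

    def __init__(self) -> None:
        self.log: list = []

    def focus_on(self, window: str) -> None:
        self.log.append(f"FocusOn '{window}'")

    def type_text(self, text: str) -> None:
        self.log.append(f"Type '{text}'")

    def left_mouse_click(self, button: str) -> None:
        self.log.append(f"LeftMouseClick '{button}'")

    def select_option(self, menu_path: str) -> None:
        self.log.append(f"SelectOption '{menu_path}'")


def open_file(tool: RecordingTool, name: str) -> None:
    """Shared script: the only place that knows how a file is opened."""
    tool.focus_on("Open File")
    tool.type_text(name)
    tool.left_mouse_click("Open")


def add_item(tool: RecordingTool, item: str) -> None:
    """Shared script: the only place that knows how an item is added."""
    tool.focus_on("Scribble")
    tool.select_option("List/Add Item")
    tool.focus_on("Add Item")
    tool.type_text(item)
    tool.left_mouse_click("OK")


def run_test_script(tool: RecordingTool) -> None:
    """Test script: high-level calls only, as in the structured example."""
    open_file(tool, "countries")
    add_item(tool, "Sweden")
    add_item(tool, "USA")
```

Three high-level calls expand to the first thirteen instructions of the captured script. If the 'Add Item' dialog changes, only `add_item` needs editing, which is the maintenance saving the slide describes.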

- 68. 4-Scripting
  - Usable (re-usable) scripts
    - To re-use a script, need to know:
      - what does this script do?
      - what does it need?
      - what does it deliver?
      - what state when it starts?
      - what state when it finishes?
    - Information in a standard place for every script
      - can search for the answers to these questions
  - Data driven (diagram). Labels: testers; (manual) test procedures; high-level instructions; test data; create; data files; automators; create; control scripts; script library; low-level “how to” instructions; Test Tool; software under test

- 69. 4-Scripting
  - About data driven
    - test data extracted from scripts
      - placed into separate data files
    - control script reads data from data file
      - one script implements several tests by reading different data files (reduces script maintenance per test)
    - reduced build cost
      - faster and easier to automate similar test procedures
      - many test variations using different data
    - multiple control scripts required
      - one for each type of test (with varying data)
  - Data-driven example
    - Data file TestCase1, columns FILE, ADD, MOVE, DELETE: FILE countries; ADD Sweden, USA, Norway; MOVE 4,1; DELETE 2, 7
    - Data file TestCase2, columns FILE, ADD, MOVE, DELETE: FILE Europe; ADD France, Germany; MOVE 1,3, 2,2, 5,3; DELETE 1
    - Control script:
      - `For each TESTCASE`
        - `OpenDataFile(TESTCASEn)`
        - `ReadDataFile(RECORD)`
        - `For each record`
          - `ReadDataFile(RECORD)`
          - `Case (Column(RECORD))`
            - `FILE: OpenFile(INPUTFILE)`
            - `ADD: AddItem(ITEM)`
            - `MOVE: MoveItem(FROM, TO)`
            - `DELETE: DeleteItem(ITEM)`
            - `…..`
        - `Next record`
      - `Next TESTCASE`

- 70. 4-Scripting
  - Data-driven: example data file (TestCase1)

  | FILE | ADD | MOVE | DELETE |
  |---|---|---|---|
  | countries |  |  |  |
  |  | Sweden |  |  |
  |  | USA |  |  |
  |  |  | 4,1 |  |
  |  | Norway |  |  |
  |  |  |  | 2 |
  |  |  |  | 7 |

  - Headings indicate actions, but may not be read by a control script (they could be just “comments”)
  - Position represents data for the action (data in the wrong column will cause problems)
  - Tests are not identical – first one has 3 countries, 2 moves, next test had 2 countries, 3 moves. The test data drives the test for things it can deal with: the script responds to data in a fixed position in the data file.
  - Keywords (basic) (diagram). Labels: testers; (manual) test procedures; high-level instructions and test data; test definitions; single control script: “interpreter” / ITE; automators; create; script library; low-level “how to” instructions and keywords; Test Tool; software under test
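
A data-driven control script for the TestCase1 file can be sketched as follows. Assumptions to note: the data file is stored as semicolon-separated text (so that a MOVE value such as `4,1` can keep its comma), the heading row is skipped as a comment, and the action functions simply record what they were asked to do instead of driving a real tool. All function names are hypothetical.

```python
import csv
import io

# TestCase1 from the slide, one action per record, position decides the action.
TESTCASE1 = """\
FILE;ADD;MOVE;DELETE
countries;;;
;Sweden;;
;USA;;
;;4,1;
;Norway;;
;;;2
;;;7
"""


def make_actions(calls: list) -> list:
    """Return one action per column, in the fixed order FILE, ADD, MOVE, DELETE."""
    def open_file(name: str) -> None:
        calls.append(("OpenFile", name))

    def add_item(item: str) -> None:
        calls.append(("AddItem", item))

    def move_item(pair: str) -> None:
        source, target = (int(part) for part in pair.split(","))
        calls.append(("MoveItem", source, target))

    def delete_item(position: str) -> None:
        calls.append(("DeleteItem", int(position)))

    return [open_file, add_item, move_item, delete_item]


def run_data_file(text: str, actions: list) -> None:
    """Control script: read each record and dispatch on the column position."""
    reader = csv.reader(io.StringIO(text), delimiter=";")
    next(reader)  # headings are treated as comments and not interpreted
    for record in reader:
        for column, value in enumerate(record):
            if value:
                actions[column](value)
```

Because dispatch depends only on position, a value typed into the wrong column silently triggers the wrong action, which is the weakness the slide points out.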

- 71. 4-Scripting
  - About keywords
    - single control script (Interactive Test Environment)
      - improvements to this benefit all tests (ROI)
      - extracts high-level instructions from scripts
    - ‘test definition’
      - independent of tool scripting language
      - a language tailored to testers’ requirements
        - software design (unit test: calculate one interest payment)
        - application domain (system test: summarise interest for one customer)
        - business processes (acceptance test: end of day run, all interest payments)
    - more tests, fewer scripts
  - Comparison of data files
    - data-driven approach:

  | FILE | ADD | MOVE | DELETE | SAVE |
  |---|---|---|---|---|
  | Europe |  |  |  |  |
  |  | France |  |  |  |
  |  | Italy |  |  |  |
  |  |  | 1,3 |  |  |
  |  |  | 2,2 |  |  |
  |  |  |  | 1 |  |
  |  |  | 5,2 |  |  |
  |  |  |  |  | Test2 |

  - keyword approach:
    - `ScribbleOpen Europe`
    - `AddToList France Italy`
    - `MoveItem 1 to 3`
    - `MoveItem 2 to 2`
    - `DeleteItem 1`
    - `MoveItem 5 to 2`
    - `SaveAs Test2`
  - which is easier to read/understand? The keyword version looks more like a test.
  - what happens when the test becomes large and complex?
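
The single control script ("interpreter") for the keyword version can be sketched in Python. This is a minimal illustration, not the author's implementation: the keyword names come from the slide, while `interpret`, `make_keywords` and the recording behaviour are hypothetical.

```python
TEST_DEFINITION = """\
ScribbleOpen Europe
AddToList France Italy
MoveItem 1 to 3
MoveItem 2 to 2
DeleteItem 1
MoveItem 5 to 2
SaveAs Test2
"""


def make_keywords(calls: list) -> dict:
    """Map each keyword to a function; here they only record the action."""
    def scribble_open(name: str) -> None:
        calls.append(("open", name))

    def add_to_list(*items: str) -> None:
        for item in items:
            calls.append(("add", item))

    def move_item(source: str, _to: str, target: str) -> None:
        calls.append(("move", int(source), int(target)))

    def delete_item(position: str) -> None:
        calls.append(("delete", int(position)))

    def save_as(name: str) -> None:
        calls.append(("save", name))

    return {
        "ScribbleOpen": scribble_open,
        "AddToList": add_to_list,
        "MoveItem": move_item,
        "DeleteItem": delete_item,
        "SaveAs": save_as,
    }


def interpret(test_definition: str, keywords: dict) -> None:
    """Single control script: look up each keyword and pass it its arguments."""
    for line in test_definition.splitlines():
        words = line.split()
        if not words:
            continue
        name, args = words[0], words[1:]
        if name not in keywords:
            raise KeyError(f"unknown keyword: {name}")
        keywords[name](*args)
```

One interpreter serves every test definition, so adding a test means writing more keyword lines, not more scripts.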

- 72. 4-Scripting
  - Advanced keyword-driven (diagram). Labels: merged test procedure / test definition; action words; test procedures / definitions (language for testers); testers; high-level instructions and test data; (manual) test procedures; single control script: “interpreter” / ITE; create; script library; low-level “how to” instructions and keyword scripts; Test Tool; software under test

- 73. 4-Scripting
  - Domain Specific Test Language (DSTL)
    - test procedures and test definitions are similar
      - both describe sequences of test cases
        - giving test inputs and expected results
    - combine into one document
      - can include all test information
      - avoids extra ‘translation’ step
      - testers specify tests regardless of whether they will be run manually or automated
      - automators implement required keywords
  - Keywords in the test definition language
    - multiple levels of keywords possible
      - high level for business functionality
      - low level for component testing
    - composite keywords
      - define keywords as sequence of other keywords
      - gives greater flexibility (testers can define composite keywords) but risk of chaos
    - format
      - freeform, structured, or standard notation (e.g. XML)

- 74. 4-Scripting
  - Example use of keywords: create a new account, order 2 items and check out

  | Keyword | Firstname | Surname | Email address | Password |
  |---|---|---|---|---|
  | Create Account | Edward | Brown | ebrown@gmail.com | apssowdr |

  | Keyword | Item | Num Items | Check Price for Items |
  |---|---|---|---|
  | Order Item | 1579 | 3 | 15.30 |
  | Order Item | 2598 |  | 12.99 |
  | Checkout |  |  |  |

  - Documenting keywords

  | Field | What to record |
  |---|---|
  | Name | Name for this keyword |
  | Purpose | What this keyword does |
  | Parameters | Any inputs needed, outputs produced |
  | Preconditions | What needs to be true before using it, where valid |
  | Postconditions | What will be true after it finishes |
  | Error conditions | What errors it copes with, what is returned |
  | Example | An example of the use of the keyword |

  Source: Martin Gijsen. See also Hans Buwalda book & articles.

- 75. 4-Scripting
  - Example keyword documentation - 1

  | Field | Content |
  |---|---|
  | Name | Create account |
  | Purpose | Creates a new account |
  | Parameters | \*First name: 2 to 32 characters; \*Last name: 2 to 32 characters; \*Email address: also serves as account id; \*Password: 4 to 32 characters |
  | Pre-conditions | Account doesn't exist for this person |
  | Post-conditions | Account created (including email confirmation); order screen displayed |
  | Error conditions |  |
  | Example | (see example) |

  \*mandatory

  - Example keyword documentation - 2

  | Field | Content |
  |---|---|
  | Name | Order item |
  | Purpose | Orders items from the online supplier |
  | Parameters | \*Item number: 1000 to 9999, in catalogue; Number of items wanted: 1 to Max-for-item (if blank, assumes 1) |
  | Pre-conditions | Valid account logged in; items in stock (at least one); prices available for all items in stock |
  | Post-conditions | Total for all items ordered is available (for checkout); number of available items decreased by number ordered |
  | Error conditions | Item(s) not in stock |
  | Example | (see example) |

  \*mandatory

- 76. 4-Scripting
  - Implementing keywords
    - ways to implement keywords
      - scripting language (of a tool)
      - programming language (e.g. Java)
      - use what your developers are familiar with!
    - ways of creating an architecture to support a DSTL
      - commercial, open source or home-grown framework
      - spreadsheet or database for test descriptions
  - Tools / frameworks
    - commercial tools
      - ATRT, Axe, Certify, eCATT, FASTBoX, Liberation, Ranorex, TestComplete, TestDrive, Tosca Testsuite
    - open source
      - FitNesse, JET, Open2Test, Power Tools, Rasta, Robot Framework, SAFS, STAF, TAF Core
    - I can email you my Tool List
      - test execution and framework tools
      - info@dorothygraham.co.uk

- 77. 4-Scripting
  - Execution-tool-independent framework (diagram). Labels: test procedures / definitions (tool independent); some tests run manually; tool-independent scripting language framework; tool-dependent script libraries; Test Tool; Another Test Tool; software under test (SUT)
  - Summary
    - Objectives of good scripting
      - to reduce costs and enhance capabilities
    - Many types of scripting
      - e.g. capture playback, structured, data-driven, keyword
    - Keyword/DSTL the most sophisticated
      - yields significant benefits for investment
    - Increased tester productivity
      - tailored front end, test language, tool independence

- 79. Keyword-Driven Exercise

  Exercise (Part 1): Using only the keywords described below, specify two or three test cases, each comprising a short sequence of keywords, for an online grocery store.

  Keywords: the following keywords are available.

  | Keyword | Arguments |
  |---|---|
  | Basket should contain | “n items” where n is the number of items in the basket |
  | Checkout using credit card | Card type, Card number, Security code, Expiry date |
  | Create a valid user | Username, Password, Address, Postcode |
  | Last order status should be | One of: “Delivery scheduled”, “Delivery due”, “Delivered” |
  | Login | Username, Password |
  | Logout |  |
  | Put items in basket | List of items |

  Example: the following is an example of a short test case.

  | Test Case | Action | Argument | Argument |
  |---|---|---|---|
  | Existing user can place an order | Login | Joe Shopper | Passwurd |
  |  | Put items in basket | Carrots | Cabbage |
  |  | … | Potatoes |  |
  |  | Basket should contain | 3 items |  |
  |  | Checkout using credit card | Mastercard | 1234 5678 0123 |
  |  | … | 123 | 01-11 |
  |  | Logout |  |  |

  Note that “…” in the Action column indicates a continuation line, i.e. the row contains more arguments for the previous keyword. Multiple continuation lines are allowed.

  Exercise (Part 2): Design 2 additional keywords suitable for testing this online grocery store and specify one or two additional test cases that use your new keywords.

  © Grove Consultants ATT090117

- 81. Keyword-Driven Exercise: blank worksheet with columns Test Case, Action, Argument, Argument

- 83. Keyword-Driven Solution

  | Test Case | Action | Argument | Argument |
  |---|---|---|---|
  | New user can create account and place order | Create a valid user | Joe Shopper | Passwurd |
  |  | … | 6 Lower Road Worcester | WR1 2AB |
  |  | Put items in basket | Cornflakes | Milk |
  |  | … | Tea bags | Coffee |
  |  | … | Sugar | Biscuits |
  |  | Basket should contain | 6 items |  |
  |  | Checkout using credit card | Mastercard | 1234 5678 0123 |
  |  | … | 123 | 01-11 |
  |  | Logout |  |  |
  | Existing user can query order | Login | Joe Shopper | Passwurd |
  |  | Last order status should be | Delivery scheduled |  |
  |  | Logout |  |  |

- 85. 5-Comparison: Automated Comparison
  - Tutorial sessions: 1 Managing, 2 Architecture, 3 Pre- and Post, 4 Scripting, 5 Comparison, 6 Advice
  - Ref. Chapter 4: Automated Comparison, “Software Test Automation”
  - Contents
    - Automated test verification
    - Test sensitivity

- 86. 5-Comparison
  - Perverse persistence: Michael Williamson
    - testing Webmaster tools at Google (new to testing)
    - QA used Eggplant (image processing tool)
    - new UI broke existing automation
    - automate 4 or 5 functions
    - comparing bitmap images: inaccurate and slow
    - testers had to do automation maintenance
      - not worth developers learning tool’s language
    - after 6 months, went for more appropriate tools
    - QA didn’t use the automation, tested manually!
      - tool was just running in the background
    - Chapter 17, pp 321-338, Experiences of Test Automation
  - Checking versus testing
    - checking confirms that things are as we think
      - e.g. check that the code still works as before
    - testing is a process of exploration, discovery, investigation and learning
      - e.g. what are the threats to value to stakeholders, give information
    - checks are machine-decidable
      - if it’s automated, it’s probably a check
    - tests require sapience
      - including “are the checks good enough”
    - Source: Michael Bolton, www.developsense.com/blog/2009/08/testing-vs-checking/

- 87. 5-Comparison
  - General comparison guidelines
    - keep as simple as possible
    - well documented
    - standardise as much as possible
    - avoid bit-map comparison
    - poor comparisons destroy good tests
    - divide and conquer:
      - use a multi-pass strategy
      - compare different aspects in each pass
  - Two types of comparison
    - dynamic comparison
      - done during test execution
      - performed by the test tool
      - can be used to direct the progress of the test
        - e.g. if this fails, do that instead
      - fail information written to test log (usually)
    - post-execution comparison
      - done after the test execution has completed
      - good for comparing files or databases
      - can be separated from test execution
      - can have different levels of comparison
        - e.g. compare in detail if all high level comparisons pass

- 88. 5-Comparison
  - Comparison types compared (diagram)
    - scribble1.scp: test script (test input, comparison instructions)
    - countries.dcm: initial document
    - dynamic comparison: error message as expected? (recorded in log.txt, the log)
    - Scribble produces countries2.dcm (edited document)
    - post-execution comparison: Compare the edited document with countries2.dcm (expected output), producing diffs.txt (differences)
  - Comparison process
    - few tools for post-execution comparison
    - simple comparators come with operating systems but do not have pattern matching
      - e.g. Unix ‘diff’, Windows ‘fc’; third-party comparators such as UltraCompare are also available
    - text manipulation tools widely available
      - sed, awk, grep, egrep, Perl, Tcl, Python
    - use pattern matching tools with a simple comparator to make a ‘comparison process’
    - use masks and filters for efficiency
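
A ‘comparison process’ of the kind described above, pattern matching followed by a simple comparator, can be sketched with Python's standard `re` and `difflib` modules. The mask patterns and the placeholder tokens `<DATE>` and `<TIME>` are assumptions chosen for illustration; a real process would mask whatever is legitimately variable in its own outputs.

```python
import difflib
import re

# Each mask replaces text that is allowed to vary with a fixed token.
MASKS = [
    (re.compile(r"\b\d{1,2} (?:Jan|Feb|Mar|Apr|May|Jun|Jul|Aug|Sep|Oct|Nov|Dec) \d{4}\b"), "<DATE>"),
    (re.compile(r"\b\d{1,2}:\d{2}\b"), "<TIME>"),
]


def apply_masks(lines):
    """Pattern-matching pass: hide values that are expected to differ."""
    masked = []
    for line in lines:
        for pattern, token in MASKS:
            line = pattern.sub(token, line)
        masked.append(line)
    return masked


def compare(expected, actual):
    """Simple comparator pass: return the differences that remain after masking."""
    return list(difflib.unified_diff(
        apply_masks(expected), apply_masks(actual), lineterm="", n=0))
```

An empty result means the outputs match once the masked fields are ignored; anything left in the list is a difference worth a person's attention.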

- 89. 5-Comparison
  - Test sensitivity
    - the more data there is available, the easier it is to analyse faults and debug
    - the more data that is compared, the more sensitive the test
    - the more sensitive a test, the more likely it is to fail (this can be both a good and a bad thing)

- 90. 5-Comparison
  - Sensitive versus specific (robust) test (diagram)
    - test is supposed to change only this field
    - specific test verifies this field only
    - unexpected change occurs elsewhere in the test outcome
    - sensitive test verifies the entire outcome
  - Too much sensitivity = redundancy (diagram)
    - three tests, each changes a different field
    - unexpected change occurs for every test
    - if all tests are specific, the unexpected change is missed
    - if all tests are sensitive, they all show the unexpected change
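
The difference between the two kinds of check can be shown in a short Python sketch. The record fields and values are invented for illustration, and the outcome is modelled as a plain dictionary.

```python
def specific_check(expected: dict, actual: dict, field: str) -> bool:
    """Specific (robust) test: verify only the field the test is meant to change."""
    return actual[field] == expected[field]


def sensitive_check(expected: dict, actual: dict) -> bool:
    """Sensitive test: verify the entire outcome."""
    return actual == expected


# Hypothetical outcome: the test changes "city"; "balance" changes unexpectedly.
EXPECTED = {"name": "J Smith", "city": "Leeds", "balance": 100}
ACTUAL = {"name": "J Smith", "city": "Leeds", "balance": 90}

print(specific_check(EXPECTED, ACTUAL, "city"))
print(sensitive_check(EXPECTED, ACTUAL))
```

The specific check reports a match and misses the balance change, while the sensitive check reports a mismatch. If every test in a suite were sensitive, each one would report that same unexpected change.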

- 91. 5-Comparison
  - Using test sensitivity
    - sensitive tests:
      - few, at high level
      - breadth / sanity checking tests
      - good for regression / maintenance
    - specific/robust tests:
      - many, at detailed level
      - focus on specific aspects
      - good for development
    - A good test automation strategy will plan a combination of sensitive and specific tests
  - Summary: key points
    - Balance dynamic and post-execution comparison
    - Balance sensitive and specific tests
    - Use masking and filters to adjust sensitivity

- 93. Comparison Illustration

  The following examples illustrate some of the problems in automated comparison. Below are the expected outcome and the actual outcome for a test. Do they match? I.e. does the test pass? The expected results were taken from a previous test that was confirmed as correct.

  Test objective: Ensure that the core processing is correct, i.e. the correct error messages are produced, the progress of the order is correctly logged, and the right number of things have been ordered. It doesn’t matter when the test is run, what specific things are ordered, or the exact number assigned to the order. The total should be correct for the things that have been ordered, however.

  Test input (high level conditions): Log on as existing customer (software gets the first one in the list of existing customers). Order one thing that is not in stock (from a pre-defined table). Order three things that are in stock (3 things at random locations in the stock database). Total the cost of items ordered.

  | Expected outcome | Actual outcome |
  |---|---|
  | Order number X43578 | Message: “Log-on accepted, M. Jones” |
  | Date: 24 Jan 2008 | Order number X43604 |
  | Time 11:02 | Message: “Out of stock, anti-gravity boots” |
  | Message: “Log-on accepted, J. Smith” | Message: “Add to basket, 1 nose stud” |
  | Message: “Add to basket, 1 toaster” | Add to total: £4.99 |
  | Message: “Out of stock, mountain bike” | Message: “Add to basket, 1 car booster seat” |
  | Message: “Add to basket, 1 computer mouse” | Add to total: £69.95 |
  | Message: “Add to basket, 1 pedometer” | Message: “Add to basket, 1 pedometer” |
  | Add to total: £15.95 | Add to total: £4.95 |
  | Add to total: £14.99 | Message: “Total due is £79.99” |
  | Add to total: £4.95 | Message: “Check out J Smith” |
  | Message: “Check out J Smith” | Date: 18 Feb 2008 |
  | Message: “Total due is £35.89” | Time 13:08 |

  Has this test passed or failed? How long did it take you to decide? Would automated comparison be better / quicker?

- 94. Comparison Illustration

  Computer matching attempt No. 1:

  | Expected outcome | Actual outcome | Comparison result |
  | --- | --- | --- |
  | Test run number X43578 | Message: “Log-on accepted, M. Jones” | FAIL |
  | Date: 24 Jan 2008 | Test run number X43604 | FAIL |
  | Time 11:02 | Message: “Out of stock, anti-gravity boots” | FAIL |
  | Message: “Log-on accepted, J. Smith” | Message: “Add to basket, 1 nose stud” | FAIL |
  | Message: “Add to basket, 1 toaster” | Add to total: £4.99 | FAIL |
  | Message: “Out of stock, mountain bike” | Message: “Add to basket, 1 car booster seat” | FAIL |
  | Message: “Add to basket, 1 computer mouse” | Add to total: £69.95 | FAIL |
  | Message: “Add to basket, 1 pedometer” | Message: “Add to basket, 1 pedometer” | PASS |
  | Add to total: £15.95 | Add to total: £4.95 | FAIL |
  | Add to total: £14.99 | Message: “Total due is £79.99” | FAIL |
  | Add to total: £4.95 | Message: “Check out J Smith” | FAIL |
  | Message: “Check out J Smith” | Date: 18 Feb 2008 | FAIL |
  | Message: “Total due is £35.89” | Time 13:08 | FAIL |

  Well, this isn’t terribly helpful, is it! The only thing that passed was just a coincidence (ordering the same item in the two tests). What filters could we use to get the computer’s simple comparison to give a more meaningful result?

  We can see that some things would have a better chance of matching if the results were in the same order. For example, Date and Time come last in the actual outcome but nearly first in our expected outcome. Let’s apply a filter to both sets of results to put the individual lines in alphabetical order. Note that this now destroys the sequence of steps as a test (this may be OK for the purposes of comparing the individual steps).

- 95. Comparison Illustration

  After applying the filter: Sort into alphabetical order:

  | Expected outcome | Actual outcome | Comparison result |
  | --- | --- | --- |
  | Add to total: £14.95 | Add to total: £4.95 | FAIL |
  | Add to total: £15.95 | Add to total: £4.99 | FAIL |
  | Add to total: £4.99 | Add to total: £69.95 | FAIL |
  | Date: 24 Jan 2008 | Date: 18 Feb 2008 | FAIL |
  | Message: “Add to basket, 1 computer mouse” | Message: “Add to basket, 1 car booster seat” | FAIL |
  | Message: “Add to basket, 1 pedometer” | Message: “Add to basket, 1 nose stud” | FAIL |
  | Message: “Add to basket, 1 toaster” | Message: “Add to basket, 1 pedometer” | FAIL |
  | Message: “Check out J Smith” | Message: “Check out J Smith” | PASS |
  | Message: “Log-on accepted, J. Smith” | Message: “Log-on accepted, M. Jones” | FAIL |
  | Message: “Out of stock, mountain bike” | Message: “Out of stock, anti-gravity boots” | FAIL |
  | Message: “Total due is £35.89” | Message: “Total due is £79.99” | FAIL |
  | Test run number X43578 | Test run number X43604 | FAIL |
  | Time 11:02 | Time 13:08 | FAIL |

  We don’t seem to be much further forward now, although a different item has now passed! There are two items that would have matched if they had been in a row higher or lower. There is also something very strange about this result – the thing that has passed is ‘Message: “Check out J Smith”’. But we logged in as two different people! So the one thing shown as a Pass is actually a bug!!

  What other filters could we apply to get a better result from automated comparison?
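
The two matching attempts above can be reproduced in a few lines. This is a minimal Python sketch, not the tooling used in the tutorial: the names `EXPECTED`, `ACTUAL`, `compare_lines` and `passed` are assumptions introduced for illustration, and the outcome lines are transcribed from the first matching attempt with straight quotes in place of the curly ones.

```python
from itertools import zip_longest

# Outcome lines transcribed from the illustration (straight quotes for simplicity).
EXPECTED = [
    "Test run number X43578",
    "Date: 24 Jan 2008",
    "Time 11:02",
    'Message: "Log-on accepted, J. Smith"',
    'Message: "Add to basket, 1 toaster"',
    'Message: "Out of stock, mountain bike"',
    'Message: "Add to basket, 1 computer mouse"',
    'Message: "Add to basket, 1 pedometer"',
    "Add to total: £15.95",
    "Add to total: £14.99",
    "Add to total: £4.95",
    'Message: "Check out J Smith"',
    'Message: "Total due is £35.89"',
]
ACTUAL = [
    'Message: "Log-on accepted, M. Jones"',
    "Test run number X43604",
    'Message: "Out of stock, anti-gravity boots"',
    'Message: "Add to basket, 1 nose stud"',
    "Add to total: £4.99",
    'Message: "Add to basket, 1 car booster seat"',
    "Add to total: £69.95",
    'Message: "Add to basket, 1 pedometer"',
    "Add to total: £4.95",
    'Message: "Total due is £79.99"',
    'Message: "Check out J Smith"',
    "Date: 18 Feb 2008",
    "Time 13:08",
]


def compare_lines(expected, actual):
    """Compare two outcomes position by position; return (expected, actual, verdict) rows."""
    rows = []
    for exp, act in zip_longest(expected, actual, fillvalue="<missing>"):
        rows.append((exp, act, "PASS" if exp == act else "FAIL"))
    return rows


def passed(rows):
    """Return the expected-side text of every row that matched."""
    return [exp for exp, _act, verdict in rows if verdict == "PASS"]


# Attempt 1: compare the lines in the order they were produced.
naive = compare_lines(EXPECTED, ACTUAL)

# Attempt 2: apply the "sort into alphabetical order" filter to both sides first.
sorted_rows = compare_lines(sorted(EXPECTED), sorted(ACTUAL))

print("unsorted passes:", passed(naive))
print("sorted passes:  ", passed(sorted_rows))
```

Run as written, the unsorted comparison passes only the pedometer line and the sorted comparison passes only the check-out line, which is the misleading pass discussed above.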

- 96. Comparison Illustration

  Here are some other filters we could apply:

  Filter:
  - After {Time} change if in the correct format to <nn:nn>
  - After {Test run number} change if in the correct format to <Xnnnnn>
  - After {Date} change if in the correct format to <nn Aaa nn>
  - After {Message: “Add to basket, } change to <item> all text before {”}
  - After {Message: “Out of stock, } change to <item> all text before {”}

  Here is our result after applying these filters:

  | Expected outcome | Actual outcome | Comparison result |
  | --- | --- | --- |
  | Add to total: £14.95 | Add to total: £4.95 | FAIL |
  | Add to total: £15.95 | Add to total: £4.99 | FAIL |
  | Add to total: £4.99 | Add to total: £69.95 | FAIL |
  | Date: <nn Aaa nn> | Date: <nn Aaa nn> | PASS |
  | Message: “Add to basket, <item>” | Message: “Add to basket, <item>” | PASS |
  | Message: “Add to basket, <item>” | Message: “Add to basket, <item>” | PASS |
  | Message: “Add to basket, <item>” | Message: “Add to basket, <item>” | PASS |
  | Message: “Check out J Smith” | Message: “Check out J Smith” | PASS |
  | Message: “Log-on accepted, J. Smith” | Message: “Log-on accepted, M. Jones” | FAIL |
  | Message: “Out of stock, <item>” | Message: “Out of stock, <item>” | PASS |
  | Message: “Total due is £35.89” | Message: “Total due is £79.99” | FAIL |
  | Test run number <Xnnnnn> | Test run number <Xnnnnn> | PASS |
  | Time <nn:nn> | Time <nn:nn> | PASS |

  We were not interested in checking what items were ordered – if we aren’t interested in the amounts either, we could apply further filters to the amounts.

  Note that there are two aspects where simple comparisons such as those shown here are not really adequate. We want to know that the total for the amount ordered is correct (by the way, the other bug is that the total for the Actual outcome should be £79.89). In order to check this automatically, we would need to define a variable, add the individual item amounts to it, and check that its total was the same as that calculated by the application. This can certainly be done, but it takes additional effort to program it into the comparison process!

  Similarly, we could store the name used at log-on and compare it to the name used at check out, and this would find that bug (but also requires additional work).

  Conclusion: automated comparison is not trivial!
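
The masking filters and the two extra checks described above could be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the comparison tool used in the tutorial: the regular expressions, the names `FILTERS`, `mask`, `first_match`, `summed_amounts` and `normalise`, and the rule that full stops in names are ignored (so that “J. Smith” and “J Smith” count as the same customer) are all introduced here for illustration.

```python
import re
from decimal import Decimal

# Actual outcome lines from the illustration (straight quotes for simplicity).
ACTUAL = [
    'Message: "Log-on accepted, M. Jones"',
    "Test run number X43604",
    'Message: "Out of stock, anti-gravity boots"',
    'Message: "Add to basket, 1 nose stud"',
    "Add to total: £4.99",
    'Message: "Add to basket, 1 car booster seat"',
    "Add to total: £69.95",
    'Message: "Add to basket, 1 pedometer"',
    "Add to total: £4.95",
    'Message: "Total due is £79.99"',
    'Message: "Check out J Smith"',
    "Date: 18 Feb 2008",
    "Time 13:08",
]

# Masking filters modelled on the handout's list. Each pattern only matches a
# line in the expected format, so a malformed line stays unmasked and still fails.
FILTERS = [
    (re.compile(r"^Time \d{2}:\d{2}$"), "Time <nn:nn>"),
    (re.compile(r"^Test run number X\d{5}$"), "Test run number <Xnnnnn>"),
    (re.compile(r"^Date: \d{2} [A-Z][a-z]{2} \d{4}$"), "Date: <nn Aaa nn>"),
    (re.compile(r'^(Message: "(?:Add to basket|Out of stock), )[^"]*"$'), r'\1<item>"'),
]

AMOUNT = re.compile(r"^Add to total: £(\d+\.\d{2})$")
TOTAL = re.compile(r'^Message: "Total due is £(\d+\.\d{2})"$')
LOGON = re.compile(r'^Message: "Log-on accepted, (.+)"$')
CHECKOUT = re.compile(r'^Message: "Check out (.+)"$')


def mask(line):
    """Apply every masking filter to one outcome line."""
    for pattern, replacement in FILTERS:
        line = pattern.sub(replacement, line)
    return line


def first_match(pattern, lines):
    """Return group 1 of the first line matching the pattern, or None."""
    for line in lines:
        found = pattern.match(line)
        if found:
            return found.group(1)
    return None


def summed_amounts(lines):
    """Add up every 'Add to total' amount, using Decimal to avoid float rounding."""
    total = Decimal("0")
    for line in lines:
        found = AMOUNT.match(line)
        if found:
            total += Decimal(found.group(1))
    return total


def normalise(name):
    """Assumed rule: ignore full stops so 'J. Smith' equals 'J Smith'."""
    return name.replace(".", "").strip()


masked = [mask(line) for line in ACTUAL]
computed_total = summed_amounts(ACTUAL)
reported_total = Decimal(first_match(TOTAL, ACTUAL))
logon_name = normalise(first_match(LOGON, ACTUAL))
checkout_name = normalise(first_match(CHECKOUT, ACTUAL))

print("total check:", "PASS" if computed_total == reported_total else "FAIL")
print("name check: ", "PASS" if logon_name == checkout_name else "FAIL")
```

For the actual outcome above, the summed amounts come to £79.89 against a reported £79.99, and the log-on name differs from the check-out name, so both checks fail. These are the two bugs that a simple line-by-line comparison, even with masking, does not report.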

- 97. 6-Advice
  - Final Advice and Direction
  - What next?
    - we have looked at a number of ideas about test automation today
    - what is your situation?
      - what are the most important things for you now?
      - where do you want to go?
      - how will you get there?
    - make a start on your test automation strategy now
      - adapt it to your own situation tomorrow

- 98. 6-Advice
  - Strategy exercise
    - your automation strategy / action plan
      - review your objectives for today (p1)
      - review your “take-aways” so far (p2)
      - identify the top 3 changes you want to make to your automation (top of p3)
      - note your plans now on p3
    - discuss with your neighbour or small group
      - exchange emails, keep in touch
      - form a support group for each other

- 99. 6-Advice
  - Dealing with high level management
    - management support
      - building good automation takes time and effort
      - set realistic expectations
    - benefits and ROI
      - make benefits visible (charts on the walls)
      - metrics for automation
        - to justify it, compare to manual test costs over iterations
        - on-going continuous improvement
          - build cost, maintenance cost, failure analysis cost
          - coverage of system tested
  - Dealing with developers
    - critical aspect for successful automation
      - automation is development
        - may need help from developers
        - automation needs development standards to work
      - testability is critical for automatability
      - why should they work to new standards if there is “nothing in it for them”?
      - seek ways to cooperate and help each other
        - run tests for them
          - in different environments
          - rapid feedback from smoke tests
        - help them design better tests?

- 100. 6-Advice
  - Standards and technical factors
    - standards for the testware architecture
      - where to put things
      - what to name things
      - how to do things
    - but allow exceptions if needed
    - new technology can be great
      - but only if the context is appropriate for it (e.g. Model-Based Testing)
    - use automation “outside the box”
  - On-going automation
    - you are never finished
      - don’t “stand still”: schedule regular review and refactoring of the automation
      - change tools, hardware when needed
      - re-structure if your current approach is causing problems
    - regular “pruning” of tests
      - don’t have “tenured” test suites
        - check for overlap, removed features
        - each test should earn its place

- 101. 6-Advice
  - Information and web sites
    - www.AutomatedTestingInstitute.com
      - TestKIT Conference, Autumn, near Washington DC
      - Test Automation certificate (TABOK)
    - tool information
      - commercial and open source: http://testertools.com
      - open source tools
        - www.opensourcetesting.org
        - http://sourceforge.net
        - http://riceconsulting.com (search on “cheap and free tools”)
    - www.ISTQB.org
      - Expert level in Test Automation (in progress)
  - Summary: successful test automation
    - assigned responsibility for automation tasks
    - realistic, measured objectives (testing ≠ automation)
    - technical factors
      - testware architecture, levels of abstraction, DSTL, scripting and comparison, pre and post processing
    - management support, ROI, continuous improvement

- 102. 6-Advice
  - any more questions? please email me! info@DorothyGraham.co.uk
  - Thank you for coming today
  - I hope this will be useful for you
  - All the best in your automation!