Big data introduction, Hadoop in detail

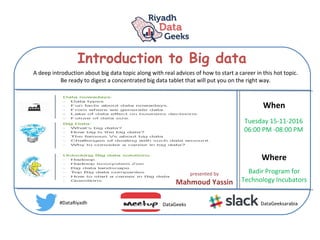

- 1. Introduction to Big Data. When: Tuesday 15-11-2016, 06:00 PM to 08:00 PM. Where: Badir Program for Technology Incubators. #DataRiyadh DataGeeks DataGeeksarabia. An in-depth introduction to big data, along with practical advice on how to start a career in this fast-growing field. Be ready to digest a concentrated big data tablet that will put you on the right track. Presented by Mahmoud Yassin.

- 3. Agenda:
  - Data nowadays: data types; fun facts about data nowadays; where we generate data from; the effect of a lack of data on business decisions; the future of data size.
  - Big data: what is big data? How big is big data? The famous Vs of big data; challenges of dealing with such amounts of data; why consider a career in big data?
  - Unlocking big data solutions: Hadoop; the Hadoop ecosystem zoo; the big data landscape; top big data companies; how to start a career in big data; questions.

- 5. Data types:
  - Structured data: information with a degree of organization that is readily searchable and quickly consolidated into facts. Examples: RDBMS, spreadsheets.
  - Unstructured data: information with a lack of structure that takes time and energy to search and consolidate into facts. Examples: email, documents, images, reports.
  - Semi-structured data: for example, XML data.

- 6. Challenges for unstructured data: How do you store billions of files? How long does it take to migrate hundreds of terabytes of data every 3-5 years? Data has no structure. Resource limitations. Data redundancy. Data backup.

- 7. Sources of data generation: social media, sensors, cell phones, GPS, purchases, the web, e-mail, media streaming, healthcare, IoT.

- 12. Facts about data: 70% of data is created by individuals, but enterprises are responsible for storing and managing 80% of it. 52% of travelers use social media to plan their vacations. 35% of purchases on Amazon come through recommendations. 75% of what people watch on Netflix comes from recommendations.

- 14. Lack of data and business decisions

- 15. Lack of data and business decisions

- 16. Future of data generation

- 17. Can a traditional DBMS solve this?
  - Size: First, data size has increased tremendously, into the range of petabytes (one petabyte = 1,024 terabytes). An RDBMS finds it challenging to handle such huge data volumes. To cope, more central processing units (CPUs) or more memory are added to the database management system, scaling it up vertically.
  - Data types: Second, the majority of the data comes in a semi-structured or unstructured format from social media, audio, video, texts, and emails. Unstructured data is outside the purview of an RDBMS because relational databases simply cannot categorize it. They are designed to accommodate structured data such as weblog, sensor, and financial data.
  - Velocity: Third, big data is generated at a very high velocity. An RDBMS struggles with high velocity because it is designed for steady data retention rather than rapid growth.
  - Cost: Finally, even if an RDBMS is used to handle and store big data, it will turn out to be very expensive.

- 19. What is big data?
  - Big data is a term that describes the large volume of data, both structured and unstructured, that is generated on a day-to-day basis. But it is not the amount of data that is important; it is what organizations do with the data that matters. Big data can be analyzed for insights that lead to better decisions and strategic business moves.
  - Big data is high-volume, high-velocity and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.
  - Big data is a term for data sets that are so large or complex that traditional data processing applications are inadequate to deal with them. Challenges include analysis, capture, data curation, search, sharing, storage, transfer, visualization, querying, updating and information privacy.

- 20. The Vs: Velocity, Volume, Variety, Veracity, Variability, Visualization, Value.

- 21. Big data in action: UPS stores a large amount of data, much of which comes from sensors in its vehicles, such as GPS. ORION (On-Road Integrated Optimization and Navigation), built on data analytics and data science, is the world's largest operations research project. Reported results: savings of more than 8.4 million; 85 million miles off of daily routes; saved $30 million/day.

- 22. Big data in action: Walmart collects 2.5 petabytes of information from 1 million customers across 6,000 stores. Its big data system (Kosmix) supports pricing strategies and advertising campaigns. Reported figures: 30% on online sales; revenue increased by 40%. “We want to know what every product in the world is. We want to know who every person in the world is. And we want to have the ability to connect them together in a transaction.” (Neil Ashe, CEO of Global E-commerce at Walmart)

- 23. Big data in action: personalization of the online store based on your previous searches, driven by data analysis on a big data platform:
  - Which purchases users made in the past.
  - Which items they have in their shopping cart.
  - Which items customers rated and liked.
  - What influence the ratings had on other customers' purchases.

- 24. Big data in action: Zynga collects over 25 terabytes per day from FarmVille to drive higher in-game purchases.

- 25. Big data in quotes:
  - “Without big data analytics, companies are blind and deaf, wandering out onto the web like deer on a freeway.” (Geoffrey Moore, management consultant and author)
  - “Data is the new science. Big Data holds the answers.” (Pat Gelsinger, Chief Executive Officer of VMware)
  - “With too little data, you won’t be able to make any conclusions that you trust. With loads of data you will find relationships that aren’t real… Big data isn’t about bits, it’s about talent.” (Douglas Merrill, CEO and founder of ZestFinance.com)
  - “The world is one big data problem.” (Andrew McAfee, MIT)

- 27. Big data market forecast: The “big data” market is expected to cross $50 billion by 2017.

- 28. Big data jobs trend: The median advertised salary for professionals with big data expertise is $124,000 a year. IBM, Cisco and Oracle together advertised 26,488 open positions that required big data expertise in the last twelve months. Converted to Saudi riyals, $124,000 is about 465,012 SAR a year, or 465,012 / 12 ≈ 38,751 SAR a month.
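
The salary conversion on this slide can be reproduced with a short calculation. This is only a sketch: the exchange rate of 3.7501 SAR per USD is an assumption back-derived from the slide's own 465,012 SAR figure, and the function name is hypothetical.

```python
ANNUAL_SALARY_USD = 124_000   # median advertised salary from the slide
USD_TO_SAR = 3.7501           # assumption: rate implied by the slide's 465,012 SAR figure


def annual_usd_to_sar(annual_usd, rate=USD_TO_SAR):
    """Return (annual, monthly) salary in SAR for an annual salary in USD."""
    annual_sar = annual_usd * rate
    return annual_sar, annual_sar / 12


annual_sar, monthly_sar = annual_usd_to_sar(ANNUAL_SALARY_USD)
print(f"{annual_sar:,.0f} SAR/year, {monthly_sar:,.0f} SAR/month")
```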

- 29. How to solve big data: Hadoop is a big data analysis engine.

- 30. What is Hadoop? The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. Hadoop is an open-source software framework for storing data and running applications on clusters of commodity hardware. It provides massive storage for any kind of data, enormous processing power and the ability to handle virtually limitless concurrent tasks or jobs.

- 31. Hadoop history: Nutch is a mature, production-ready web crawler that enables fine-grained configuration and relies on Apache Hadoop™ data structures, which are great for batch processing.

- 32. Why is Hadoop important?
  - Ability to store and process huge amounts of any kind of data, quickly. With data volumes and varieties constantly increasing, especially from social media and the Internet of Things (IoT), that's a key consideration.
  - Computing power. Hadoop's distributed computing model processes big data fast. The more computing nodes you use, the more processing power you have.
  - Fault tolerance. Data and application processing are protected against hardware failure. If a node goes down, jobs are automatically redirected to other nodes to make sure the distributed computing does not fail. Multiple copies of all data are stored automatically.

- 33. Why is Hadoop important?
  - Flexibility. Unlike traditional relational databases, you don’t have to preprocess data before storing it. You can store as much data as you want and decide how to use it later. That includes unstructured data like text, images and videos.
  - Low cost. The open-source framework is free and uses commodity hardware to store large quantities of data.
  - Scalability. You can easily grow your system to handle more data simply by adding nodes. Little administration is required.
  - Horizontal scaling means that you scale by adding more machines to your pool of resources. Vertical scaling means that you scale by adding more power (CPU, RAM) to an existing machine.
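
The difference between the two scaling directions can be sketched in a few lines. The `Machine` class, the helper names and the node sizes below are all hypothetical, chosen only to illustrate the definitions on this slide.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Machine:
    cpu_cores: int
    ram_gb: int


def scale_vertically(machine, extra_cores=0, extra_ram_gb=0):
    """Vertical scaling: add more power (CPU, RAM) to an existing machine."""
    return replace(
        machine,
        cpu_cores=machine.cpu_cores + extra_cores,
        ram_gb=machine.ram_gb + extra_ram_gb,
    )


def scale_horizontally(pool, machine, count=1):
    """Horizontal scaling: add more machines to the pool of resources."""
    return pool + [machine] * count


def total_capacity(pool):
    """Return (total cores, total RAM in GB) across the pool."""
    return sum(m.cpu_cores for m in pool), sum(m.ram_gb for m in pool)


commodity = Machine(cpu_cores=8, ram_gb=32)  # hypothetical commodity node
bigger_box = scale_vertically(commodity, extra_cores=8, extra_ram_gb=32)
bigger_pool = scale_horizontally([commodity], commodity, count=3)

print(total_capacity([bigger_box]))  # one larger machine
print(total_capacity(bigger_pool))   # four identical machines
```

Hadoop favors the second approach: capacity grows by adding commodity nodes rather than by buying a larger single machine.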

- 34. How is Hadoop being used? Going beyond its original goal of searching millions (or billions) of web pages and returning relevant results, many organizations are looking to Hadoop as their next big data platform. Popular uses today include:

- 35. How is Hadoop being used?

- 36. How is Hadoop being used?

- 37. Hadoop family: Data Storage, Data Ingestion, Data Processing, Cluster Management.

- 38. Hadoop ecosystem: cluster monitoring, provisioning and management.

- 39. Hadoop | Data Ingestion: Apache Sqoop is a tool designed for efficiently transferring bulk data between Apache Hadoop and structured data stores such as relational databases.

- 40. Hadoop | Data Ingestion: Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. It is robust and fault tolerant with tunable reliability mechanisms and many failover and recovery mechanisms.

- 41. Hadoop | Data Ingestion: Storm is a real-time computation system. Storm makes it easy to reliably process unbounded streams of data, doing for real-time processing what Hadoop did for batch processing. A Storm topology consumes streams of data and processes those streams in arbitrarily complex ways, repartitioning the streams between each stage of the computation however needed.

- 42. Hadoop | Data Ingestion: Apache NiFi is an easy-to-use, powerful, and reliable system to process and distribute data. It supports powerful and scalable directed graphs of data routing, transformation, and system mediation logic in a web-based user interface.

- 43. Hadoop | Data Ingestion: Kafka™ is used for building real-time data pipelines and streaming apps. It is horizontally scalable, fault-tolerant, wicked fast, and runs in production in thousands of companies.

- 44. Hadoop | Data Ingestion:
  - Large-scale log aggregator and analytics.
  - Fluentd is an open-source data collector for a unified logging layer. Fluentd allows you to unify data collection and consumption for a better use and understanding of data.
  - Apache Samza is a distributed stream processing framework. It uses Apache Kafka for messaging, and Apache Hadoop YARN to provide fault tolerance, processor isolation, security, and resource management.

- 45. Hadoop ecosystem: cluster monitoring, provisioning and management.

- 46. Hadoop | Data Storage Layer: Hadoop Distributed File System (HDFS) offers a way to store large files across multiple machines. Hadoop and HDFS were derived from the Google File System (GFS) paper.
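
As a rough illustration of how a large file ends up spread across machines, the sketch below splits a file into fixed-size blocks and places several copies of each block on different nodes. The 128 MB block size and replication factor of 3 match HDFS defaults in Hadoop 2.x and later; the function names, node names and round-robin placement are simplifying assumptions (real HDFS placement is rack-aware).

```python
BLOCK_SIZE_MB = 128  # HDFS default block size in Hadoop 2.x and later
REPLICATION = 3      # HDFS default replication factor


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the size of each block; the last block may be smaller."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    return [block_size_mb] * full_blocks + ([remainder] if remainder else [])


def place_blocks(num_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplification: real HDFS also takes racks and free space into account.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


def readable_after_failure(placement, failed_node):
    """True if every block still has at least one replica on a live node."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )


blocks = split_into_blocks(1000)  # a hypothetical 1000 MB file
nodes = ["node1", "node2", "node3", "node4"]
placement = place_blocks(len(blocks), nodes)

print(len(blocks), "blocks, last block is", blocks[-1], "MB")
print("still readable if node2 fails:", readable_after_failure(placement, "node2"))
```

Because every block lives on several nodes, losing one machine does not lose data, which is the basis of the fault tolerance described earlier in the deck.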

- 47. Hadoop | Data Storage Layer

- 48. Hadoop | Data Storage Layer: HBase is a distributed, column-oriented database. HBase uses HDFS for its underlying storage, and supports both batch-style computations using MapReduce and point queries (random reads). Unlike an RDBMS, it does not support SQL.

- 49. Hadoop | Data Storage Layer: HCatalog is a metadata and table management system for Hadoop. It shares the metadata with other tools like MapReduce, Pig and Hive. It provides one constant data model for all Hadoop tools along with a shared schema.

- 50. Hadoop ecosystem: cluster monitoring, provisioning and management.

- 51. Hadoop | Data Processing Layer: MapReduce is the heart of Hadoop. It is this programming paradigm that allows for massive scalability across hundreds or thousands of servers in a Hadoop cluster with a parallel, distributed algorithm.
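
The MapReduce paradigm can be illustrated with the classic word-count example. The sketch below runs in a single Python process and only mimics the three phases (map, shuffle, reduce); it does not use the Hadoop API, and all function names are hypothetical. On a real cluster the map and reduce calls would run in parallel on different servers.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


counts = word_count(["big data is big", "hadoop stores big data"])
print(counts)
```

Each map call only sees its own document and each reduce call only sees one key, which is what lets the framework distribute the work across many machines.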

- 52. Hadoop | Data Processing Layer

- 53. Hadoop | Data Processing Layer: Pig is a SQL-like scripting language and execution environment for creating complex MapReduce transformations. Functions are written in Pig Latin (the language) and translated into executable MapReduce jobs. Pig also allows the user to create user-defined functions (UDFs) using Java.

- 54. Hadoop | Data Processing Layer: Apache Spark is an in-memory data analytics cluster computing framework originally developed in the AMPLab at UC Berkeley. It runs programs up to 100x faster than Hadoop MapReduce in memory, or 10x faster on disk.

- 55. Hadoop ecosystem: cluster monitoring, provisioning and management.

- 56. Hadoop | Data Querying Layer: Hive is a distributed data warehouse built on top of HDFS to manage and organize large amounts of data. Hive provides a query language based on SQL semantics (HiveQL), which is translated by the runtime engine to MapReduce jobs for querying the data.

- 57. Hadoop | Data Querying Layer: Impala is an open-source massively parallel processing (MPP) SQL query engine for data stored in a computer cluster running Apache Hadoop.

- 58. Hadoop ecosystem: cluster monitoring, provisioning and management.

- 59. Hadoop | Management Layer: Apache Ambari is an intuitive, easy-to-use Hadoop management web UI. It was donated by the Hortonworks team. It is a powerful and pleasant interface for Hadoop and other typical applications from the Hadoop ecosystem.

- 62. Big data existing solutions:
  - YARN: a framework for job scheduling and cluster resource management.
  - Pig: a platform for manipulating data stored in HDFS via a high-level language called Pig Latin. It does data extraction, transformation and loading, and basic analysis in batch mode.
  - Hive: a data warehouse with a SQL-like query language that presents data in the form of tables. Hive programming is similar to database programming.
  - Spark: an open-source cluster computing framework with in-memory analytics.
  - HDFS: a distributed file system that stores large files across multiple machines.
  - HBase: a distributed, column-oriented database. HBase uses HDFS for its underlying storage, and supports both batch-style computations using MapReduce and point queries.
  - HCatalog: a table and storage management layer for Hadoop that enables Hadoop applications (Pig, MapReduce, and Hive) to read and write data in a tabular form as opposed to files.
  - MapReduce: a distributed data processing model and execution environment that runs on large clusters of commodity machines.
  - Mahout: a scalable machine learning and data mining library.
  - ZooKeeper: a high-performance coordination service for distributed applications.
  - Oozie: a Java web application used to schedule Apache Hadoop jobs.
  - Chukwa: a data collection system for monitoring large distributed systems.
  - Ambari: a web-based tool for provisioning, managing, and monitoring Apache Hadoop clusters, and for configuring and testing Hadoop services and components. Ambari also provides a dashboard for viewing cluster health, such as heatmaps, and the ability to view MapReduce, Pig and Hive applications visually, along with features to diagnose their performance.
  - Avro: a data serialization system.

- 63. Other Apache projects:
  - Apache Flink: an open-source platform for distributed stream and batch data processing.
  - Apache Falcon: a feed management and data processing platform.
  - Apache Ranger: a framework to enable, monitor and manage comprehensive data security across the Hadoop platform.
  - Apache Tez: a generic application framework for processing complex data-processing tasks.
  - Apache Tika: a toolkit that detects and extracts metadata and text from over a thousand different file types.
  - Apache Parquet: a columnar storage format available to any project in the Hadoop ecosystem.
  - Apache Zeppelin: a web-based notebook that enables interactive data analytics.
  - Apache Drill: a schema-free SQL query engine for Hadoop, NoSQL and cloud storage.

- 65. Top leading big data companies: The Apache Software Foundation (ASF) is an American non-profit corporation that supports Apache projects.

- 66. How to start:
  1. Identify business use cases tied to business outcomes, metrics and your big data roadmap.
  2. Identify big data champions from both the business and IT sides of your organization.
  3. Select infrastructure, tools and architecture for your big data POC/implementation.
  4. Staff the project with the right big data skills or a strategic big data implementation partner.
  5. Run your project/POC in sprints or short projects with tangible and measurable outcomes.
  6. Scale your successful POC up to test your logic implementation against the full big dataset.

- 67. What can I do now?

- 68. Certification Path | Administration: Cloudera, Hortonworks.

- 69. Certification Path | Development: Cloudera, Hortonworks.

- 70. Certification Path | Data Science: Cloudera, Hortonworks.

- 72. Thanks for your time.

Editor's Notes

- https://datafloq.com/read/3vs-sufficient-describe-big-data/166
  - Velocity: Velocity is the speed at which data is created, stored, analyzed and visualized. In the past, when batch processing was common practice, it was normal to receive an update from the database every night or even every week. Computers and servers required substantial time to process the data and update the databases. In the big data era, data is created in real time or near real time. With the availability of Internet-connected devices, wireless or wired, machines and devices can pass on their data the moment it is created. The speed at which data is created is almost unimaginable: every minute we upload 100 hours of video to YouTube. In addition, every minute over 200 million emails are sent, around 20 million photos are viewed and 30,000 uploaded on Flickr, almost 300,000 tweets are sent and almost 2.5 million queries are performed on Google. The challenge for organizations is to cope with the enormous speed at which data is created and used in real time.
  - Volume: 90% of all data ever created was created in the past 2 years. From now on, the amount of data in the world will double every two years. By 2020, we will have 50 times the amount of data that we had in 2011. The sheer volume of the data is enormous, and a very large contributor to the ever-expanding digital universe is the Internet of Things, with sensors all over the world in all devices creating data every second. The era of a trillion sensors is upon us. Airplanes generate approximately 2.5 billion terabytes of data each year from the sensors installed in their engines. Self-driving cars will generate 2 petabytes of data every year. The agricultural industry also generates massive amounts of data with sensors installed in tractors. Shell uses super-sensitive sensors to find additional oil in wells, and if they install these sensors at all 10,000 wells they will collect approximately 10 exabytes of data annually. That again is nothing compared to the Square Kilometer Array Telescope, which will generate 1 exabyte of data per day. In the past, the creation of so much data would have caused serious problems. Nowadays, with decreasing storage costs, better storage solutions like Hadoop and algorithms that create meaning from all that data, this is not a problem at all.
  - Variety: In the past, all data that was created was structured data; it fitted neatly in columns and rows. Those days are over. Nowadays, 90% of the data generated by organizations is unstructured. Data today comes in many different formats: structured data, semi-structured data, unstructured data and even complex structured data. The wide variety of data requires a different approach as well as different techniques to store all raw data. There are many different types of data, and each type requires different types of analysis or different tools. Social media like Facebook posts or tweets can give different insights, such as sentiment analysis on your brand, while sensor data will give you information about how a product is used and what the mistakes are.
  - The four additional Vs: Now that the context is set regarding the traditional Vs, let’s see which other Vs are important for organizations to keep in mind when they develop a big data strategy.
  - Veracity: Having a lot of data in different volumes coming in at high speed is worthless if that data is incorrect. Incorrect data can cause a lot of problems for organizations as well as for consumers. Therefore, organizations need to ensure that the data is correct and that the analyses performed on the data are correct. Especially in automated decision-making, where no human is involved anymore, you need to be sure that both the data and the analyses are correct. If you want your organization to become information-centric, you should be able to trust the data as well as the analyses.
  - Variability: Big data is extremely variable. Brian Hopkins, a Forrester principal analyst, defines variability as the “variance in meaning, in lexicon”. He refers to the supercomputer Watson, which won Jeopardy. The supercomputer had to “dissect an answer into its meaning and […] to figure out what the right question was”. That is extremely difficult because words have different meanings and it all depends on the context. For the right answer, Watson had to understand the context. Variability is often confused with variety. Say you have a bakery that sells 10 different breads. That is variety. Now imagine you go to that bakery three days in a row and every day you buy the same type of bread, but each day it tastes and smells different. That is variability. Variability is thus very relevant in performing sentiment analyses. Variability means that the meaning is changing (rapidly). In (almost) the same tweets a word can have a totally different meaning. In order to perform a proper sentiment analysis, algorithms need to be able to understand the context and decipher the exact meaning of a word in that context. This is still very difficult.
  - Visualization: This is the hard part of big data: making that vast amount of data comprehensible in a manner that is easy to understand and read. With the right analyses and visualizations, raw data can be put to use; otherwise raw data remains essentially useless. Visualizations of course do not mean ordinary graphs or pie charts. They mean complex graphs that can include many variables of data while still remaining understandable and readable. Visualization might not be the most technologically difficult part, but it is surely the most challenging part. Telling a complex story in a graph is very difficult but also extremely crucial. Luckily, there are more and more big data startups appearing that focus on this aspect, and in the end visualizations will make the difference. In the future this will be the direction to go, where visualizations help organizations answer questions they did not know to ask.
  - Value: All that available data will create a lot of value for organizations, societies and consumers. Big data means big business, and every industry will reap the benefits from big data. McKinsey states that the potential annual value of big data to US health care is $300 billion, more than double the total annual health care spending of Spain. They also mention that big data has a potential annual value of €250 billion to Europe’s public sector administration. Even more, in their well-regarded report from 2011, they state that the potential annual consumer surplus from using personal location data globally can be up to $600 billion in 2020. That is a lot of value. Of course, data in itself is not valuable at all. The value is in the analyses done on that data and in how the data is turned into information and eventually into knowledge. The value is in how organisations will use that data and turn themselves into information-centric companies that rely on insights derived from data analyses for their decision-making.

- http://bridg.com/blog/walmart-big-data/

- One such project was an open-source web search engine called Nutch – the brainchild of Doug Cutting and Mike Cafarella. They wanted to return web search results faster by distributing data and calculations across different computers so multiple tasks could be accomplished simultaneously. During this time, another search engine project called Google was in progress. It was based on the same concept – storing and processing data in a distributed, automated way so that relevant web search results could be returned faster. In 2006, Cutting joined Yahoo and took with him the Nutch project as well as ideas based on Google’s early work with automating distributed data storage and processing. The Nutch project was divided – the web crawler portion remained as Nutch and the distributed computing and processing portion became Hadoop (named after Cutting’s son’s toy elephant). In 2008, Yahoo released Hadoop as an open-source project. Today, Hadoop’s framework and ecosystem of technologies are managed and maintained by the non-profit Apache Software Foundation (ASF), a global community of software developers and contributors.

- http://www.predictiveanalyticstoday.com/data-ingestion-tools/

- http://static.googleusercontent.com/media/research.google.com/en//archive/gfs-sosp2003.pdf

- http://thebigdatablog.weebly.com/blog/the-hadoop-ecosystem-overview

- https://hadoopecosystemtable.github.io/