Assessment_Basics[1]

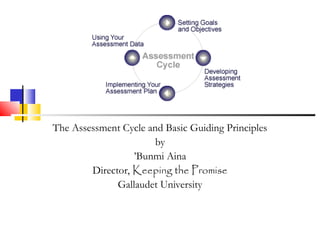

- 1. The Assessment Cycle and Basic Guiding Principles by ’Bunmi Aina, Director, Keeping the Promise, Gallaudet University

- 2. Presentation Objective This presentation will, hopefully, help provide a framework for establishing assessment in (a) your courses and/or (b) services you provide to students

- 3. Definitions

- 4. Assessment – What it is Collection and interpretation of information about what, how much, and how well students are learning. It is part of the instruction model of planning/teaching/assessing and refers to the assignments and tasks that provide information to improve the learning experience of current and future students.

- 5. Assessment – What it is not It is not solely an administrative activity, though University administration may assist you. It is not necessarily testing nor a series of tests, though testing can be a part of assessment. It is not a part of the University's faculty evaluation system. It intrudes neither on the faculty member's classroom nor academic freedom.

- 6. Part 1.

- 7. Nature of Assessment Cycle The Assessment Cycle is iterative, that is, repetitious. The process can be distilled into 4 steps: set goals and objectives for programs and courses; determine how to assess what, how much, and how well students learn; implement the assessment plan; and review the data to make changes during the semester or to determine what, how much, or how well students are learning.

- 8. The Assessment Cycle The George Washington University Office of Academic Planning and Assessment

- 9. Step 1 – Goals and Objectives When setting Learning Goals and Objectives – Goals are broad; objectives are narrow. Goals are general intentions; objectives are precise. Goals are intangible; objectives are tangible. Goals are abstract; objectives are concrete. Goals can't be validated as is; objectives can be validated. Source: http://edweb.sdsu.edu/courses/edtec540/objectives/Difference.html

- 10. Goals and Objectives Example – Goal: Discipline-specific knowledge. Corresponding Objective: distinguish, analyze, criticize, synthesize (evince thinking, understanding, and application of core concepts).

- 11. Step 2 – Assessment Strategies Formative or Direct: occurs throughout the semester and informs teaching, with the goal of improving student learning. Examples: non-graded quizzes, one-minute written summaries, or short free-writes; learning logs; concept maps (see http://classes.aces.uiuc.edu/ACES100/Mind/CMap.html for explanation and examples).

- 12. Assessment Strategies (2) Summative or direct: used to assign grades and to meet accountability demands (such as demonstration of sufficient knowledge in your field to permit progression to the next course in the curriculum). Examples: paper-and-pencil test; performance assessment of products and process; oral exam; portfolios.

- 13. Step 3 – Plan Implementation Formative Assessment: Plan ahead. Focus on a course that you are confident is going well. Identify the class session you will assess and reserve time for the assessment. Make sure students understand the procedures: what you are going to do, why you are asking for the information, and that assessing their learning is meant to help them improve. Let students know what you learned from the assessment exercise, what adjustments or changes you will make in your teaching, and what adjustments they can make in their behavior to help with their learning.

- 14. Plan Implementation (2) Consider what you want students to learn. Select tests and assignments that both teach and test the learning you value most. Construct a course outline that shows the nature and sequence of major tests and assignments. Check that tests and assignments fit your learning goals and are feasible in terms of workload. Collaborate with your students to set and achieve goals. Give students explicit instructions for the assignments.

- 15. Plan Implementation (3) The English Language Institute (ELI) model. In developing exam questions, ELI instructors follow a specific set of instructions based on a checklist developed at Stanford: http://ctl.stanford.edu/teach/handbook/exam.html

- 16. Step 4 – Use of the Data What does the evaluation or assessment information tell you about what and how well students are learning? How will you use the information to improve student learning? What additional information is needed? In what areas do students often have difficulty in your course? (Can you address prior knowledge or content differently, or develop a different assessment tool?)

- 17. Use of the Data (2) The data translate institutional educational goals into practical, measurable objectives. The data can help guide resource allocation, strategic planning, and ideas for modifying course content to maximize student engagement and learning. Bottom line: The data inform a review of goals and objectives, and the cycle begins again.

- 18. Point to Note There must be a clear linkage connecting: University Goal (Mission and Vision); Division (AA or A&F) Goal (Mission); Program (Department) Goals (Mission); Course Goals.

- 19. Part 2

- 20. Basic Principles of Assessment There are 5 basic principles in the assessment process: 1. Clarify the purpose 2. Define what is to be tested 3. Select appropriate test methods 4. Address practical and technical issues of administration and scoring 5. Set standards for performance

- 21. Purpose Clarification Feedback, i.e., for formative purposes. Measuring progress, i.e., to track individual or cohort improvement. Ranking or grading students, i.e., by norm or criterion referencing. Quality control, i.e., to assess students against a standard set internally or externally. Evaluation of teaching or curriculum, i.e., feedback to professors and program coordinators.

- 22. Purpose Clarification (2) Assessments should be designed with a single purpose in mind; otherwise, their effectiveness can be reduced. If more than one purpose exists, separate assessments should be considered for each.

- 23. Define Test Objective What knowledge do you want to assess? Create a rubric: define the range of competencies; build the rubric; decide what weight to give the different cells in your rubric.
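
As a rough illustration of weighting rubric cells, the sketch below combines per-criterion ratings into a single score. The criteria names, the weights, and the 1 to 4 rating scale are all hypothetical choices made for this example, not values from the presentation.

```python
# Hypothetical rubric: criterion -> weight. These names and weights are
# assumptions for illustration only.
RUBRIC_WEIGHTS = {"content": 0.5, "organization": 0.3, "mechanics": 0.2}
MAX_LEVEL = 4  # assumed rating scale: 1 (lowest) to 4 (highest)


def weighted_rubric_score(ratings, weights=RUBRIC_WEIGHTS, max_level=MAX_LEVEL):
    """Return a 0-100 score from per-criterion ratings and their weights."""
    if set(ratings) != set(weights):
        raise ValueError("ratings must cover exactly the rubric criteria")
    total_weight = sum(weights.values())
    # Each rating is scaled to 0-1, multiplied by its weight, then summed.
    weighted = sum(weights[c] * ratings[c] / max_level for c in weights)
    return 100 * weighted / total_weight


score = weighted_rubric_score({"content": 4, "organization": 3, "mechanics": 2})
print(round(score, 1))  # prints 82.5
```

Dividing by the total weight means the weights do not have to sum to exactly 1.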

- 24. Select Appropriate Methods Let the purpose drive the choice! There is a range of assessment methods: multiple choice (MC), short answer, essay questions, projects, reports, portfolios, log books, etc.

- 25. Practical and Technical Integrity 1. Ensure reliability: inter-rater comparison; reproducibility (depends on sample size).
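
One common way to carry out an inter-rater comparison is Cohen's kappa, which corrects the raw agreement between two raters for the agreement expected by chance. The presentation does not name a specific statistic, so kappa is an assumption here, and the ratings below are made-up sample data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who scored the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same category by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: from each rater's own category frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


# Made-up ratings of eight student papers by two hypothetical raters.
rater_1 = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass"]
rater_2 = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass"]
print(round(cohens_kappa(rater_1, rater_2), 3))  # prints 0.467
```

A kappa of 1 means perfect agreement, while a value near 0 means the raters agree no more often than chance would predict.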

- 26. Practical and Technical Integrity (2) 2. Ensure validity: make the method of scoring performance as accurate as possible, removing any marker bias. Rating forms; checklists; multiple answer options.

- 27. Practical and Technical Integrity (3) Scoring: consider rubrics; methods of combining elements of an exam to produce a score; scoring keys.

- 28. Setting Performance Standards Determine the type of standard: relative (norm referenced) or absolute (criterion referenced). Choose a standard setting method. See http://www.cde.state.co.us/cdeassess/documents/c for ideas on standard setting methods.
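
The difference between the two types of standard can be sketched as follows. Under an absolute (criterion-referenced) standard, a student passes by reaching a fixed score. Under a relative (norm-referenced) standard, a student passes by ranking high enough within the group. The scores, the cut of 70, and the "top 25%" rule are hypothetical, and ties are not handled specially in this simple version.

```python
def criterion_referenced(scores, cut):
    """Absolute standard: pass if the score meets a fixed cut."""
    return {name: s >= cut for name, s in scores.items()}


def norm_referenced(scores, top_fraction):
    """Relative standard: pass if ranked within the top fraction of the group."""
    n_pass = max(1, round(len(scores) * top_fraction))
    ranked = sorted(scores, key=scores.get, reverse=True)
    passing = set(ranked[:n_pass])
    return {name: name in passing for name in scores}


# Made-up scores for four hypothetical students.
scores = {"A": 92, "B": 78, "C": 65, "D": 58}
print(criterion_referenced(scores, cut=70))
print(norm_referenced(scores, top_fraction=0.25))
```

With these sample values, students A and B pass under the absolute standard, while only A passes under the relative one.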

- 29. Setting Performance Standards (2) Setting standards involves consideration of content standards, performance levels, the test, and expectations for students. Setting standards is simply determining cut scores that correspond to performance levels. The cut scores that are determined during the standard setting procedure demarcate one performance level from another.
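
To show how cut scores demarcate performance levels, here is a minimal sketch that maps a score to a level. The cut scores and level names are hypothetical placeholders. Real values would come out of a standard setting procedure.

```python
from bisect import bisect_right

# Hypothetical cut scores and level names, for illustration only.
CUT_SCORES = [50, 70, 85]  # lowest score needed to enter each higher level
LEVELS = ["Unsatisfactory", "Partially Proficient", "Proficient", "Advanced"]


def performance_level(score, cut_scores=CUT_SCORES, levels=LEVELS):
    """Return the performance level whose score range contains `score`."""
    # bisect_right counts how many cut scores are at or below the score,
    # which is the index of the matching level.
    return levels[bisect_right(cut_scores, score)]


for s in (49, 50, 70, 84, 85):
    print(s, performance_level(s))
```

A score exactly equal to a cut score falls into the higher level, which is one possible convention.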

- 30. That’s all, Folks! Thank You!

- 31. Credits: The George Washington University Office of Academic Planning and Assessment; School of Medical Education, University of Sheffield; College of Education, San Diego State University; College of Agricultural, Consumer and Environmental Science at the University of Illinois at Urbana-Champaign; Stanford University School of Education; Colorado Department of Education