RELATIONSHIP BETWEEN VARIABLES EXPERIMENTS

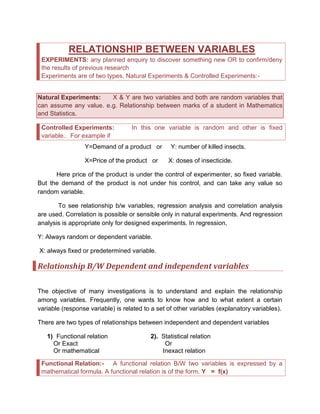

- 1. RELATIONSHIP BETWEEN VARIABLES. EXPERIMENTS: An experiment is any planned enquiry to discover something new or to confirm or deny the results of previous research. Experiments are of two types: natural experiments and controlled experiments.

  Natural experiments: X and Y are both random variables that can assume any value, e.g. the relationship between a student's marks in Mathematics and in Statistics.

  Controlled experiments: one variable is random and the other is fixed. For example, Y = demand for a product and X = price of the product, or Y = number of insects killed and X = dose of insecticide. The price is under the control of the experimenter, so it is a fixed variable. The demand is not under his control and can take any value, so it is a random variable.

  Regression analysis and correlation analysis are used to study the relationship between variables. Correlation is sensible only in natural experiments, and regression analysis is appropriate only for designed experiments. In regression, Y is always the random (dependent) variable and X is always the fixed (predetermined) variable.

  Relationship between dependent and independent variables: The objective of many investigations is to understand and explain the relationship among variables. Frequently, one wants to know how and to what extent a certain variable (the response variable) is related to a set of other variables (the explanatory variables). There are two types of relationship between independent and dependent variables:

  1) Functional (exact, or mathematical) relation
  2) Statistical (inexact) relation

  Functional relation: A functional relation between two variables is expressed by a mathematical formula of the form $Y = f(X)$.

- 2. In a functional relation, for every value of X there exists a unique value of Y. For example, the sales (Y) of a product sold at a fixed price and the number of units sold (X) have a functional relation. If the price is 2, the relation can be expressed by the equation $Y = 2X$:

  | X | 2 | 3 | 4 |
  |---|---|---|---|
  | Y | 4 | 6 | 8 |

  [Plot: Y = 2X, all points fall exactly on the line]

  Other examples:

  a) Area of a circle: $A = \pi r^2$
  b) Newton's law of gravity: $F = k\,\dfrac{m_1 m_2}{r^2}$
  c) The relationship between dollar sales (Y) of a product sold at a fixed price and the number of units sold.
  d) The relation between the Centigrade and Fahrenheit scales: $F = 32 + \tfrac{9}{5}C$

  Statistical relation: A statistical relation, unlike a functional relation, is not a perfect one. For one value of X there exists a whole population of Y values, and we draw the best possible line to describe the relation between X and Y. For example, if X is the age of individuals and Y is their weight, there exists a statistical relation between them:

  | X | Y |
  |---|---|
  | 30 | 45, 50, 61 |
  | 40 | 48, 54, 70, 75 |
  | 50 | 58, 65, 69 |

  [Plot: Y against X, points scattered around a line]

- 3. Regression Analysis: Regression analysis involves measuring the statistical relationship between variables. The word regression is used for investigating the dependence of one variable, called the dependent variable (Y), on one or more other variables, called independent variables (X). Regression analysis provides an equation for predicting or estimating the average value of the dependent variable from known values of the independent variable. Regression analysis is generally classified into two kinds:

  1. Linear regression (simple linear regression, multiple linear regression)
  2. Curvilinear regression

  Linear: The regression model is linear if the parameters enter the model linearly, that is, no parameter appears as an exponent or is multiplied or divided by another parameter. Otherwise the model is non-linear. For example, $Y = \alpha + \beta_1 X_1 + \beta_2 X_2 + \varepsilon$, where $\alpha$, $\beta_1$ and $\beta_2$ are parameters, is a linear model, but $Y = \alpha X^{\beta} + \varepsilon$ and $Y = \alpha \beta^{X} + \varepsilon$ are non-linear.

  Non-linear model: A non-linear model that can be converted into a linear model by an appropriate transformation is called intrinsically linear. One that cannot be so transformed is called intrinsically non-linear. Taking logs of the systematic part (the result is exact when the error is multiplicative rather than additive):

  $Y = \alpha X^{\beta}$ gives $\log Y = \log \alpha + \beta \log X$

  $Y = \alpha \beta^{X}$ gives $\log Y = \log \alpha + X \log \beta$

  Regressor: The variable that forms the basis of estimation or prediction is called the regressor. It is also called the independent, explanatory, controlled or predictor variable, and is usually denoted by X.
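The log transformation above can be illustrated with a minimal sketch. The data below are hypothetical and noise-free, generated from $Y = 2X^{1.5}$ purely for illustration, and `fit_line` is a helper name introduced here, not something from the slides.

```python
import math

def fit_line(x, y):
    """Least-squares intercept and slope for y = a + b*x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

# Hypothetical noise-free data from the power model Y = alpha * X**beta
x = [1.0, 2.0, 4.0, 8.0]
y = [2.0 * xi ** 1.5 for xi in x]

# Fit a straight line to (log X, log Y): intercept = log(alpha), slope = beta
log_alpha, beta = fit_line([math.log(v) for v in x], [math.log(v) for v in y])
alpha = math.exp(log_alpha)
print(alpha, beta)
```

Because the data carry no error, the straight-line fit on the log scale recovers the two parameters of the power model up to floating-point rounding. With real data the fit minimises squared error in log Y, not in Y.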

- 4. Regressand: The variable whose values depend on the known values of the independent variable is called the regressand. It is also called the response, dependent or random variable, and is usually denoted by Y.

  Scatter diagram: To investigate the relationship between two variables, first draw a scatter diagram, with the independent variable on the X-axis and the dependent variable on the Y-axis. If the points lie close to a straight line, we say that a linear relationship exists between the two variables (Figures 1 and 2). On the other hand, if the points appear to lie along a curve, a curvilinear relationship is said to exist (Figures 3 and 4). A wide dispersion of points with no visible trend implies no relationship (Figure 5).

  [Figure 1 and Figure 2: scatter plots of Y against X]

- 5. [Figure 3, Figure 4 and Figure 5: scatter plots of Y against X]

  Simple Linear Regression: In simple regression, the dependence of the response variable (Y) on only one regressor (X) is investigated. If the relationship between these variables can be described by a straight line, it is termed simple linear regression. After using a scatter diagram to illustrate the relationship between the independent and dependent variables, the next step is to specify the mathematical formulation of the linear regression model, which provides the basis for statistical analysis. The population simple linear regression model is defined as:

  $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$ (population regression model)

  $\mu_{Y|X} = \beta_0 + \beta_1 X$ (population regression line)

  where $\beta_0$ and $\beta_1$ are the population regression coefficients and $\varepsilon_i$ is a random error peculiar to the i-th observation. Thus each response is expressed as the sum of a value predicted from the corresponding X plus a random error. The sample regression equation is an estimate of the population regression equation and, like any other estimate, has an uncertainty associated with it.

- 6. $\hat{Y} = b_0 + b_1 X$ (sample regression line), where $b_0$ is the Y-intercept and $b_1$ is the slope of the regression line. $b_0$ and $b_1$ are also called regression coefficients. X is the independent variable and Y is the dependent variable. This model is said to be simple (because there is only one independent variable), linear in the parameters, and linear in the independent variable (X appears in the first power, not as $X^2$ or $X^3$).

  Least squares principle, estimates in SLR: The simple linear regression equation is also called the least squares regression equation. Its name tells us the criterion used to select the best fitting line: the least squares regression line is the line for which the sum of squared residuals is a minimum. The least squares estimates of the regression coefficients are

  $$b_1 = \frac{S(XY)}{S(XX)}, \qquad b_0 = \bar{Y} - b_1 \bar{X}$$

  Interpretation of $b_0$ and $b_1$:

  $b_0$ is the average value of Y when X = 0. If the range of X does not contain zero, $b_0$ has no practical interpretation.

  $b_1$ indicates the average change in Y (increase or decrease) for a unit increase in X. For example, if $\hat{Y} = 20 - 0.27X$, with Y the temperature in degrees centigrade and X the rainfall, then a one-unit increase in rainfall is associated with an average decrease of 0.27 °C in temperature.

- 7. Example: The following data are the rates of oxygen consumption of birds, measured at different environmental temperatures:

  | Temperature °C (X) | Oxygen consumption ml/g/hr (Y) | $XY$ | $X^2$ | $Y^2$ | $e = Y - \hat{Y}$ | $\hat{Y}$ | $e^2$ |
  |---|---|---|---|---|---|---|---|
  | -18 | 5.2 | -93.6 | 324 | 27.04 | 0.148 | 5.05 | 0.022 |
  | -15 | 4.7 | -70.5 | 225 | 22.09 | -0.087 | 4.78 | 0.007 |
  | -10 | 4.5 | -45.0 | 100 | 20.25 | 0.151 | 4.34 | 0.022 |
  | -5 | 3.6 | -18.0 | 25 | 12.96 | -0.310 | 3.91 | 0.096 |
  | 0 | 3.4 | 0.0 | 0 | 11.56 | -0.071 | 3.47 | 0.005 |
  | 5 | 3.1 | 15.5 | 25 | 9.61 | 0.067 | 3.03 | 0.004 |
  | 10 | 2.7 | 27.0 | 100 | 7.29 | 0.106 | 2.59 | 0.011 |
  | 19 | 1.8 | 34.2 | 361 | 3.24 | -0.004 | 1.80 | 0.000 |
  | Total: -14 | 29 | -150.4 | 1160 | 114.04 | | | 0.17 |

  (i):- Draw a scatter plot of the data.
  (ii):- Fit a simple linear regression and interpret the parameters. Also draw the fitted regression line on the scatter plot.
  (iii):- Find the standard error of estimate, SE(b0) and SE(b1).
  (iv):- Test the hypothesis that β0 = 3.5.
  (v):- Test the hypothesis that there is no linear relation between Y and X, i.e. β1 = 0.
  (vi):- Construct 95% C.I. for the regression parameters.
  (vii):- Perform the analysis of variance. Calculate and interpret the coefficient of determination.
  (viii):- Test the hypothesis that the mean oxygen consumption at 6 °C in the population is 2.9 ml/g/hr. Also find a 95% C.I. for the mean value of Y when X = 6.

- 8. (ix):- Test the hypothesis that the oxygen consumption of a single bird at 6 °C in the population is 3 ml/g/hr. Also find a 95% C.I. for a single value of Y when X = 6.
  (x):- Test the goodness of fit of the regression model by a residual plot.

  Solution:

  (i):- Draw a scatter plot of the data.

  [Scatter plot of Y (oxygen consumption) against X (temperature)]

  (ii):- Fit a simple linear regression and interpret the parameters. Also draw the fitted regression line on the scatter plot.

  $\bar{X} = -1.75, \qquad \bar{Y} = 3.625$

  $S_{XY} = \sum (X - \bar{X})(Y - \bar{Y}) = \sum XY - \dfrac{(\sum X)(\sum Y)}{n} = -99.65$

  $S_{XX} = \sum (X - \bar{X})^2 = \sum X^2 - \dfrac{(\sum X)^2}{n} = 1135.5$

  $S_{YY} = \sum (Y - \bar{Y})^2 = \sum Y^2 - \dfrac{(\sum Y)^2}{n} = 8.915$

  $b_1 = \dfrac{S_{XY}}{S_{XX}} = -0.0878$ ml/g/hr per °C

  $b_0 = \bar{Y} - b_1 \bar{X} = 3.47$ ml/g/hr
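The arithmetic in part (ii) can be checked with a short sketch in plain Python. The variable names are chosen here for illustration, and the data are the eight (X, Y) pairs from the table in the example.

```python
# Bird data from the example: temperature (deg C) and oxygen consumption (ml/g/hr)
x = [-18, -15, -10, -5, 0, 5, 10, 19]
y = [5.2, 4.7, 4.5, 3.6, 3.4, 3.1, 2.7, 1.8]
n = len(x)

sum_x, sum_y = sum(x), sum(y)

# Corrected sums of squares and products (shortcut formulas)
sxy = sum(a * b for a, b in zip(x, y)) - sum_x * sum_y / n
sxx = sum(a * a for a in x) - sum_x ** 2 / n
syy = sum(b * b for b in y) - sum_y ** 2 / n

# Least-squares slope and intercept
b1 = sxy / sxx
b0 = sum_y / n - b1 * sum_x / n

print(round(sxy, 2), round(sxx, 1), round(syy, 3))
print(round(b1, 4), round(b0, 2))
```

The shortcut formulas are used because they match the column totals given in the data table.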

- 9. So the estimated simple linear regression equation is $\hat{Y} = 3.47 - 0.0878X$.

  Interpretation of the estimated regression parameters:

  - The value $b_1 = -0.0878$ indicates that the average oxygen consumption is expected to decrease by 0.0878 ml/g/hr for each one-degree-centigrade increase in temperature.

    NOTE: The observed range of temperature (the explanatory variable) in the experiment was -18 to 19 °C (the scope of the model). It would therefore be an unreasonable extrapolation to expect this rate of decrease in oxygen consumption to continue if the temperature were to rise beyond this range. It is safe to use the results of a regression only within the range of the observed values of the independent variable.

  - The value $b_0 = 3.47$ is the average oxygen consumption when the temperature is 0 °C. In this example the scope of the model covers X = 0, so $b_0$ has this interpretation.

  NOTE: Interpolation and extrapolation. Interpolation is making a prediction within the range of values of the predictor in the sample used to generate the model. Interpolation is generally safe. Extrapolation is making a prediction outside that range. The further the prediction is from the range of values used to fit the model, the riskier it becomes, because there is no way to check that the relationship continues to be linear. For example, in a regression of strength on lean body mass, an individual with 9 kg of lean body mass would be expected to have a strength of -4.9 units. This is absurd, but it does not invalidate the model, because the model was based on lean body masses in the range 27 to 71 kg.

  (iii):- Find the standard error of estimate, SE(b0) and SE(b1).

  Standard error of estimate: The observed values of (X, Y) do not all fall on the regression line but scatter away from it. The degree of scatter of the observed values about the regression line is measured by the standard deviation of regression, or standard error of estimate, denoted by $\sigma_e$. Its estimate is $S_e$.

- 10. $$S_e^2 = \frac{\sum (Y - \hat{Y})^2}{n-2} = \frac{\sum Y^2 - b_0 \sum Y - b_1 \sum XY}{n-2} = \frac{0.1698}{6} = 0.0283, \qquad S_e = 0.168$$

  The usual approach to fitting a regression model:

  1. Make a scatter plot of the data to get an impression of the relationship.
  2. Formulate a simple model based on the scatter plot.
  3. Assess how well the model fits the data by using formal tests and residual plots.
  4. If it fits well, use it. If not, try a suitable transformation or some other model.

  Inference in Simple Linear Regression (from samples to population): Generally, more is sought in regression analysis than a description of the observed data. One usually wishes to draw inferences about the relationship of the variables in the population from which the sample was taken. To draw inferences about population values based on sample results, the following assumptions are needed:

  - Linearity
  - Equal variances of the errors
  - Independence of the errors
  - Normality of the errors

  The slope and the intercept estimated from a single sample typically differ from the population values and vary from sample to sample. To use these estimates for inference about the population values, the sampling distributions of the two statistics are needed. When the assumptions of the linear regression model are met, the sampling distributions of $b_0$ and $b_1$ are normal with means $\beta_0$ and $\beta_1$ and standard errors

  $$SE(b_1) = \frac{S_e}{\sqrt{S_{XX}}}, \qquad SE(b_0) = S_e \sqrt{\frac{1}{n} + \frac{\bar{X}^2}{S_{XX}}}$$

- 11. $$SE(b_1) = \frac{S_e}{\sqrt{S_{XX}}} = \frac{0.168}{\sqrt{1135.5}} = 0.005$$

  $$SE(b_0) = S_e \sqrt{\frac{1}{n} + \frac{\bar{X}^2}{S_{XX}}} = 0.168 \sqrt{\frac{1}{8} + \frac{(-1.75)^2}{1135.5}} = 0.06$$

  Confidence intervals for regression parameters: A statistic calculated from a sample provides a point estimate of the unknown parameter. A point estimate can be thought of as the single best guess for the population value. While the estimated value from the sample is typically different from the value of the unknown population parameter, the hope is that it is not too far away. Based on the sample estimates, it is possible to calculate a range of values that, with a designated likelihood, includes the population value. Such a range is called a confidence interval.

  NOTE: A 90% C.I. can be interpreted as follows. If we take 100 samples of the same size under the same conditions and compute a C.I. for the parameter from each sample, then about 90 of these 100 intervals will contain the parameter (i.e. not all of the constructed intervals).

  A confidence interval estimate of a parameter is more informative than a point estimate because it reflects the precision of the estimate. The width of the C.I. (upper limit minus lower limit) is called the precision of the estimate. The precision can be increased either by decreasing the confidence level or by increasing the sample size.

  (iv):- Test the hypothesis that β0 = 3.5.

  Test of hypothesis for $\beta_0$:

  1) Construction of hypotheses: $H_0: \beta_0 = 3.5$, $H_1: \beta_0 \ne 3.5$
  2) Level of significance: $\alpha = 5\%$
  3) Test statistic: $t = \dfrac{b_0 - \beta_0}{SE(b_0)} = \dfrac{3.47 - 3.5}{0.06} = -0.5$
  4) Decision rule: Reject $H_0$ if $|t_{cal}| \ge t_{\alpha/2}(n-2) = t_{0.025}(6) = 2.447$
  5) Result: $|t_{cal}| = 0.5$ does not reach 2.447, so do not reject $H_0$.
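The standard errors and the test of β0 = 3.5 can be reproduced from the summary quantities on the slides. This is a minimal sketch: the critical value 2.447 is copied from the slide, not computed, and the variable names are illustrative.

```python
import math

# Summary quantities from the bird example
n = 8
x_bar, y_bar = -1.75, 3.625
sxx, sxy, syy = 1135.5, -99.65, 8.915

b1 = sxy / sxx
b0 = y_bar - b1 * x_bar

# Residual sum of squares and standard error of estimate
sse = syy - b1 * sxy
se = math.sqrt(sse / (n - 2))

# Standard errors of the slope and intercept
se_b1 = se / math.sqrt(sxx)
se_b0 = se * math.sqrt(1 / n + x_bar ** 2 / sxx)

# t test of H0: beta0 = 3.5 against a two-sided alternative
t_b0 = (b0 - 3.5) / se_b0
t_crit = 2.447  # t_{0.025}(6), taken from the slide
reject_h0 = abs(t_b0) >= t_crit

print(round(se, 3), round(se_b1, 3), round(se_b0, 2), round(t_b0, 2), reject_h0)
```

With unrounded intermediate values the statistic is about -0.48 rather than the slide's -0.5, which uses the rounded 3.47 and 0.06. The decision is the same either way.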

- 12. (vi):- Construct 95% C.I. for the regression parameters.

  95% C.I. for $\beta_0$:

  $b_0 \pm t_{\alpha/2}(n-2)\,SE(b_0) = 3.47 \pm t_{0.025}(6)(0.06) = 3.47 \pm (2.447)(0.06)$, i.e. (3.32, 3.62)

  (v):- Test the hypothesis that there is no linear relation between Y and X, i.e. β1 = 0.

  Test of hypothesis for $\beta_1$:

  1) Construction of hypotheses: $H_0: \beta_1 = 0$, $H_1: \beta_1 \ne 0$
  2) Level of significance: $\alpha = 5\%$
  3) Test statistic: $t = \dfrac{b_1 - \beta_1}{SE(b_1)} = \dfrac{-0.0878 - 0}{0.005} = -17.56$
  4) Decision rule: Reject $H_0$ if $|t_{cal}| \ge t_{\alpha/2}(n-2) = t_{0.025}(6) = 2.447$
  5) Result: $|t_{cal}| = 17.56$ exceeds 2.447, so reject $H_0$ and conclude that there is a significant relationship between temperature and oxygen consumption.

  (vi), continued:- 95% C.I. for $\beta_1$: $b_1 \pm t_{\alpha/2}(n-2)\,SE(b_1)$

- 13. 95% C.I. for $\beta_1$ (continued): $-0.0878 \pm t_{0.025}(6)(0.005) = -0.0878 \pm (2.447)(0.005)$, i.e. (-0.100, -0.076)

  (vii):- Perform the analysis of variance. Calculate and interpret the coefficient of determination.

  ANALYSIS OF VARIANCE IN SIMPLE LINEAR REGRESSION

  The analysis of variance table is also known as the ANOVA table (for ANalysis Of VAriance). It tells the story of how the regression equation accounts for variability in the response variable. The column labelled Source has three rows: Regression, Residual and Total. The column labelled Sum of Squares describes the variability in the response variable, Y.

  Partition of the variation in the dependent variable into explained and unexplained variation:

  Total variation = Explained variation (variation due to X, also called variation due to regression) + Unexplained variation (variation due to unknown factors)

  Total variation: First, the overall variability of the dependent variable is calculated by computing the sum of squares of the deviations of the Y-values from $\bar{Y}$, a quantity termed the total sum of squares: $S(YY) = 8.915$.

  Explained variation (variation in Y due to X, also called variation due to regression): $b_1\,S(XY) = (-0.0878)(-99.65) = 8.7452$

  Unexplained variation: Total variation - Explained variation = 8.915 - 8.7452 = 0.1698

  Associated with any sum of squares is its degrees of freedom (the number of independent observations). The total SS has n - 1 d.f. because it loses 1 d.f. in computing the sample mean $\bar{Y}$. The regression SS has k - 1 d.f. (here 1, because there is only one independent variable), and the residual SS has n - k d.f., where k is the number of parameters in the model.

  The hypothesis $\beta_1 = 0$ may be tested by the analysis of variance procedure.

  ANOVA TABLE

- 14. | Source of variation (S.O.V.) | Degrees of freedom (DF) | Sum of squares (SS) | Mean sum of squares (MSS = SS/df) | F cal | F tab |
  |---|---|---|---|---|---|
  | Regression | k - 1 = 1 | 8.7452 | 8.7452 | 308.93* | $F_{0.05}(1,6) = 5.99$ |
  | Error | n - k = 8 - 2 = 6 | 0.1698 | 0.0283 | | |
  | Total | n - 1 = 8 - 1 = 7 | 8.915 | | | |

  S = 0.1682, R-Sq = 98.1%, R-Sq(adj) = 97.8%

  Relation between F and t for testing $\beta_1 = 0$: $F = t^2$, here $308.93 \approx (-17.56)^2$ (the small difference is due to rounding).

  Goodness of fit: An important part of any statistical procedure that builds models from data is establishing how well the model actually fits. This topic covers detecting possible violations of the required assumptions in the data being analysed and checking how close the observed data points are to the fitted line.

  A commonly used measure of the goodness of fit of a linear model is $R^2$, the coefficient of determination, which is the squared multiple correlation coefficient. $R^2$ is the regression sum of squares divided by the total sum of squares. It is the fraction of the variability in the response that is accounted for by the model. Some call $R^2$ the proportion of the variance explained by the model. If a model has perfect predictability, the residual sum of squares is 0 and $R^2 = 1$. If a model has no predictive capability, $R^2 = 0$.

  $$R^2 = \frac{\text{Reg. SS}}{\text{Total SS}} \times 100 = \frac{8.7452}{8.915} \times 100 = 98.1\%$$
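The ANOVA quantities above follow directly from the three corrected sums. The sketch below recomputes them and checks the identity F = t² numerically. The variable names are illustrative.

```python
import math

# Corrected sums from the bird example; k = number of parameters (b0 and b1)
n, k = 8, 2
sxy, sxx, syy = -99.65, 1135.5, 8.915

b1 = sxy / sxx

# Partition of the total sum of squares
reg_ss = b1 * sxy          # explained by the regression
res_ss = syy - reg_ss      # unexplained (error)

ms_reg = reg_ss / (k - 1)
ms_res = res_ss / (n - k)
f_cal = ms_reg / ms_res

# Coefficient of determination and its adjusted version
r2 = reg_ss / syy
r2_adj = 1 - ms_res / (syy / (n - 1))

# t statistic for the slope; its square equals F in simple linear regression
t_b1 = b1 / (math.sqrt(ms_res) / math.sqrt(sxx))

print(round(reg_ss, 4), round(res_ss, 4), round(f_cal, 2))
print(round(100 * r2, 1), round(100 * r2_adj, 1), round(t_b1 ** 2, 2))
```

Working with unrounded values makes the two statistics agree exactly, which is why the slide's rounded -17.56 only approximately squares to 308.93.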

- 15. The value of $R^2$ indicates that about 98% of the variation in the dependent variable is explained by the linear relationship with X. The remainder is due to other unknown factors.

  (x):- Test the goodness of fit of the regression model by a residual plot.

  Residual plot: The estimated residuals $e_i$ are defined as the differences between the observed and fitted values of $Y_i$, i.e. $e = Y - \hat{Y}$. The plot of the $e_i$ against the corresponding fitted values $\hat{Y}_i$ provides useful information about the appropriateness of the model. If the plot of the residuals against the $\hat{Y}_i$ is a random scatter and does not show any systematic pattern, we conclude that the model is appropriate.

  NOTE: If some residuals have very large values, this may indicate the presence of outliers (values that are not consistent with the rest of the data).

  (viii):- Test the hypothesis that the mean oxygen consumption at 6 °C in the population is 2.9 ml/g/hr. Also find a 95% C.I. for the mean value of Y when X = 6.

  Prediction in Simple Linear Regression

  Test of hypothesis for the mean value of Y, i.e. $\mu_{Y|X}$:

  $\hat{Y}_6 = 3.47 - 0.0878(6) = 2.943$

  $$SE(\hat{Y}_6) = S_e \sqrt{\frac{1}{n} + \frac{(X_0 - \bar{X})^2}{S_{XX}}} = 0.168 \sqrt{\frac{1}{8} + \frac{(6 + 1.75)^2}{1135.5}} = 0.071$$

  1) Construction of hypotheses: $H_0: \mu_{Y|6} = 2.9$, $H_1: \mu_{Y|6} \ne 2.9$
  2) Level of significance: $\alpha = 5\%$

- 16. 3) Test statistic: $t = \dfrac{\hat{Y}_6 - \mu_{Y|6}}{SE(\hat{Y}_6)} = \dfrac{2.943 - 2.9}{0.071} = 0.605$
  4) Decision rule: Reject $H_0$ if $|t_{cal}| \ge t_{\alpha/2}(n-2) = t_{0.025}(6) = 2.447$
  5) Result: $|t_{cal}| = 0.605$ does not reach 2.447, so do not reject $H_0$.

  Confidence interval for the mean value of Y, i.e. $\mu_{Y|X}$:

  $\hat{Y}_6 \pm t_{\alpha/2}(n-2)\,SE(\hat{Y}_6) = 2.943 \pm (2.447)(0.071)$, i.e. (2.77, 3.116)

  (ix):- Test the hypothesis that the oxygen consumption of a single bird at 6 °C in the population is 3 ml/g/hr. Also find a 95% C.I. for a single value of Y when X = 6.

  Test of hypothesis for a single value of Y:

  $\hat{Y}_6 = 3.47 - 0.0878(6) = 2.943$

  $$SE(\hat{Y}_6) = S_e \sqrt{1 + \frac{1}{n} + \frac{(X_0 - \bar{X})^2}{S_{XX}}} = 0.168 \sqrt{1 + \frac{1}{8} + \frac{(6 + 1.75)^2}{1135.5}} = 0.18$$

  1) Construction of hypotheses: $H_0: Y_6 = 3$, $H_1: Y_6 \ne 3$
  2) Level of significance: $\alpha = 5\%$
  3) Test statistic: $t = \dfrac{\hat{Y}_6 - Y_6}{SE(\hat{Y}_6)} = \dfrac{2.943 - 3}{0.18} = -0.32$
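The two standard errors at X = 6 differ only by the extra 1 under the square root, which accounts for the variability of an individual bird around the mean. A minimal sketch using the rounded values from the slides (the critical value 2.447 is copied from the slide, and the names are illustrative):

```python
import math

# Rounded values from the slides
n = 8
x_bar, sxx = -1.75, 1135.5
se = 0.168
b0, b1 = 3.47, -0.0878
t_crit = 2.447  # t_{0.025}(6), taken from the slide

x0 = 6
y_hat = b0 + b1 * x0

# Standard error for the MEAN response at x0
leverage = 1 / n + (x0 - x_bar) ** 2 / sxx
se_mean = se * math.sqrt(leverage)

# Standard error for a SINGLE new observation at x0
se_single = se * math.sqrt(1 + leverage)

ci_mean = (y_hat - t_crit * se_mean, y_hat + t_crit * se_mean)
ci_single = (y_hat - t_crit * se_single, y_hat + t_crit * se_single)

print(round(y_hat, 3), round(se_mean, 3), round(se_single, 2))
print([round(v, 2) for v in ci_mean], [round(v, 2) for v in ci_single])
```

The interval for a single observation is always wider than the interval for the mean at the same X. The last digit of the limits can differ slightly from the slides, which round the standard errors before multiplying.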

- 17. 4) Decision rule: Reject $H_0$ if $|t_{cal}| \ge t_{\alpha/2}(n-2) = t_{0.025}(6) = 2.447$
  5) Result: $|t_{cal}| = 0.32$ does not reach 2.447, so do not reject $H_0$.

  Confidence interval for a single value of Y:

  $\hat{Y}_6 \pm t_{\alpha/2}(n-2)\,SE(\hat{Y}_6) = 2.943 \pm (2.447)(0.18)$, i.e. (2.50, 3.38)

  Multiple Linear Regression (Continued)

  EXAMPLE: The following data represent the performance of a chemical process as a function of several controllable process variables:

  | CO2 product (Y) | Solvent total ($X_1$) | Hydrogen consumption ($X_2$) | $Y^2$ | $X_1^2$ | $X_2^2$ | $X_1Y$ | $X_2Y$ | $X_1X_2$ |
  |---|---|---|---|---|---|---|---|---|
  | 36.98 | 2227.25 | 2.06 | 1367.52 | 4960643 | 4.2436 | 82364 | 76.179 | 4588.1 |
  | 13.74 | 434.90 | 1.33 | 188.79 | 189138 | 1.7689 | 5976 | 18.274 | 578.4 |
  | 10.08 | 481.19 | 0.97 | 101.61 | 231544 | 0.9409 | 4850 | 9.778 | 466.8 |
  | 8.53 | 247.14 | 0.62 | 72.76 | 61078 | 0.3844 | 2108 | 5.289 | 153.2 |
  | 36.42 | 1645.89 | 0.22 | 1326.42 | 2708954 | 0.0484 | 59943 | 8.012 | 362.1 |
  | 26.59 | 907.59 | 0.76 | 707.03 | 823720 | 0.5776 | 24133 | 20.208 | 689.8 |
  | 19.07 | 608.05 | 1.71 | 363.66 | 369725 | 2.9241 | 11596 | 32.610 | 1039.8 |
  | 5.96 | 380.55 | 3.93 | 35.52 | 144818 | 15.4449 | 2268 | 23.423 | 1495.6 |
  | 15.52 | 213.40 | 1.97 | 240.87 | 45540 | 3.8809 | 3312 | 30.574 | 420.4 |
  | 56.61 | 2043.36 | 5.08 | 3204.69 | 4175320 | 25.8064 | 115675 | 287.579 | 10380.3 |
  | Total: 229.50 | 9189.32 | 18.65 | 7608.87 | 13710479 | 56.0201 | 312224 | 511.926 | 20174.4 |

  1. Fit a simple linear regression relating CO2 product to total solvent and calculate the value of $R^2$. (Assignment)

- 18. 2. Fit a multiple linear regression relating CO2 product to total solvent and hydrogen consumption, calculate the value of $R^2$, compare it with the value of $R^2$ in part (1), and comment.
  3. Can we conclude that total solvent and hydrogen consumption are a sufficient number of independent variables for explaining the variability in CO2 product?

  SOLUTION:

  [Matrix plot of Y, X1 and X2]

  $\bar{X}_1 = 918.93, \qquad \bar{X}_2 = 1.865, \qquad \bar{Y} = 22.95$

  $S(X_1Y) = \sum X_1Y - \dfrac{(\sum X_1)(\sum Y)}{n} = 312224 - \dfrac{(9189.32)(229.5)}{10} = 101329.106$

  $S(X_2Y) = \sum X_2Y - \dfrac{(\sum X_2)(\sum Y)}{n} = 511.926 - \dfrac{(18.65)(229.5)}{10} = 83.91$

  $S(X_1X_1) = \sum X_1^2 - \dfrac{(\sum X_1)^2}{n} = 5266118.8$

  $S(X_2X_2) = \sum X_2^2 - \dfrac{(\sum X_2)^2}{n} = 21.24$

  $S(X_1X_2) = \sum X_1X_2 - \dfrac{(\sum X_1)(\sum X_2)}{n} = 3036.32$

  $S(YY) = \sum Y^2 - \dfrac{(\sum Y)^2}{n} = 2341.84$

- 19. $$b_1 = \frac{S(X_2X_2)\,S(X_1Y) - S(X_1X_2)\,S(X_2Y)}{S(X_1X_1)\,S(X_2X_2) - [S(X_1X_2)]^2} = \frac{1897452.6}{102633124.2} = 0.0185$$

  $$b_2 = \frac{S(X_1X_1)\,S(X_2Y) - S(X_1X_2)\,S(X_1Y)}{S(X_1X_1)\,S(X_2X_2) - [S(X_1X_2)]^2} = \frac{134212437.4}{102633124.2} = 1.31$$

  $$b_0 = \bar{Y} - b_1\bar{X}_1 - b_2\bar{X}_2 = 3.52$$

  The fitted regression line is $\hat{Y} = 3.52 + 0.0185X_1 + 1.31X_2$.

  Test of hypothesis about the significance of the partial regression coefficients:

  Test of hypothesis for $\beta_1$:

  1) Construction of hypotheses: $H_0: \beta_1 = 0$, $H_1: \beta_1 \ne 0$
  2) Level of significance: $\alpha = 5\%$
  3) Test statistic: $t = \dfrac{b_1 - \beta_1}{SE(b_1)} = \dfrac{0.0185 - 0}{0.003257} = 5.68$, where

  $$SE(b_1) = S_e \sqrt{\frac{S(X_2X_2)}{S(X_1X_1)\,S(X_2X_2) - [S(X_1X_2)]^2}} = 7.16 \sqrt{\frac{21.24}{(5266118.8)(21.24) - (3036.32)^2}} = 0.003257$$

  4) Decision rule: Reject $H_0$ if $|t_{cal}| \ge t_{\alpha/2}(n-3) = t_{0.025}(7) = 2.365$. The error degrees of freedom are n - 3 = 7 because three parameters are estimated.
  5) Result: $|t_{cal}| = 5.68$ exceeds the critical value, so reject $H_0$ and conclude that there is a significant relationship between CO2 product and solvent total.
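The closed-form solution for two regressors can be checked from the column totals of the data table. In this sketch `corrected` is a helper name introduced here for the shortcut formula, not something from the slides.

```python
# Column totals from the CO2 data table (n = 10 observations)
n = 10
sum_y, sum_x1, sum_x2 = 229.50, 9189.32, 18.65
sum_x1x1, sum_x2x2 = 13710479, 56.0201
sum_x1y, sum_x2y, sum_x1x2 = 312224, 511.926, 20174.4

def corrected(sum_ab, sum_a, sum_b):
    """Corrected sum of products: sum(ab) - sum(a) * sum(b) / n."""
    return sum_ab - sum_a * sum_b / n

s1y = corrected(sum_x1y, sum_x1, sum_y)
s2y = corrected(sum_x2y, sum_x2, sum_y)
s11 = corrected(sum_x1x1, sum_x1, sum_x1)
s22 = corrected(sum_x2x2, sum_x2, sum_x2)
s12 = corrected(sum_x1x2, sum_x1, sum_x2)

# Solve the two normal equations by Cramer's rule
den = s11 * s22 - s12 ** 2
b1 = (s22 * s1y - s12 * s2y) / den
b2 = (s11 * s2y - s12 * s1y) / den
b0 = sum_y / n - b1 * sum_x1 / n - b2 * sum_x2 / n

print(round(b1, 4), round(b2, 2), round(b0, 2))
```

The intermediate numerators and the denominator differ slightly from the slide's values because the slide rounds S(X2X2) and S(X2Y) to two decimals before multiplying. The rounded coefficients agree.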

- 20. 95% C.I. for $\beta_1$:

  $b_1 \pm t_{\alpha/2}(n-3)\,SE(b_1) = 0.0185 \pm t_{0.025}(7)(0.003257) = 0.0185 \pm (2.365)(0.003257)$, i.e. (0.011, 0.026)

  Test of hypothesis for $\beta_2$:

  1) Construction of hypotheses: $H_0: \beta_2 = 0$, $H_1: \beta_2 \ne 0$
  2) Level of significance: $\alpha = 5\%$
  3) Test statistic: $t = \dfrac{b_2 - \beta_2}{SE(b_2)} = \dfrac{1.31 - 0}{1.622} = 0.81$, where

  $$SE(b_2) = S_e \sqrt{\frac{S(X_1X_1)}{S(X_1X_1)\,S(X_2X_2) - [S(X_1X_2)]^2} } = 7.16 \sqrt{\frac{5266118.8}{(5266118.8)(21.24) - (3036.32)^2}} = 1.622$$

  4) Decision rule: Reject $H_0$ if $|t_{cal}| \ge t_{\alpha/2}(n-3) = t_{0.025}(7) = 2.365$

- 21. 5) Result (test of $\beta_2 = 0$): $|t_{cal}| = 0.81$ does not reach the critical value, so do not reject $H_0$ and conclude that there is no significant relationship between CO2 product and hydrogen consumption.

  95% C.I. for $\beta_2$:

  $b_2 \pm t_{\alpha/2}(n-3)\,SE(b_2) = 1.31 \pm t_{0.025}(7)(1.622) = 1.31 \pm (2.365)(1.622)$, i.e. (-2.53, 5.15)

  ANALYSIS OF VARIANCE IN MULTIPLE LINEAR REGRESSION

  The hypothesis $\beta_1 = \beta_2 = 0$ may be tested by the analysis of variance procedure.

  Total SS $= S(YY) = 2341.84$

  Reg. SS $= b_1 S(X_1Y) + b_2 S(X_2Y) = (0.0185)(101329.106) + (1.31)(83.91) = 1983.07$ (computed with unrounded coefficients)

  ANOVA TABLE

  | Source of variation (S.O.V.) | Degrees of freedom (DF) | Sum of squares (SS) | Mean sum of squares (MSS = SS/df) | F cal | F tab |
  |---|---|---|---|---|---|
  | Regression | 2 | 1983.07 | 991.54 | 19.35* | $F_{0.05}(2,7) = 4.74$ |
  | Error | 7 | 358.77 | 51.25 | | |
  | Total | 9 | 2341.84 | | | |

  Coefficient of determination: The coefficient of determination tells us the proportion of variation in the dependent variable explained by the independent variables.

  $$R^2 = \frac{\text{Reg. SS}}{\text{Total SS}} \times 100 = \frac{1983.07}{2341.84} \times 100 = 84.7\%$$
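The ANOVA entries for the two-regressor model can be recomputed from the corrected sums quoted on the slides. This is a sketch with illustrative names. It keeps the coefficients unrounded, which is what reproduces the regression sum of squares of about 1983.

```python
import math

# Corrected sums of squares and products as quoted on the slides
n, k = 10, 3                       # k = number of parameters (b0, b1, b2)
s1y, s2y = 101329.106, 83.91
s11, s22, s12 = 5266118.8, 21.24, 3036.32
syy = 2341.84

den = s11 * s22 - s12 ** 2
b1 = (s22 * s1y - s12 * s2y) / den
b2 = (s11 * s2y - s12 * s1y) / den

# Partition of the total sum of squares
reg_ss = b1 * s1y + b2 * s2y
res_ss = syy - reg_ss

ms_reg = reg_ss / (k - 1)
ms_res = res_ss / (n - k)
f_cal = ms_reg / ms_res
r2 = reg_ss / syy

# Standard error of estimate, used in SE(b1) and SE(b2)
se = math.sqrt(ms_res)

print(round(reg_ss, 2), round(res_ss, 2), round(f_cal, 2))
print(round(100 * r2, 1), round(se, 2))
```

Plugging the rounded coefficients 0.0185 and 1.31 into the same formula gives a regression sum of squares about 1.4 units larger, so rounding should be left to the final step.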

- 22. The value of $R^2$ indicates that about 85% of the variation in the dependent variable is explained by the linear relationship with $X_1$ and $X_2$. The remainder is due to other unknown factors.

  Relative importance of independent variables: Standardized regression coefficients are useful for measuring the relative importance of the independent variables because they are unit-free quantities.

  $$b_1^* = b_1 \sqrt{\frac{S(X_1X_1)}{S(YY)}} = 0.0185 \sqrt{\frac{5266118.8}{2341.84}} = 0.88$$

  $$b_2^* = b_2 \sqrt{\frac{S(X_2X_2)}{S(YY)}} = 1.31 \sqrt{\frac{21.24}{2341.84}} = 0.12$$

  So solvent total ($X_1$) is a more important variable than hydrogen consumption ($X_2$) in predicting the CO2 product.

  Polynomial Regression

  EXAMPLE: Fit an appropriate regression model to describe the relationship between the yield response of a rice variety (Y) and nitrogen fertilizer (X):

  | $X_1 = X$ | $Y$ | $X_2 = X^2$ | $X_1^2$ | $X_2^2$ | $Y^2$ | $X_1Y$ | $X_2Y$ | $X_1X_2$ |
  |---|---|---|---|---|---|---|---|---|
  | 0 | 4878 | 0 | 0 | 0 | 23794884 | 0 | 0 | 0 |
  | 30 | 5506 | 900 | 900 | 810000 | 30316036 | 165180 | 4955400 | 27000 |
  | 60 | 6083 | 3600 | 3600 | 12960000 | 37002889 | 364980 | 21898800 | 216000 |
  | 90 | 6361 | 8100 | 8100 | 65610000 | 40462321 | 572490 | 51524100 | 729000 |
  | 120 | 6291 | 14400 | 14400 | 207360000 | 39576681 | 754920 | 90590400 | 1728000 |
  | 150 | 5876 | 22500 | 22500 | 506250000 | 34527376 | 881400 | 132210000 | 3375000 |
  | 180 | 5478 | 32400 | 32400 | 1049760000 | 30008484 | 986040 | 177487200 | 5832000 |
  | Total: 630 | 40473 | 81900 | 81900 | 1842750000 | 235688671 | 3725010 | 478665900 | 11907000 |

  1. Draw a scatter plot of the data and decide the degree of the polynomial.
  2. Fit an appropriate equation to the data.
  3. Test the significance of the quadratic term.
  4. Determine the values of X and Y at which the quadratic function is a maximum.

- 23. SOLUTION: Draw a scatter diagram and observe the relationship between the two variables. As the pattern is a polynomial of the 2nd degree, fit a curve of the form $\hat{Y} = a + bX + cX^2$.

  [Scatter plot of Y against X]

  Put $X_1 = X$ and $X_2 = X^2$.

  $\bar{X}_1 = 90, \qquad \bar{X}_2 = 11700, \qquad \bar{Y} = 5781.86$

  $S(X_1Y) = \sum X_1Y - \dfrac{(\sum X_1)(\sum Y)}{n} = 82440$

  $S(X_2Y) = \sum X_2Y - \dfrac{(\sum X_2)(\sum Y)}{n} = 5131800$

  $S(X_1X_1) = \sum X_1^2 - \dfrac{(\sum X_1)^2}{n} = 25200$

  $S(X_2X_2) = \sum X_2^2 - \dfrac{(\sum X_2)^2}{n} = 884520000$

  $S(X_1X_2) = \sum X_1X_2 - \dfrac{(\sum X_1)(\sum X_2)}{n} = 4536000$

  $S(YY) = \sum Y^2 - \dfrac{(\sum Y)^2}{n} = 1679566.86$

- 24. $$b_1 = \frac{S(X_2X_2)\,S(X_1Y) - S(X_1X_2)\,S(X_2Y)}{S(X_1X_1)\,S(X_2X_2) - [S(X_1X_2)]^2} = \frac{4.96420 \times 10^{13}}{1.71461 \times 10^{12}} = 28.9$$

  $$b_2 = \frac{S(X_1X_1)\,S(X_2Y) - S(X_1X_2)\,S(X_1Y)}{S(X_1X_1)\,S(X_2X_2) - [S(X_1X_2)]^2} = -0.143$$

  $$b_0 = \bar{Y} - b_1\bar{X}_1 - b_2\bar{X}_2 = 4845$$

  Regression Analysis: Y versus X1, X2

  The fitted regression equation is $\hat{Y} = 4845 + 29.0X_1 - 0.143X_2$.

  | Predictor | Coef | SE Coef | T | P |
  |---|---|---|---|---|
  | Constant | 4845.40 | 68.86 | 70.36 | 0.000 |
  | X | 28.952 | 1.792 | 16.16 | 0.000 |
  | X2 | -0.142672 | 0.009564 | -14.92 | 0.000 |

  S = 78.89, R-Sq = 98.5%, R-Sq(adj) = 97.8%

  Analysis of Variance

  | Source | DF | SS | MS | F | P |
  |---|---|---|---|---|---|
  | Regression | 2 | 1654670 | 827335 | 132.92 | 0.000 |
  | Residual Error | 4 | 24897 | 6224 | | |
  | Total | 6 | 1679567 | | | |
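The quadratic fit can be reproduced from the raw rice data by treating X and X² as two regressors, and the fitted parabola $b_0 + b_1X + b_2X^2$ then has its turning point at $X = -b_1/(2b_2)$. A minimal sketch, with `corrected` as a helper name introduced here:

```python
# Rice yield data: nitrogen level (X) and yield (Y)
x = [0, 30, 60, 90, 120, 150, 180]
y = [4878, 5506, 6083, 6361, 6291, 5876, 5478]
n = len(x)

x1 = x                       # linear term
x2 = [v * v for v in x]      # quadratic term

def corrected(a, b):
    """Corrected sum of products of two equal-length lists."""
    return sum(p * q for p, q in zip(a, b)) - sum(a) * sum(b) / n

s1y, s2y = corrected(x1, y), corrected(x2, y)
s11, s22, s12 = corrected(x1, x1), corrected(x2, x2), corrected(x1, x2)

den = s11 * s22 - s12 ** 2
b1 = (s22 * s1y - s12 * s2y) / den
b2 = (s11 * s2y - s12 * s1y) / den
b0 = sum(y) / n - b1 * sum(x1) / n - b2 * sum(x2) / n

# Turning point of the fitted parabola (a maximum because b2 is negative)
x_max = -b1 / (2 * b2)
y_max = b0 - b1 ** 2 / (4 * b2)

print(round(b0, 1), round(b1, 3), round(b2, 6))
print(round(x_max, 1), round(y_max, 1))
```

With unrounded coefficients the maximum is at about X = 101.5 with a yield of roughly 6314. Values computed from the rounded coefficients 29 and -0.143 differ slightly in the last digits.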

- 25. Comparison of the 1st degree and 2nd degree curves:

  | | Simple linear regression (1st degree curve) | Curvilinear regression (2nd degree curve) |
  |---|---|---|
  | Fitted equation | $\hat{Y} = 5487 + 3.27X$ | $\hat{Y} = 4845 + 29.0X - 0.143X^2$ |
  | $S_e$ | 531 | 78.89 |
  | $R^2$ | 16.1% | 98.5% |

  The 2nd degree curve is appropriate for the above data set.

  The value of X at which the maximum or minimum of the quadratic regression occurs:

  $$X = -\frac{b_1}{2b_2} = -\frac{29}{2(-0.143)} = 101.4$$

  The maximum or minimum value of Y is

  $$b_0 - \frac{b_1^2}{4b_2} = 4845 - \frac{(29)^2}{4(-0.143)} = 6315.3$$

  EXAMPLE-2: Observations on the yield of a chemical reaction taken at various temperatures were recorded as follows.

  | X (°C) | Y | $X^2$ | $\hat{Y}$ | $e$ | $e^2$ |
  |---|---|---|---|---|---|
  | 3 | 2.8 | 9 | 3.31300 | -0.513000 | 0.263169 |
  | 5 | 4.9 | 25 | 4.60123 | 0.298770 | 0.089264 |
  | 8 | 6.7 | 64 | 6.14558 | 0.554420 | 0.307382 |
  | 14 | 7.6 | 196 | 7.83750 | -0.237501 | 0.056407 |
  | 21 | 7.2 | 441 | 7.45758 | -0.257576 | 0.066345 |
  | 25 | 6.1 | 625 | 6.10236 | -0.002359 | 0.000006 |
  | 28 | 4.7 | 784 | 4.54275 | 0.157246 | 0.024726 |

- 26. Column totals of the Example-2 table: $\sum X = 104$, $\sum Y = 40.0$, $\sum X^2 = 2144$, $\sum e = 0.000000$, $\sum e^2 = 0.807300$.

  1. Draw a scatter plot of the data and decide the degree of the polynomial.
  2. Fit an appropriate equation to the data.
  3. Test the significance of the quadratic term.
  4. Estimate the mean yield at X = 10 °C, and compute the 95% C.I. for the mean value of Y.
  5. Determine the values of X and Y at which the quadratic function is a maximum.

  SOLUTION:

  [Scatter plot of Y against X]

  Regression Analysis

  With $X_1 = X$ and $X_2 = X^2$, the sums computed from the seven observations are: $\sum X_1 = 104$, $\sum Y = 40$, $\sum X_2 = 2144$, $\sum X_1^2 = 2144$, $\sum X_2^2 = 1242980$, $\sum Y^2 = 245.64$, $\sum X_1Y = 628.2$, $\sum X_2Y = 12738.6$, $\sum X_1X_2 = 50246$.

  The regression equation is $\hat{Y} = 0.993 + 0.851X - 0.0259X^2$.

  | Predictor | Coef | StDev | T | P |
  |---|---|---|---|---|
  | Constant | 0.9927 | 0.5614 | 1.77 | 0.152 |
  | X | 0.85105 | 0.09601 | 8.86 | 0.001 |
  | X2 | -0.025866 | 0.003069 | -8.43 | 0.001 |

- 27. S = 0.4492, R-Sq = 95.3%, R-Sq(adj) = 92.9%

  Analysis of Variance

  | Source | DF | SS | MS | F | P |
  |---|---|---|---|---|---|
  | Regression | 2 | 16.2613 | 8.1306 | 40.29 | 0.002 |
  | Error | 4 | 0.8073 | 0.2018 | | |
  | Total | 6 | 17.0686 | | | |
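As a cross-check of the Example-2 output, the quadratic can also be fitted by solving the normal equations directly. The sketch below uses a small Gaussian-elimination solver written for illustration. `solve` and `polyfit` are helper names introduced here and are not the routine that produced the printed output.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def polyfit(x, y, degree):
    """Least-squares polynomial coefficients [b0, b1, ...] via the normal equations."""
    cols = [[xi ** p for xi in x] for p in range(degree + 1)]
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(u * v for u, v in zip(ci, y)) for ci in cols]
    return solve(xtx, xty)

# Example-2 data: temperature (deg C) and yield
x = [3, 5, 8, 14, 21, 25, 28]
y = [2.8, 4.9, 6.7, 7.6, 7.2, 6.1, 4.7]

b0, b1, b2 = polyfit(x, y, 2)
fitted = [b0 + b1 * xi + b2 * xi ** 2 for xi in x]
sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
y_bar = sum(y) / len(y)
sst = sum((yi - y_bar) ** 2 for yi in y)
r2 = 1 - sse / sst
x_max = -b1 / (2 * b2)  # temperature at which the fitted yield peaks

print(b0, b1, b2)
print(sse, r2, x_max)
```

Solving the normal equations in this form is adequate for a small, low-degree problem like this one. For higher degrees or wider X ranges the system becomes ill-conditioned, and an orthogonal-decomposition routine from a numerical library would be the safer choice.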