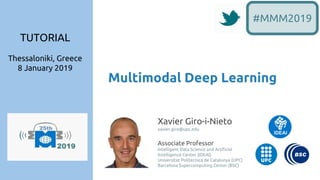

Multimodal Deep Learning

- 1. Multimodal Deep Learning #MMM2019 Xavier Giro-i-Nieto xavier.giro@upc.edu Associate Professor Intelligent Data Science and Artificial Intelligence Center (IDEAI) Universitat Politecnica de Catalunya (UPC) Barcelona Supercomputing Center (BSC) TUTORIAL Thessaloniki, Greece 8 January 2019

- 4. bit.ly/MMM2019 @DocXavi 4 Outline 1. Motivation 2. Deep Neural Topologies 3. Multimedia Encoding and Decoding 4. Multimodal Architectures a. Cross-modal b. Self-supervised Learning c. Multimodal (input)

- 9. bit.ly/MMM2019 @DocXavi 9 Encoder 0 1 0 Cat A Krizhevsky, I Sutskever, GE Hinton “Imagenet classification with deep convolutional neural networks” NIPS 2012

- 10. One-hot Representation: [1,0,0], [0,1,0], [0,0,1]. Slide concept: Perronnin, F., Tutorial on LSVR @ CVPR’14, Output embedding for LSVR

- 13. Decoder: 0 1 0 (Cat). Radford, Alec, Luke Metz, and Soumith Chintala. "Unsupervised representation learning with deep convolutional generative adversarial networks." ICLR 2016. #DCGAN Fig: Xudong Mao

- 16. bit.ly/MMM2019 @DocXavi 16 Outline 1. Motivation 2. Deep Neural Topologies 3. Multimedia Encoding and Decoding 4. Multimodal Architectures a. Cross-modal b. Self-supervised Learning c. Multimodal (input)

- 18. bit.ly/MMM2019 @DocXavi 18 One Perceptron Multiple options as activation functions f(·):

- 19. bit.ly/MMM2019 @DocXavi 19 A Layer of N Perceptrons

- 20. bit.ly/MMM2019 @DocXavi 20 Neural Network (single hidden layer)

- 21. Multi-Layer Perceptron (MLP): input (x), feed-forward hidden states h1 & h2, feed-forward weights (Wi), output (y). Figure: Hugo Larochelle

- 22. MLPs for Multimedia Data. Limitation #1: Very large number of input data samples (xi), which requires a gigantic number of model parameters. Limitation #2: Does not naturally handle input data of variable dimension (e.g. audio/video/word sequences). Figure: Ranzato

- 23. MLPs for Multimedia Data. Limitation #1: Very large number of input data samples (xi), which requires a gigantic number of model parameters. For a 200x200 image, we have 4x10^4 neurons, each one with 4x10^4 inputs, that is 16x10^8 parameters (*), only for one layer! (*) Biases not counted. Figure credit: Ranzato

- 24. MLPs for Multimedia Data. Locally connected network: For a 200x200 image, we have 4x10^4 neurons, each one with 10x10 “local connections” (also called receptive field) as inputs, that is 4x10^6 parameters. What else can we do to reduce the number of parameters? Figure credit: Ranzato

- 25. CNNs for Multimedia Data (2D). Convolutional Neural Networks (ConvNets, CNNs): Translation invariance: we can use the same parameters to capture a specific “feature” in any area of the image. We can use different sets of parameters to capture different features. These operations are equivalent to performing convolutions with different filters. Ex: With 100 different filters (or feature extractors) of size 10x10, the number of parameters is 10^4.
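
The parameter counts quoted on the three slides above can be checked with a few lines of arithmetic. This is only a sketch: the variable names are illustrative, and biases are left out, as on the slides.

```python
# Weights needed by one layer on a 200x200 image (biases not counted).
height, width = 200, 200
n_inputs = height * width          # 4 x 10^4 input pixels
n_neurons = n_inputs               # one neuron per pixel, as on the slides

# Fully connected: every neuron is connected to every input pixel.
fully_connected = n_neurons * n_inputs

# Locally connected: every neuron only sees its own 10x10 receptive field.
receptive_field = 10 * 10
locally_connected = n_neurons * receptive_field

# Convolutional: 100 filters of size 10x10, shared across all positions.
n_filters = 100
convolutional = n_filters * receptive_field

print(fully_connected)    # 1600000000 (16 x 10^8)
print(locally_connected)  # 4000000    (4 x 10^6)
print(convolutional)      # 10000      (10^4)
```

Weight sharing is what removes the dependence on image size: the convolutional count depends only on the number and size of the filters.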

- 26. bit.ly/MMM2019 @DocXavi 26 CNNs for Multimedia Data (2D) LeCun, Yann, Léon Bottou, Yoshua Bengio, and Patrick Haffner. "Gradient-based learning applied to document recognition." Proceedings of the IEEE 86, no. 11 (1998): 2278-2324. LeNet-5

- 27. CNNs for Multimedia Data (1D). Let’s say we have a sequence of 100-dimensional vectors describing words (text), e.g. “hi there how are you doing ?”, with sequence length = 8. We arrange them in a 2D matrix of size (sequence length = 8, 100 dim). Slide: Santiago Pascual (UPC DLAI 2018)

- 28. CNNs for Multimedia Data (1D). We can apply a 1D convolution over the 2D matrix (sequence length = 8, 100 dim): for an arbitrary kernel K1 of width = 3, each 1D convolutional kernel is a 2D matrix of size (3, 100). Slide: Santiago Pascual (UPC DLAI 2018)

- 29. CNNs for Multimedia Data (1D). (Keep in mind we are working with depth = 100, although here we depict just depth = 1 for simplicity.) The kernel (w1, w2, w3) slides over inputs 1 to 8 and produces outputs 1 to 6. The length of the result of the convolution is known to be: seq_length - filter_width + 1 = 8 - 3 + 1 = 6. So the output matrix will be (6, 100) when 100 such kernels are used. Slide: Santiago Pascual (UPC DLAI 2018)
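
The output-length formula above can be illustrated with a small pure-Python sketch for the depth = 1 case drawn on the slide. The function name `conv1d_valid` and the kernel weights are hypothetical choices for illustration, not part of the tutorial.

```python
def conv1d_valid(sequence, kernel):
    """Slide `kernel` over `sequence` without padding (depth = 1).

    As in most deep learning libraries, the kernel is not flipped
    (this is technically a cross-correlation).
    """
    k = len(kernel)
    return [
        sum(w * x for w, x in zip(kernel, sequence[i:i + k]))
        for i in range(len(sequence) - k + 1)
    ]


sequence = [1, 2, 3, 4, 5, 6, 7, 8]   # sequence length = 8
kernel = [1, 0, -1]                   # hypothetical weights w1, w2, w3
output = conv1d_valid(sequence, kernel)

print(len(output))  # 6, i.e. seq_length - filter_width + 1
print(output)       # [-2, -2, -2, -2, -2, -2]
```

With depth = 100, each kernel would hold 3 x 100 weights and still produce a single output value per position.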

- 30. CNNs for Multimedia Data (1D). We can add zero padding on both sides of the sequence: the kernel (w1, w2, w3) slides over the padded inputs 0, 1 to 8, 0 and produces outputs 1 to 8. The length of the result of the convolution is well known to be: seq_length - filter_width + 1 = 10 - 3 + 1 = 8, where 10 is the padded sequence length. So the output matrix will be (8, 100). Slide: Santiago Pascual (UPC DLAI 2018)

- 31. CNNs for Multimedia Data (1D). We can add zero padding on just one side of the sequence: the kernel (w1, w2, w3) slides over the padded inputs 0, 0, 1 to 8 and produces outputs 1 to 8. The length of the result of the convolution is well known to be: seq_length - filter_width + 1 = 10 - 3 + 1 = 8, where 10 is the padded sequence length. So the output matrix will be (8, 100) because we had padding. HOWEVER: now the output at every time-step t depends only on the two previous inputs and the current time-step → every output is causal. Roughly: we make a causal convolution by left-padding the sequence with (filter_width - 1) zeros. Slide: Santiago Pascual (UPC DLAI 2018)
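
A minimal sketch of the causal convolution described above, again for depth = 1. The function name `conv1d_causal` and the all-ones kernel are assumptions made for illustration.

```python
def conv1d_causal(sequence, kernel):
    """Left-pad with (filter_width - 1) zeros, then convolve.

    The output has the same length as the input, and output[t] only
    depends on sequence[t - filter_width + 1 : t + 1].
    """
    k = len(kernel)
    padded = [0] * (k - 1) + list(sequence)
    return [
        sum(w * x for w, x in zip(kernel, padded[t:t + k]))
        for t in range(len(sequence))
    ]


sequence = [1, 2, 3, 4, 5, 6, 7, 8]
kernel = [1, 1, 1]                      # hypothetical weights w1, w2, w3
causal_output = conv1d_causal(sequence, kernel)
print(causal_output)  # [1, 3, 6, 9, 12, 15, 18, 21]

# Changing a future sample leaves all earlier outputs untouched.
changed = conv1d_causal(sequence[:5] + [100, 7, 8], kernel)
print(changed[:5] == causal_output[:5])  # True
```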

- 32. MLPs for Sequences. Limitation #2: Dimensionality of input data is variable (e.g. audio/video/word sequences). If we have a sequence of samples, predict sample x[t+1] knowing previous values {x[t], x[t-1], x[t-2], …, x[t-τ]}. Slide: Santiago Pascual (UPC 2017)

- 33. MLPs for Sequences. Feed-forward approach: ● static window of size L ● slide the window time-step wise. Window x[t-L], …, x[t-1], x[t] → prediction x[t+1]. Slide: Santiago Pascual (UPC 2017)

- 34. MLPs for Sequences. Feed-forward approach: ● static window of size L ● slide the window time-step wise. Window x[t-L+1], …, x[t], x[t+1] → prediction x[t+2]. Slide: Santiago Pascual (UPC 2017)

- 35. MLPs for Sequences. Feed-forward approach: ● static window of size L ● slide the window time-step wise. Window x[t-L+2], …, x[t+1], x[t+2] → prediction x[t+3]. Slide: Santiago Pascual (UPC 2017)

- 36. MLPs for Sequences. Problems for the feed-forward + static window approach: ● What happens if we increase L (x1, …, xL → x1, …, x2L → x1, …, x3L)? → Fast growth of the number of parameters! ● Decisions are independent between time-steps! ○ The network doesn’t care about what happened at the previous time-step, only the present window matters → doesn’t look good. Slide: Santiago Pascual (UPC 2017)
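
The static-window approach can be sketched as a function that turns a sequence into (window, target) training pairs for an MLP. The helper names, the toy series, and the convention that a window holds exactly L past samples are assumptions for illustration.

```python
def sliding_windows(series, L):
    """Return (window, target) pairs: L past samples predict the next one."""
    return [(series[t - L:t], series[t]) for t in range(L, len(series))]


def first_layer_weights(L, hidden_units):
    """Weights of the first fully connected layer (biases not counted)."""
    return L * hidden_units


series = [10, 11, 12, 13, 14, 15]
pairs = sliding_windows(series, L=3)
for window, target in pairs:
    print(window, "->", target)
# [10, 11, 12] -> 13
# [11, 12, 13] -> 14
# [12, 13, 14] -> 15

# The first layer grows linearly with the window size L.
print([first_layer_weights(L, hidden_units=512) for L in (100, 200, 300)])
# [51200, 102400, 153600]
```

Each pair is processed independently, which is the second problem listed above: nothing is carried over from one window to the next.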

- 37. bit.ly/MMM2019 @DocXavi 37 RNNs for Sequences #2 Solution A: Build specific connections capturing the temporal evolution → Shared weights in time

- 38. bit.ly/MMM2019 @DocXavi 38 RNNs for Sequences #2 Recurrent Weights (U) Feed-forward Weights (W)
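
A minimal sketch of a vanilla recurrent layer using the slide's names, W for the feed-forward weights and U for the recurrent weights. The tanh activation, the bias `b`, the zero initial state and the toy weight values are assumptions, not taken from the slides.

```python
import math


def rnn_step(x_t, h_prev, W, U, b):
    """One time step: h_t = tanh(W x_t + U h_prev + b)."""
    return [
        math.tanh(
            sum(W[i][j] * x_t[j] for j in range(len(x_t)))
            + sum(U[i][k] * h_prev[k] for k in range(len(h_prev)))
            + b[i]
        )
        for i in range(len(b))
    ]


def rnn_forward(xs, W, U, b):
    """Apply the same W, U and b at every time step (shared weights in time)."""
    h = [0.0] * len(b)
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W, U, b)
        states.append(h)
    return states


W = [[0.5], [-0.5]]             # feed-forward weights (2 hidden units, 1 input)
U = [[0.1, 0.0], [0.0, 0.1]]    # recurrent weights (2 x 2)
b = [0.0, 0.0]
states = rnn_forward([[1.0], [0.0], [-1.0]], W, U, b)
print(len(states))  # 3 hidden states, one per time step
```

The second input is zero, yet the second hidden state is not: the recurrent weights U carry information over from the previous time step.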

- 40. RNNs for Sequences. Unfold in time (rotation 90°).

- 41. RNNs for Sequences. Raimi Karim, “Animated RNN, LSTM and GRU” (Towards Data Science 2018)

- 42. bit.ly/MMM2019 @DocXavi 42 LSTMs for Sequences #2 Hochreiter, Sepp, and Jürgen Schmidhuber. "Long short-term memory." Neural computation 9, no. 8 (1997): 1735-1780.

- 43. LSTMs for Sequences. Raimi Karim, “Animated RNN, LSTM and GRU” (Towards Data Science 2018)

- 44. GRUs for Sequences. GRU obtains a performance similar to LSTM with one fewer gate. Cho, Kyunghyun, Bart Van Merriënboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. "Learning phrase representations using RNN encoder-decoder for statistical machine translation." EMNLP 2014.

- 45. GRUs for Sequences. Raimi Karim, “Animated RNN, LSTM and GRU” (Towards Data Science 2018)

- 46. bit.ly/MMM2019 @DocXavi 46 Efficient RNN for Sequences Used Unused Victor Campos, Brendan Jou, Xavier Giro-i-Nieto, Jordi Torres, and Shih-Fu Chang. “Skip RNN: Learning to Skip State Updates in Recurrent Neural Networks”, ICLR 2018. #SkipRNN

- 47. bit.ly/MMM2019 @DocXavi 47 Efficient RNN for Sequences Victor Campos, Brendan Jou, Xavier Giro-i-Nieto, Jordi Torres, and Shih-Fu Chang. “Skip RNN: Learning to Skip State Updates in Recurrent Neural Networks”, ICLR 2018. #SkipRNN Used Unused CNN CNN CNN... RNN RNN RNN...

- 48. Attention. Chris Olah & Shan Carter, “Attention and Augmented Recurrent Neural Networks” (Google Brain 2016)

- 49. Attention. Chris Olah & Shan Carter, “Attention and Augmented Recurrent Neural Networks” (Google Brain 2016)

- 50. bit.ly/MMM2019 @DocXavi 50 Self-Attention Jay Alammar, “The Illustrated Transformer” Self-attention refers to attending to other elements from the SAME sequence.
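
A minimal sketch of self-attention over a toy sequence, to make "attending to other elements from the same sequence" concrete. It assumes the scaled dot-product form and, for brevity, uses the inputs directly as queries, keys and values; real models apply learned projections first. All names are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X):
    """Each element of X attends to every element of the same sequence X."""
    d = len(X[0])
    outputs, weights = [], []
    for query in X:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in X
        ]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all elements.
        outputs.append(
            [sum(w_j * value[c] for w_j, value in zip(w, X)) for c in range(d)]
        )
    return outputs, weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # toy sequence of 3 vectors
outputs, weights = self_attention(X)
print(len(outputs), len(outputs[0]))  # 3 2
```

Every row of `weights` sums to one, so each output is a convex combination of the elements of the input sequence.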

- 51. bit.ly/MMM2019 @DocXavi 51 Auto-regressive FF for Sequences #2 van den Oord, Aaron, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, and Koray Kavukcuoglu. "WaveNet: A Generative Model for Raw Audio." arXiv preprint arXiv:1609.03499 (2016).

- 52. Auto-regression for Sequences. van den Oord, Aaron, Nal Kalchbrenner, and Koray Kavukcuoglu. "Pixel Recurrent Neural Networks." ICML 2016.

- 53. bit.ly/MMM2019 @DocXavi 53 Outline 1. Motivation 2. Deep Neural Topologies 3. Multimedia Encoding and Decoding 4. Multimodal Architectures a. Cross-modal b. Self-supervised Learning c. Multimodal (input) d. Multi-task (output)

- 56. bit.ly/MMM2019 @DocXavi 56 Image Encoding A Krizhevsky, I Sutskever, GE Hinton “Imagenet classification with deep convolutional neural networks” NIPS 2012 Cat CNN FC

- 57. Video Encoding. Per-frame CNN features are merged by a combination method, commonly implemented as a small NN on top of a pooling operation (e.g. max, sum, average). Drawback: pooling is not aware of the temporal order! Ng et al., Beyond short snippets: Deep networks for video classification, CVPR 2015. Slide: Víctor Campos (UPC 2018)
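
The drawback of pooling can be shown directly: reversing the frame order leaves the pooled descriptor unchanged. The per-frame feature vectors below are made-up values standing in for CNN outputs, and the function names are illustrative.

```python
def mean_pool(frame_features):
    """Average the per-frame feature vectors into one clip descriptor."""
    n = len(frame_features)
    dim = len(frame_features[0])
    return [sum(f[c] for f in frame_features) / n for c in range(dim)]


def max_pool(frame_features):
    """Element-wise maximum over the per-frame feature vectors."""
    dim = len(frame_features[0])
    return [max(f[c] for f in frame_features) for c in range(dim)]


# Hypothetical CNN features for three consecutive frames.
frames = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
reversed_frames = list(reversed(frames))

print(mean_pool(frames) == mean_pool(reversed_frames))  # True
print(max_pool(frames) == max_pool(reversed_frames))    # True
```

A clip played forwards and the same clip played backwards therefore get the same descriptor.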

- 58. Video Encoding. Recurrent Neural Networks are well suited for processing sequences: per-frame CNN features are fed to an RNN. Drawback: RNNs are sequential and cannot be parallelized across time steps. Donahue et al., Long-term Recurrent Convolutional Networks for Visual Recognition and Description, CVPR 2015. Slide: Víctor Campos (UPC 2018)

- 60. bit.ly/MMM2019 @DocXavi 60 Image Decoding CNN Radford, Alec, Luke Metz, and Soumith Chintala. "Unsupervised representation learning with deep convolutional generative adversarial networks." ICLR 2016. #DCGAN

- 62. bit.ly/MMM2019 @DocXavi 62 Image Encoding and Decoding Noh et al. Learning Deconvolution Network for Semantic Segmentation. ICCV 2015 “Regular” VGG “Upside down” VGG

- 63. bit.ly/MMM2019 @DocXavi 63 Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. "U-net: Convolutional networks for biomedical image segmentation." MICCAI 2015. Isola, Phillip, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. "Image-to-image translation with conditional adversarial networks." CVPR 2017.

- 66. How to encode text?

- 67. How to encode text? Example: letters. |V| = 30. ‘a’: x = 1, ‘b’: x = 2, ‘c’: x = 3, ..., ‘.’: x = 30. We impose a fake range ordering.

- 68. One hot encoding. Example: letters. |V| = 30. ‘a’: xT = [1,0,0, ..., 0], ‘b’: xT = [0,1,0, ..., 0], ‘c’: xT = [0,0,1, ..., 0], ..., ‘.’: xT = [0,0,0, ..., 1]

- 69. One hot encoding. Number of words, |V|? B2: 5K, C2: 18K, LVSR: 50-100K, Wikipedia (1.6B): 400K, Crawl data (42B): 2M. cat: xT = [1,0,0, ..., 0], dog: xT = [0,1,0, ..., 0], ..., house: xT = [0,0,0, …,0,1,0,...,0], ...

- 70. One hot encoding. cat: xT = [1,0,0, ..., 0], dog: xT = [0,1,0, ..., 0], ..., house: xT = [0,0,0, …,0,1,0,...,0], ... ● Large dimensionality ● Sparse representation (mostly zeros) ● Blind representation ○ Only operators: ‘!=’ and ‘==’
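
One-hot encoding for the 30-symbol letter example can be sketched as follows. The exact vocabulary (26 lowercase letters plus four extra symbols ending with '.') is an assumption chosen only to reach |V| = 30.

```python
import string

# Hypothetical vocabulary with |V| = 30: 26 letters plus 4 extra symbols.
vocabulary = list(string.ascii_lowercase) + [" ", ",", "?", "."]
index = {symbol: i for i, symbol in enumerate(vocabulary)}


def one_hot(symbol):
    """Return a |V|-dimensional vector with a single 1 at the symbol's index."""
    vector = [0] * len(vocabulary)
    vector[index[symbol]] = 1
    return vector


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


print(one_hot("a")[:3])                 # [1, 0, 0]
print(one_hot("b")[:3])                 # [0, 1, 0]
print(dot(one_hot("a"), one_hot("b")))  # 0
print(dot(one_hot("a"), one_hot("a")))  # 1
```

The dot products show why the representation is "blind": any two different symbols are equally dissimilar, so only equality and inequality can be tested.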

- 71. Text projection to word embeddings. The one-hot vector is linearly projected to an embedding space of lower dimension with a fully connected (FC) layer, which gives the representation.
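
Because the input is one-hot, the linear projection reduces to selecting one row of the weight matrix. The sketch below uses a made-up 3-word vocabulary and a made-up 3 x 2 embedding matrix `E`; both are illustrative assumptions.

```python
def project(one_hot_vector, E):
    """Linear projection x^T E of a one-hot vector (no bias, no activation)."""
    d = len(E[0])
    return [sum(x * row[c] for x, row in zip(one_hot_vector, E)) for c in range(d)]


# Hypothetical embedding matrix: |V| = 3 words, d = 2 dimensions.
E = [
    [0.2, -0.1],   # cat
    [0.3, -0.2],   # dog
    [-0.7, 0.9],   # house
]

dog = [0, 1, 0]
print(project(dog, E))          # [0.3, -0.2]
print(project(dog, E) == E[1])  # True: the projection is a row lookup
```

This is why embedding layers are usually implemented as a table lookup rather than as a matrix product.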

- 72. Text projection to word embeddings. t-SNE: Embed high dimensional data points (i.e. feature codes) so that pairwise distances are preserved in local neighborhoods. Maaten & Hinton. Visualizing Data using t-SNE. Journal of Machine Learning Research (2008) #tsne. Figure: Christopher Olah, Visualizing Representations

- 73. Text projection to word embeddings (Woman-Man). Pennington, Jeffrey, Richard Socher, and Christopher Manning. "Glove: Global vectors for word representation." EMNLP 2014

- 74. Text projection to word embeddings. ● Represent words using vectors of reduced dimension d (~100 - 500) ● Meaningful (semantic, syntactic) distances ● Good embeddings are useful for many other tasks

- 75. Training Word Embeddings. Self-supervised learning. Bengio, Yoshua, Réjean Ducharme, Pascal Vincent, and Christian Jauvin. "A neural probabilistic language model." Journal of machine learning research 3, no. Feb (2003): 1137-1155. Figure: TensorFlow tutorial

- 76. Training Word Embeddings. Self-supervised learning. Sentence: “the cat climbed a tree”. Given context: a, cat, the, tree. Estimate prob. of climbed. Mikolov, Tomas, Ilya Sutskever, Kai Chen, Greg S. Corrado, and Jeff Dean. "Distributed representations of words and phrases and their compositionality." NIPS 2013 #word2vec #continuousbow

- 77. Training Word Embeddings. Self-supervised learning. Sentence: “the cat climbed a tree”. Given word: climbed. Estimate prob. of context words: a, cat, the, tree. Mikolov, Tomas, Ilya Sutskever, Kai Chen, Greg S. Corrado, and Jeff Dean. "Distributed representations of words and phrases and their compositionality." NIPS 2013 #word2vec #skipgram
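
The two self-supervised setups above differ only in which side is the input and which is the target. The sketch below builds the training examples for the slide's sentence; the window size of 2 and the helper name `context_windows` are assumptions.

```python
def context_windows(tokens, window=2):
    """Yield (target, context) with up to `window` words on each side."""
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
        yield target, context


tokens = "the cat climbed a tree".split()

# Continuous bag-of-words: the context words predict the center word.
cbow_examples = [(context, target) for target, context in context_windows(tokens)]

# Skip-gram: the center word predicts each context word separately.
skipgram_examples = [
    (target, c) for target, context in context_windows(tokens) for c in context
]

print(cbow_examples[2])
# (['the', 'cat', 'a', 'tree'], 'climbed')
print([pair for pair in skipgram_examples if pair[0] == "climbed"])
# [('climbed', 'the'), ('climbed', 'cat'), ('climbed', 'a'), ('climbed', 'tree')]
```

No manual labels are involved: the targets come from the text itself, which is why the slides file this under self-supervised learning.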

- 78. Text Encoding: RNN, FC, Representation. Cho, Kyunghyun, Bart Van Merriënboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. "Learning phrase representations using RNN encoder-decoder for statistical machine translation." EMNLP 2014. Fig: Kyunghyun Cho, “Introduction to Neural Machine Translation with GPUs” (2015)

- 79. bit.ly/MMM2019 @DocXavi 79 Gehring, Jonas, Michael Auli, David Grangier, Denis Yarats, and Yann N. Dauphin. "Convolutional sequence to sequence learning." ICML 2017. Text Encoding CNN

- 81. bit.ly/MMM2019 @DocXavi 81 Text Decoding Kyunghyun Cho, “Introduction to Neural Machine Translation with GPUs” (2015) RNN Representation

- 83. bit.ly/MMM2019 @DocXavi 83 Neural Machine Translation (NMT) Kyunghyun Cho, “Introduction to Neural Machine Translation with GPUs” (2015)

- 84. 84 Neural Machine Translation (NMT)

- 85. 85 Kyunghyun Cho, “Introduction to Neural Machine Translation with GPUs” (2015) Representation or Embedding Neural Machine Translation (NMT)

- 86. Neural Machine Translation (NMT). The Seq2Seq variation: ● trigger the output generation with an input <go> symbol. ● the predicted word at timestep t becomes the input at t+1. Sutskever, Ilya, Oriol Vinyals, and Quoc V. Le. "Sequence to sequence learning with neural networks." NIPS 2014.
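
The two bullets of the Seq2Seq variation describe a decoding loop, sketched below. The `step` function stands in for the decoder RNN plus the choice of the most likely word; here it is a hypothetical lookup table, and the `<eos>` stop symbol and `max_len` limit are assumptions not shown on the slide.

```python
def greedy_decode(step, initial_state, max_len=10, go="<go>", eos="<eos>"):
    """Generate words one by one, feeding each prediction back as input."""
    word, state, output = go, initial_state, []
    for _ in range(max_len):
        word, state = step(word, state)   # prediction at t is the input at t+1
        if word == eos:
            break
        output.append(word)
    return output


# Toy stand-in for a trained decoder: previous word -> next word.
TOY_TABLE = {"<go>": "le", "le": "chat", "chat": "<eos>"}


def toy_step(prev_word, state):
    """Hypothetical decoder step; a real one would also update `state`."""
    return TOY_TABLE[prev_word], state


print(greedy_decode(toy_step, initial_state=None))  # ['le', 'chat']
```

In a real system `initial_state` would be the representation produced by the encoder.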

- 87. NMT with Attention (encoder + decoder). Attention allows the decoder to use multiple vectors, whose number depends on the length of the input. Slide: Marta R. Costa-jussà (UPC DLAI 2018)

- 89. NMT with Attention. Chris Olah & Shan Carter, “Attention and Augmented Recurrent Neural Networks” (Google Brain 2016)

- 90. 90 Neural Machine Translation (NMT) CNN Gehring, Jonas, Michael Auli, David Grangier, Denis Yarats, and Yann N. Dauphin. "Convolutional sequence to sequence learning." ICML 2017. Attention

- 91. 91 Neural Machine Translation (NMT) Self-Attention Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. "Attention is all you need." NIPS 2017.

- 94. Speech Encoding. RNN: Chan, William, Navdeep Jaitly, Quoc Le, and Oriol Vinyals. "Listen, attend and spell: A neural network for large vocabulary conversational speech recognition." ICASSP 2016. CNN: Oord, Aaron van den, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, and Koray Kavukcuoglu. "Wavenet: A generative model for raw audio." arXiv preprint arXiv:1609.03499 (2016).

- 96. Audio Decoding. RNN: Mehri, Soroush, Kundan Kumar, Ishaan Gulrajani, Rithesh Kumar, Shubham Jain, Jose Sotelo, Aaron Courville, and Yoshua Bengio. "SampleRNN: An unconditional end-to-end neural audio generation model." ICLR 2017. CNN: Oord, Aaron van den, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, and Koray Kavukcuoglu. "Wavenet: A generative model for raw audio." arXiv preprint arXiv:1609.03499 (2016).

- 98. Encoder, Representation, Decoder. Audio formats at the encoder input and at the decoder output: Raw, MFCC, Mel spectrum.

- 99. bit.ly/MMM2019 @DocXavi 99 Speech Enhancement Pascual, Santiago, Antonio Bonafonte, and Joan Serra. "SEGAN: Speech enhancement generative adversarial network." Interspeech 2017.

- 101. bit.ly/MMM2019 @DocXavi 101 Outline 1. Motivation 2. Deep Neural Topologies 3. Multimedia Encoding and Decoding 4. Multimodal Architectures a. Cross-modal b. Self-supervised Learning c. Multimodal (input) d. Multi-task (output)

- 104. bit.ly/MMM2019 @DocXavi 104 Automatic Speech Recognition (ASR) Slide: Hannun, Awni. "Sequence Modeling with CTC." Distill 2.11 (2017): e8.

- 105. Automatic Speech Recognition (ASR). The Connectionist Temporal Classification (CTC) loss allows training RNNs with no need for an exact alignment. ● It avoids the need for alignment between input and output sequences by predicting an additional “_” blank token. ● Before computing the loss, repeated tokens are merged and blank tokens are removed. Graves et al. Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks. ICML 2006. Figure: Hannun, Awni. "Sequence Modeling with CTC." Distill 2.11 (2017): e8.
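
The collapsing rule in the second bullet can be sketched in a few lines: first merge consecutive repeats, then drop the blanks. This covers only the mapping from a frame-level path to an output sequence, not the CTC loss itself; the function name and the example path are illustrative.

```python
from itertools import groupby


def ctc_collapse(path, blank="_"):
    """Merge consecutive repeated symbols, then remove blank symbols."""
    merged = [symbol for symbol, _ in groupby(path)]
    return [symbol for symbol in merged if symbol != blank]


# One frame-level prediction per time step (hypothetical network output).
path = "hh_e_ll_lo__"
print("".join(ctc_collapse(path)))  # hello
```

The blank between the two groups of "l" is what allows the double letter in "hello" to survive the merging step.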

- 106. bit.ly/MMM2019 @DocXavi 106 Automatic Speech Recognition (ASR) Sequence of spectrograms

- 107. Automatic Speech Recognition (ASR). Baidu Research (34 authors), “Deep Speech 2: End-to-end Speech Recognition in English and Mandarin”, arXiv:1512.02595 (Dec 2015)

- 108. bit.ly/MMM2019 @DocXavi 108 Automatic Speech Speller w/Attention Chan, William, Navdeep Jaitly, Quoc Le, and Oriol Vinyals. "Listen, attend and spell: A neural network for large vocabulary conversational speech recognition." ICASSP 2016. #LAS Listener (encoder) Speller (decoder)

- 109. Automatic Speech Speller w/ Attention. Chris Olah & Shan Carter, “Attention and Augmented Recurrent Neural Networks” (Google Brain 2016)

- 111. bit.ly/MMM2019 @DocXavi 111 Speech Synthesis Oord, Aaron van den, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, and Koray Kavukcuoglu. "Wavenet: A generative model for raw audio." arXiv preprint arXiv:1609.03499 (2016).

- 112. bit.ly/MMM2019 @DocXavi 112 Speech Synthesis Prenger, Ryan, Rafael Valle, and Bryan Catanzaro. "WaveGlow: A Flow-based Generative Network for Speech Synthesis." arXiv preprint arXiv:1811.00002 (2018).

- 114. bit.ly/MMM2019 @DocXavi 114 Vinyals, Oriol, Alexander Toshev, Samy Bengio, and Dumitru Erhan. "Show and tell: A neural image caption generator." CVPR 2015. Image Captioning

- 115. bit.ly/MMM2019 @DocXavi 115 Image Captioning (Slides by Marc Bolaños): Karpathy, Andrej, and Li Fei-Fei. "Deep visual-semantic alignments for generating image descriptions." CVPR 2015 #DeepImageSent

- 116. bit.ly/MMM2019 @DocXavi 116 Captioning: Show, Attend & Tell Xu, Kelvin, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron C. Courville, Ruslan Salakhutdinov, Richard S. Zemel, and Yoshua Bengio. "Show, Attend and Tell: Neural Image Caption Generation with Visual Attention." ICML 2015

- 117. bit.ly/MMM2019 @DocXavi 117 Captioning: Show, Attend & Tell Xu, Kelvin, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron C. Courville, Ruslan Salakhutdinov, Richard S. Zemel, and Yoshua Bengio. "Show, Attend and Tell: Neural Image Caption Generation with Visual Attention." ICML 2015

- 118. bit.ly/MMM2019 @DocXavi 118 Johnson, Justin, Andrej Karpathy, and Li Fei-Fei. "Densecap: Fully convolutional localization networks for dense captioning." CVPR 2016 Captioning (+ Detection): DenseCap

- 119. Captioning (+ Detection): DenseCap. XAVI: “man has short hair”, “man with short hair”. AMAIA: “a woman wearing a black shirt”. BOTH: “two men wearing black glasses”. Johnson, Justin, Andrej Karpathy, and Li Fei-Fei. "Densecap: Fully convolutional localization networks for dense captioning." CVPR 2016

- 120. bit.ly/MMM2019 @DocXavi 120 Johnson, Justin, Andrej Karpathy, and Li Fei-Fei. "Densecap: Fully convolutional localization networks for dense captioning." CVPR 2016 Captioning (+ Detection): DenseCap

- 121. Captioning: Video. Jeffrey Donahue, Lisa Anne Hendricks, Sergio Guadarrama, Marcus Rohrbach, Subhashini Venugopalan, Kate Saenko, Trevor Darrell. Long-term Recurrent Convolutional Networks for Visual Recognition and Description, CVPR 2015.

- 122. Captioning: Video. Pingbo Pan, Zhongwen Xu, Yi Yang, Fei Wu, Yueting Zhuang. Hierarchical Recurrent Neural Encoder for Video Representation with Application to Captioning, CVPR 2016. Figure labels: image frames over time from t = 1 to t = T, first chunk of data, LSTM unit (2nd layer), hidden state at t = T. (Slides by Marc Bolaños)

- 123. bit.ly/MMM2019 @DocXavi 123 Sign Language Translation Camgoz, Necati Cihan, et al. Neural Sign Language Translation. CVPR 2018.

- 124. bit.ly/MMM2019 @DocXavi 124 Assael, Yannis M., Brendan Shillingford, Shimon Whiteson, and Nando de Freitas. "LipNet: End-to-End Sentence-level Lipreading." (2016).

- 125. bit.ly/MMM2019 @DocXavi 125 Lip Reading Assael, Yannis M., Brendan Shillingford, Shimon Whiteson, and Nando de Freitas. "LipNet: End-to-End Sentence-level Lipreading." (2016).

- 126. bit.ly/MMM2019 @DocXavi 126 Chung, Joon Son, Andrew Senior, Oriol Vinyals, and Andrew Zisserman. "Lip reading sentences in the wild." CVPR 2017

- 127. bit.ly/MMM2019 @DocXavi 127 Lipreading: Watch, Listen, Attend & Spell Audio features Image features Chung, Joon Son, Andrew Senior, Oriol Vinyals, and Andrew Zisserman. "Lip reading sentences in the wild." CVPR 2017

- 128. bit.ly/MMM2019 @DocXavi 128 Lipreading: Watch, Listen, Attend & Spell Chung, Joon Son, Andrew Senior, Oriol Vinyals, and Andrew Zisserman. "Lip reading sentences in the wild." CVPR 2017 Attention over output states from audio and video is computed at each timestep

- 130. bit.ly/MMM2019 @DocXavi 130 Reed, Scott, Zeynep Akata, Xinchen Yan, Lajanugen Logeswaran, Bernt Schiele, and Honglak Lee. "Generative adversarial text to image synthesis." ICML 2016. Text-to-Image

- 131. bit.ly/MMM2019 @DocXavi 131 Reed, Scott, Zeynep Akata, Xinchen Yan, Lajanugen Logeswaran, Bernt Schiele, and Honglak Lee. "Generative adversarial text to image synthesis." ICML 2016. Text-to-Image

- 132. bit.ly/MMM2019 @DocXavi 132 Text-to-Image Salvador, Amaia, Michal Drozdzal, Xavier Giro-i-Nieto, and Adriana Romero. "Inverse Cooking: Recipe Generation from Food Images." arXiv preprint arXiv:1812.06164 (2018).

- 134. bit.ly/MMM2019 @DocXavi 134 Self-supervised Feature Learning Owens, Andrew, Jiajun Wu, Josh H. McDermott, William T. Freeman, and Antonio Torralba. "Ambient sound provides supervision for visual learning." ECCV 2016 Based on the assumption that ambient sound in video is related to the visual semantics.

- 135. bit.ly/MMM2019 @DocXavi 135 Self-supervised Feature Learning Owens, Andrew, Jiajun Wu, Josh H. McDermott, William T. Freeman, and Antonio Torralba. "Ambient sound provides supervision for visual learning." ECCV 2016 Use videos to train a CNN that predicts the audio statistics of a frame.

- 136. Self-supervised Feature Learning. Task: Use the predicted audio stats to cluster images. Audio clusters are built with K-means over the training set. Cluster assignments at test time (one row = one cluster). Owens, Andrew, Jiajun Wu, Josh H. McDermott, William T. Freeman, and Antonio Torralba. "Ambient sound provides supervision for visual learning." ECCV 2016

- 137. bit.ly/MMM2019 @DocXavi 137 Self-supervised Feature Learning Owens, Andrew, Jiajun Wu, Josh H. McDermott, William T. Freeman, and Antonio Torralba. "Ambient sound provides supervision for visual learning." ECCV 2016 Although the CNN was not trained with class labels, local units with semantic meaning emerge.

- 138. bit.ly/MMM2019 @DocXavi 138 Video Sonorization Owens, Andrew, Phillip Isola, Josh McDermott, Antonio Torralba, Edward H. Adelson, and William T. Freeman. "Visually indicated sounds." CVPR 2016. Retrieve matching sounds for videos of people hitting objects with a drumstick.

- 139. bit.ly/MMM2019 @DocXavi 139 Video Sonorization Owens, Andrew, Phillip Isola, Josh McDermott, Antonio Torralba, Edward H. Adelson, and William T. Freeman. "Visually indicated sounds." CVPR 2016. The Greatest Hits Dataset

- 140. bit.ly/MMM2019 @DocXavi 140 Video Sonorization Owens, Andrew, Phillip Isola, Josh McDermott, Antonio Torralba, Edward H. Adelson, and William T. Freeman. "Visually indicated sounds." CVPR 2016. Audio Clip Retrieval Not end-to-end

- 141. bit.ly/MMM2019 @DocXavi 141 Owens, Andrew, Phillip Isola, Josh McDermott, Antonio Torralba, Edward H. Adelson, and William T. Freeman. "Visually indicated sounds." CVPR 2016.

- 143. bit.ly/MMM2019 @DocXavi 143 Speech Reconstruction Ephrat, Ariel, and Shmuel Peleg. "Vid2speech: speech reconstruction from silent video." ICASSP 2017. CNN (VGG) Frame from a silent video Audio feature Post-hoc synthesis

- 144. bit.ly/MMM2019 @DocXavi 144 Speech Reconstruction Ephrat, Ariel, Tavi Halperin, and Shmuel Peleg. "Improved speech reconstruction from silent video." In ICCV 2017 Workshop on Computer Vision for Audio-Visual Media. 2017.

- 145. Ephrat, Ariel, Tavi Halperin, and Shmuel Peleg. "Improved speech reconstruction from silent video." In ICCV Workshop on Computer Vision for Audio-Visual Media. 2017.

- 147. Speech to Pixels. Amanda Duarte, Francisco Roldan, Miquel Tubau, Janna Escur, Santiago Pascual, Amaia Salvador, Eva Mohedano et al. “Wav2Pix: Speech-conditioned Face Generation using Generative Adversarial Networks” (work in progress)

- 148. Speech to Pixels. Generated faces from known identities. Amanda Duarte, Francisco Roldan, Miquel Tubau, Janna Escur, Santiago Pascual, Amaia Salvador, Eva Mohedano et al. “Wav2Pix: Speech-conditioned Face Generation using Generative Adversarial Networks” (work in progress)

- 149. Speech to Pixels. Faces from average speeches. Amanda Duarte, Francisco Roldan, Miquel Tubau, Janna Escur, Santiago Pascual, Amaia Salvador, Eva Mohedano et al. “Wav2Pix: Speech-conditioned Face Generation using Generative Adversarial Networks” (work in progress)

- 150. Speech to Pixels. Interpolated faces from interpolated speeches. Amanda Duarte, Francisco Roldan, Miquel Tubau, Janna Escur, Santiago Pascual, Amaia Salvador, Eva Mohedano et al. “Wav2Pix: Speech-conditioned Face Generation using Generative Adversarial Networks” (work in progress)

- 151. bit.ly/MMM2019 @DocXavi 151 Outline 1. Motivation 2. Deep Neural Topologies 3. Multimedia Encoding and Decoding 4. Multimodal Architectures a. Cross-modal b. Joint Representations (embeddings) c. Multimodal (input) d. Multi-task (output)

- 154. bit.ly/MMM2019 @DocXavi 154 Joint Representations (Embeddings) Frome, Andrea, Greg S. Corrado, Jon Shlens, Samy Bengio, Jeff Dean, and Tomas Mikolov. "Devise: A deep visual-semantic embedding model." NIPS 2013

- 155. Zero-shot learning. No images from “cat” in the training set, but they can still be recognised as “cats” thanks to the representations learned from text. Socher, R., Ganjoo, M., Manning, C. D., & Ng, A., Zero-shot learning through cross-modal transfer. NIPS 2013

- 156. bit.ly/MMM2019 @DocXavi 156 Multimodal Retrieval Aytar, Yusuf, Lluis Castrejon, Carl Vondrick, Hamed Pirsiavash, and Antonio Torralba. "Cross-Modal Scene Networks." CVPR 2016.

- 157. bit.ly/MMM2019 @DocXavi 157 Multimodal Retrieval Aytar, Yusuf, Lluis Castrejon, Carl Vondrick, Hamed Pirsiavash, and Antonio Torralba. "Cross-Modal Scene Networks." CVPR 2016.

- 158. bit.ly/MMM2019 @DocXavi 158 Multimodal Retrieval Amaia Salvador, Nicholas Haynes, Yusuf Aytar, Javier Marín, Ferda Ofli, Ingmar Weber, Antonio Torralba, “Learning Cross-modal Embeddings for Cooking Recipes and Food Images”. CVPR 2017 #pic2recipe

- 160. bit.ly/MMM2019 @DocXavi 160 Multimodal Retrieval Amanda Duarte, Dídac Surís, Amaia Salvador, Jordi Torres, and Xavier Giró-i-Nieto. "Cross-modal Embeddings for Video and Audio Retrieval." ECCV Women in Computer Vision Workshop 2018. Best match Audio feature

- 161. bit.ly/MMM2019 @DocXavi 161 Multimodal Retrieval Best match Visual feature Audio feature Amanda Duarte, Dídac Surís, Amaia Salvador, Jordi Torres, and Xavier Giró-i-Nieto. "Cross-modal Embeddings for Video and Audio Retrieval." ECCV Women in Computer Vision Workshop 2018.

- 162. bit.ly/MMM2019 @DocXavi 162 Feature Learning by Label Transfer Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "Soundnet: Learning sound representations from unlabeled video." NIPS 2016. Teacher network: Visual Recognition (object & scenes)

- 163. bit.ly/MMM2019 @DocXavi 163 Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "Soundnet: Learning sound representations from unlabeled video." NIPS 2016.

- 164. bit.ly/MMM2019 @DocXavi 164 Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "Soundnet: Learning sound representations from unlabeled video." NIPS 2016. Learned audio features are good for environmental sound recognition. Feature Learning by Label Transfer

- 165. bit.ly/MMM2019 @DocXavi 165 Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "Soundnet: Learning sound representations from unlabeled video." NIPS 2016. Learned audio features are good for environmental sound recognition. Feature Learning by Label Transfer

- 166. bit.ly/MMM2019 @DocXavi 166 Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "Soundnet: Learning sound representations from unlabeled video." NIPS 2016. Visualization of the 1D filters over raw audio in conv1. Feature Learning by Label Transfer

- 167. bit.ly/MMM2019 @DocXavi 167 Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "Soundnet: Learning sound representations from unlabeled video." NIPS 2016. Visualization of the 1D filters over raw audio in conv1. Feature Learning by Label Transfer

- 168. bit.ly/MMM2019 @DocXavi 168 Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "Soundnet: Learning sound representations from unlabeled video." NIPS 2016. Visualize video frames that mostly activate a neuron in a late layer (conv7) Feature Learning by Label Transfer

- 169. bit.ly/MMM2019 @DocXavi 169 Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "Soundnet: Learning sound representations from unlabeled video." NIPS 2016. Visualize video frames that mostly activate a neuron in a late layer (conv7) Feature Learning by Label Transfer

- 170. bit.ly/MMM2019 @DocXavi 170 Cross-modal Label Transfer S Albanie, A Nagrani, A Vedaldi, A Zisserman, “Emotion Recognition in Speech using Cross-Modal Transfer in the Wild” ACM Multimedia 2018. Teacher network: Facial Emotion Recognition (visual)

- 171. Joint Feature Learning. Korbar, Bruno, Du Tran, and Lorenzo Torresani. "Cooperative Learning of Audio and Video Models from Self-Supervised Synchronization." NIPS 2018. #AVTS #selfsupervision

- 174. Joint Feature Learning. Arandjelović, Relja, and Andrew Zisserman. "Look, Listen and Learn." ICCV 2017. #selfsupervision

- 175. Joint Feature Learning. Most activated unit in the pool4 layer of the visual network. Arandjelović, Relja, and Andrew Zisserman. "Look, Listen and Learn." ICCV 2017.

- 176. Joint Feature Learning. Visual features used to train a linear classifier on ImageNet. Arandjelović, Relja, and Andrew Zisserman. "Look, Listen and Learn." ICCV 2017.

- 177. Joint Feature Learning. Most activated unit in the pool4 layer of the audio network. Arandjelović, Relja, and Andrew Zisserman. "Look, Listen and Learn." ICCV 2017.

- 178. Joint Feature Learning. Audio features achieve state-of-the-art performance. Arandjelović, Relja, and Andrew Zisserman. "Look, Listen and Learn." ICCV 2017.

- 179. Sound Source Localization. Arandjelović, Relja, and Andrew Zisserman. "Objects that Sound." ECCV 2018. #selfsupervision

- 180. Arandjelović, Relja, and Andrew Zisserman. "Objects that Sound." ECCV 2018.

- 181. Sound Source Localization. Senocak, Arda, Tae-Hyun Oh, Junsik Kim, Ming-Hsuan Yang, and In So Kweon. "Learning to Localize Sound Source in Visual Scenes." CVPR 2018.

- 182. Senocak, Arda, Tae-Hyun Oh, Junsik Kim, Ming-Hsuan Yang, and In So Kweon. "Learning to Localize Sound Source in Visual Scenes." CVPR 2018.

- 184. Speech Grounding (temporal). Train visual and speech networks with pairs of (non-)corresponding images and speech. Harwath, David, Antonio Torralba, and James Glass. "Unsupervised learning of spoken language with visual context." NIPS 2016. [talk]
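
Training with corresponding and non-corresponding pairs is commonly expressed as a margin ranking loss: the similarity of a matched image and spoken caption should exceed that of any mismatched pair by a margin. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: the dot-product similarity, the margin of 1.0, the use of every other item in the batch as an impostor, and the toy 2-D embeddings are all choices made here.

```python
def dot(u, v):
    """Dot-product similarity between two embedding vectors."""
    return sum(a * b for a, b in zip(u, v))


def margin_ranking_loss(image_embs, speech_embs, margin=1.0):
    """Hinge loss over a batch in which image i corresponds to speech i.

    Every other item in the batch acts as a non-corresponding (impostor)
    example, once as impostor speech and once as impostor image.
    """
    n = len(image_embs)
    loss = 0.0
    for i in range(n):
        matched = dot(image_embs[i], speech_embs[i])
        for j in range(n):
            if j == i:
                continue
            # Image i scored against impostor speech j.
            loss += max(0.0, margin - matched + dot(image_embs[i], speech_embs[j]))
            # Speech i scored against impostor image j.
            loss += max(0.0, margin - matched + dot(image_embs[j], speech_embs[i]))
    return loss


# Hypothetical embeddings produced by the two networks.
images = [[1.0, 0.0], [0.0, 1.0]]
aligned_speech = [[2.0, 0.0], [0.0, 2.0]]   # each caption matches its image
shuffled_speech = [[0.0, 2.0], [2.0, 0.0]]  # captions swapped

loss_aligned = margin_ranking_loss(images, aligned_speech)
loss_shuffled = margin_ranking_loss(images, shuffled_speech)
```

The loss is zero when matched pairs already score higher than impostors by the margin, and grows when the pairing is wrong, which is the signal that drives both networks toward a shared embedding space.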

- 185. Speech Grounding (temporal). The similarity curve shows which regions of the spectrogram are relevant for the image. Important: no text transcriptions are used during training. Harwath, David, Antonio Torralba, and James Glass. "Unsupervised learning of spoken language with visual context." NIPS 2016. [talk]

- 186. Speech Grounding (spatiotemporal). Harwath, David, Adrià Recasens, Dídac Surís, Galen Chuang, Antonio Torralba, and James Glass. "Jointly Discovering Visual Objects and Spoken Words from Raw Sensory Input." ECCV 2018.

- 187. Speech Grounding (spatiotemporal). Regions matching the spoken word “WOMAN”. Harwath, David, Adrià Recasens, Dídac Surís, Galen Chuang, Antonio Torralba, and James Glass. "Jointly Discovering Visual Objects and Spoken Words from Raw Sensory Input." ECCV 2018.

- 188. Harwath, David, Adrià Recasens, Dídac Surís, Galen Chuang, Antonio Torralba, and James Glass. "Jointly Discovering Visual Objects and Spoken Words from Raw Sensory Input." ECCV 2018.

- 189. Outline: 1. Motivation; 2. Deep Neural Topologies; 3. Multimedia Encoding and Decoding; 4. Multimodal Architectures: a. Cross-modal, b. Joint Representations (Embeddings), c. Multimodal inputs.

- 192. Visual Question Answering. Antol, Stanislaw, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, and Devi Parikh. "VQA: Visual question answering." ICCV 2015.

- 193. Visual Question Answering (VQA). Figure: two encoders produce the representations [z1, z2, …, zN] and [y1, y2, …, yM] from the image and the question “Is economic growth decreasing?”, and a decoder outputs the answer “Yes”.

- 194. Visual Question Answering (VQA). Pipeline: extract visual features from the image, embed the question, merge both representations, and predict the answer. Example question: “What object is flying?” Answer: “Kite”. Slide credit: Issey Masuda.
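
The four stages of this pipeline (visual features, question embedding, merge, answer prediction) can be sketched in a few lines. Everything below is an illustrative assumption rather than the model from the slide: the visual features are a fixed stand-in vector, the question embedding is an average of random word vectors, the merge is a concatenation, and the classifier is a single untrained linear layer over a hypothetical four-answer vocabulary, so the predicted answer is arbitrary.

```python
import random

random.seed(0)

VIS_DIM, EMB_DIM = 3, 4
ANSWERS = ["kite", "dog", "yes", "no"]  # hypothetical answer vocabulary

word_vectors = {}  # word -> vector, filled lazily with random values


def embed_question(question):
    """Average of per-word vectors; a stand-in for a learned text encoder."""
    words = question.lower().strip("?").split()
    vectors = []
    for word in words:
        if word not in word_vectors:
            word_vectors[word] = [random.uniform(-1, 1) for _ in range(EMB_DIM)]
        vectors.append(word_vectors[word])
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def merge(visual_vec, question_vec):
    """Merge step, here a plain concatenation of the two vectors."""
    return visual_vec + question_vec


# Untrained linear classifier: one weight row per candidate answer.
W = [[random.uniform(-1, 1) for _ in range(VIS_DIM + EMB_DIM)] for _ in ANSWERS]


def predict_answer(merged):
    """Score every candidate answer and return the best one with all scores."""
    scores = [sum(w * x for w, x in zip(row, merged)) for row in W]
    return ANSWERS[scores.index(max(scores))], scores


visual_features = [0.2, -0.5, 0.9]  # stand-in for CNN image features
merged = merge(visual_features, embed_question("What object is flying?"))
answer, scores = predict_answer(merged)
```

Framing answer prediction as classification over a fixed answer set keeps the decoder simple; other merge operators, such as element-wise products, fit into the same structure.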

- 195. Visual Question Answering (VQA). Figure labels: Image, Question, Answer. Masuda, Issey, Santiago Pascual de la Puente, and Xavier Giro-i-Nieto. "Open-Ended Visual Question-Answering." ETSETB UPC TelecomBCN (2016).

- 196. Visual Question Answering (VQA). Roldán, Francisco, Issey Masuda, Santiago Pascual de la Puente, and Xavier Giro-i-Nieto. "Visual Question-Answering 2.0." ETSETB UPC TelecomBCN (2017).

- 197. Visual Question Answering (VQA): Dynamic Parameter Prediction Network (DPPnet). Noh, H., P. H. Seo, and B. Han. "Image question answering using convolutional neural network with dynamic parameter prediction." CVPR 2016.

- 198. Visual Question Answering: Dynamic (Slides and Slidecast by Santi Pascual). Xiong, Caiming, Stephen Merity, and Richard Socher. "Dynamic Memory Networks for Visual and Textual Question Answering." ICML 2016.

- 199. Visual Question Answering: Grounded (Slides and Screencast by Issey Masuda). Zhu, Yuke, Oliver Groth, Michael Bernstein, and Li Fei-Fei. "Visual7W: Grounded Question Answering in Images." CVPR 2016.

- 200. Visual Reasoning. Johnson, Justin, Bharath Hariharan, Laurens van der Maaten, Li Fei-Fei, C. Lawrence Zitnick, and Ross Girshick. "CLEVR: A Diagnostic Dataset for Compositional Language and Elementary Visual Reasoning." CVPR 2017.

- 201. Visual Reasoning (Slides by Fran Roldan). Figure labels: Program Generator, Execution Engine. Johnson, Justin, Bharath Hariharan, Laurens van der Maaten, Judy Hoffman, Li Fei-Fei, C. Lawrence Zitnick, and Ross Girshick. "Inferring and Executing Programs for Visual Reasoning." ICCV 2017.

- 202. Visual Reasoning. Relation Networks concatenate all possible pairs of objects with an encoded question, and an MLP then finds the answer. Santoro, Adam, David Raposo, David G. Barrett, Mateusz Malinowski, Razvan Pascanu, Peter Battaglia, and Timothy Lillicrap. "A simple neural network module for relational reasoning." NIPS 2017.
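
The Relation Network computation described above can be sketched as follows: each pair of object vectors is concatenated with the question encoding and passed through a shared function `g`, the per-pair outputs are summed, and a second function `f` maps the pooled vector to answer scores. This is a minimal sketch under stated assumptions: `g` and `f` are single random, untrained linear layers instead of full MLPs, and all dimensions and input vectors are hypothetical.

```python
import itertools
import random

random.seed(0)

OBJ_DIM, Q_DIM, HIDDEN, N_ANSWERS = 3, 2, 5, 4


def linear(in_dim, out_dim):
    """Return a random, untrained linear map as a closure (stand-in for an MLP)."""
    weights = [[random.uniform(-0.5, 0.5) for _ in range(in_dim)]
               for _ in range(out_dim)]

    def apply(x):
        return [sum(w * v for w, v in zip(row, x)) for row in weights]

    return apply


def relu(x):
    return [max(0.0, v) for v in x]


g = linear(2 * OBJ_DIM + Q_DIM, HIDDEN)  # shared function over object pairs
f = linear(HIDDEN, N_ANSWERS)            # maps pooled relations to answer scores


def relation_network(objects, question):
    """Sum g([o_i, o_j, q]) over all ordered pairs, then apply f."""
    pooled = [0.0] * HIDDEN
    for o_i, o_j in itertools.product(objects, repeat=2):
        relation = relu(g(o_i + o_j + question))  # list concatenation
        pooled = [p + r for p, r in zip(pooled, relation)]
    return f(pooled)


# Hypothetical object features (e.g. CNN feature-map cells) and question code.
objects = [[0.1, 0.2, 0.3], [0.9, -0.4, 0.0], [-0.3, 0.8, 0.5]]
question = [1.0, -1.0]
answer_scores = relation_network(objects, question)
```

Because the pair outputs are summed, the result does not depend on the order in which the objects are listed, which is why no explicit object ordering has to be learned.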

- 203. Multimodal Machine Translation. Challenge on Multimodal Image Translation: http://www.statmt.org/wmt17/multimodal-task.html#task1

- 205. Speech Separation with Vision (lips). Afouras, Triantafyllos, Joon Son Chung, and Andrew Zisserman. "The Conversation: Deep Audio-Visual Speech Enhancement." Interspeech 2018.

- 206. Afouras, Triantafyllos, Joon Son Chung, and Andrew Zisserman. "The Conversation: Deep Audio-Visual Speech Enhancement." Interspeech 2018.

- 208. Visual Re-dubbing (pixels). Chung, Joon Son, Amir Jamaludin, and Andrew Zisserman. "You said that?" BMVC 2017. #speech2vid

- 209. Chung, Joon Son, Amir Jamaludin, and Andrew Zisserman. "You said that?" BMVC 2017. #speech2vid

- 210. Visual Re-dubbing (pixels). Chen, Lele, Zhiheng Li, Ross K. Maddox, Zhiyao Duan, and Chenliang Xu. "Lip Movements Generation at a Glance." ECCV 2018.

- 211. Chen, Lele, Zhiheng Li, Ross K. Maddox, Zhiyao Duan, and Chenliang Xu. "Lip Movements Generation at a Glance." ECCV 2018.

- 212. Visual Re-dubbing (pixels). Adversarial losses at frame (spatial) and sequence (temporal) scales. Vougioukas, Konstantinos, Stavros Petridis, and Maja Pantic. "End-to-End Speech-Driven Facial Animation with Temporal GANs." arXiv preprint arXiv:1805.09313 (2018).

- 213. Vougioukas, Konstantinos, Stavros Petridis, and Maja Pantic. "End-to-End Speech-Driven Facial Animation with Temporal GANs." arXiv preprint arXiv:1805.09313 (2018).

- 214. Visual Re-dubbing (lip keypoints). Suwajanakorn, Supasorn, Steven M. Seitz, and Ira Kemelmacher-Shlizerman. "Synthesizing Obama: learning lip sync from audio." SIGGRAPH 2017.

- 215. Karras, Tero, Timo Aila, Samuli Laine, Antti Herva, and Jaakko Lehtinen. "Audio-driven facial animation by joint end-to-end learning of pose and emotion." SIGGRAPH 2017.

- 216. Visual Re-dubbing (3D meshes). Karras, Tero, Timo Aila, Samuli Laine, Antti Herva, and Jaakko Lehtinen. "Audio-driven facial animation by joint end-to-end learning of pose and emotion." SIGGRAPH 2017.

- 217. Karras, Tero, Timo Aila, Samuli Laine, Antti Herva, and Jaakko Lehtinen. "Audio-driven facial animation by joint end-to-end learning of pose and emotion." SIGGRAPH 2017.

- 218. Outline: 1. Motivation; 2. Deep Neural Topologies; 3. Multimedia Encoding and Decoding; 4. Multimodal Architectures: a. Cross-modal, b. Joint Representations (Embeddings), c. Multimodal inputs.

- 220. Deep Learning online courses by UPC: MSc course [2017] [2018]; BSc course [2018] [2019]; 1st edition (2016), 2nd edition (2017), 3rd edition (2018), 4th edition (2019); 1st edition (2017), 2nd edition (2018). BSc course 22 to 29 January 2019. Registrations open for Spring 2019 (Speech) / Summer 2019 (NLP). Registration open for 2019.

- 221. Training for professionals. Course starts in February 2019.

- 222. Deep Learning & AI in Barcelona: deeplearning.barcelona, bcn.ai

- 223. PhD opening at BSC on Multimodal [PhD in Multimodal Deep Reinforcement Learning]

- 224. Our team at UPC-BSC Barcelona: Victor Campos, Amaia Salvador, Amanda Duarte, Dèlia Fernández, Eduard Ramon, Andreu Girbau, Dani Fojo, Oscar Mañas, Santi Pascual, Xavi Giró, Miriam Bellver, Janna Escur, Carles Ventura, Miquel Tubau, Paula Gómez, Benet Oriol, Mariona Carós, Jordi Torres.

- 225. Xavier Giro-i-Nieto (@DocXavi, xavier.giro@upc.edu). Slides available in 24 hours at: http://bit.ly/mmm2019-xavigiro #MMM2019 Suggestions for improving this tutorial (refs, case studies…)?