IEEE Projects 2017 for CSE (Trichy, Chennai)

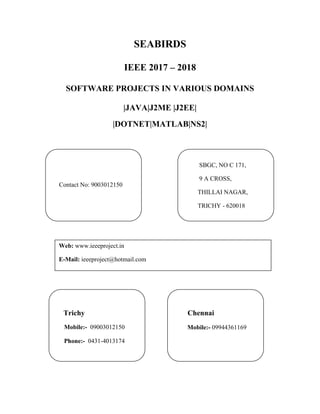

- 1. SEABIRDS IEEE 2017 – 2018 SOFTWARE PROJECTS IN VARIOUS DOMAINS |JAVA|J2ME |J2EE| |DOTNET|MATLAB|NS2| Chennai Mobile:- 09944361169 Trichy Mobile:- 09003012150 Phone:- 0431-4013174 Web: www.ieeeproject.in E-Mail: ieeeproject@hotmail.com SBGC, NO C 171, 9 A CROSS, THILLAI NAGAR, TRICHY - 620018 Contact No: 9003012150

- 2. SBGC provides IEEE 2017-2018 projects for all Final Year Students. We assist students with Technical Guidance in two categories. Category 1: Students with new project ideas / New or Old IEEE Papers. Category 2: Students selecting from our project list. When you register for a project we ensure that the project is implemented to your fullest satisfaction and you have a thorough understanding of every aspect of the project. SEABIRDS PROVIDES YOU THE LATEST IEEE 2017 PROJECTS / IEEE 2018 PROJECTS FOR FOLLOWING DEPARTMENT STUDENTS B.E, B.TECH, M.TECH, M.E, DIPLOMA, MS, BSC, MSC, BCA, MCA, MBA, BBA, PHD, B.E (ECE, EEE, E&I, ICE, MECH, PROD, CSE, IT, THERMAL, AUTOMOBILE, MECHATRONICS, ROBOTICS) B.TECH(ECE, MECHATRONICS, E&I, EEE, MECH, CSE, IT, ROBOTICS) M.TECH(EMBEDDED SYSTEMS, COMMUNICATION SYSTEMS, POWER ELECTRONICS, COMPUTER SCIENCE, SOFTWARE ENGINEERING, APPLIED ELECTRONICS, VLSI Design) M.E(EMBEDDED SYSTEMS, COMMUNICATION SYSTEMS, POWER ELECTRONICS, COMPUTER SCIENCE, SOFTWARE ENGINEERING, APPLIED ELECTRONICS, VLSI Design) DIPLOMA (CE, EEE, E&I, ICE, MECH, PROD, CSE, IT)

- 3. MBA (HR, FINANCE, MANAGEMENT, OPERATION MANAGEMENT, SYSTEM MANAGEMENT, PROJECT MANAGEMENT, HOSPITAL MANAGEMENT, EDUCATION MANAGEMENT, MARKETING MANAGEMENT, TECHNOLOGY MANAGEMENT) We also have training and project, R & D division to serve the students and make them job oriented professionals. PROJECT SUPPORT AND DELIVERABLES Project Abstract IEEE PAPER IEEE Reference Papers, Materials & Books in CD PPT / Review Material Project Report (All Diagrams & Screen shots) Working Procedures Algorithm Explanations Project Installation in Laptops Project Certificate

- 4. DATA MINING (JAVA) 1. Enabling Kernel-Based Attribute-Aware Matrix Factorization for Rating Prediction In recommender systems, one key task is to predict the personalized rating of a user to a new item and then return the new items having the top predicted ratings to the user. Recommender systems usually apply collaborative filtering techniques (e.g., matrix factorization) over a sparse user-item rating matrix to make rating predictions. However, the collaborative filtering techniques are severely affected by the data sparsity of the underlying user-item rating matrix and often confront the cold-start problems for new items and users. Since the attributes of items and social links between users become increasingly accessible on the Internet, this paper exploits the rich attributes of items and social links of users to alleviate the rating sparsity effect and tackle the cold-start problems. Specifically, we first propose a Kernel-based Attribute-aware Matrix Factorization model called KAMF to integrate the attribute information of items into matrix factorization. KAMF can discover the nonlinear interactions among attributes, users, and items, which mitigates the rating sparsity effect and deals with the cold-start problem for new items by nature. Further, we extend KAMF to address the cold-start problem for new users by utilizing the social links between users. Finally, we conduct a comprehensive performance evaluation for KAMF using two large-scale real-world data sets recently released by Yelp and MovieLens. Experimental results show that KAMF achieves significantly superior performance against other state-of-the-art rating prediction techniques.
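
As a point of reference for the abstract above, the following is a minimal sketch of plain matrix factorization for rating prediction, the baseline technique that KAMF builds on. It is not the KAMF model itself: it uses no kernels, item attributes, or social links. The toy ratings, the hyperparameters, and the names `train_mf`, `predict`, and `rmse` are all assumptions made for illustration.

```python
import random


def predict(P, Q, user, item):
    """Predicted rating = dot product of the user and item latent vectors."""
    return sum(p * q for p, q in zip(P[user], Q[item]))


def rmse(P, Q, ratings):
    """Root-mean-square error over the observed (user, item, rating) triples."""
    total = sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings)
    return (total / len(ratings)) ** 0.5


def init_factors(n_rows, k, rng):
    """Small random latent vectors (hypothetical initialization scheme)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_rows)]


def train_mf(ratings, P, Q, lr=0.02, reg=0.02, epochs=1000):
    """Stochastic gradient descent on the regularized squared error."""
    k = len(P[0])
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


# Toy sparse rating matrix: (user, item, rating). Values are made up.
RATINGS = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 2), (2, 2, 5)]

rng = random.Random(0)
P = init_factors(3, 2, rng)
Q = init_factors(3, 2, rng)
error_before = rmse(P, Q, RATINGS)
train_mf(RATINGS, P, Q)
error_after = rmse(P, Q, RATINGS)
```

The unobserved cells, such as user 0 and item 2, can then be scored with `predict(P, Q, 0, 2)`. Their quality depends entirely on how dense the observed ratings are, which is the sparsity problem the abstract describes.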

- 5. 2. Query Expansion with Enriched User Profiles for Personalized Search Utilizing Folksonomy Data Abstract: Query expansion has been widely adopted in Web search as a way of tackling the ambiguity of queries. Personalized search utilizing folksonomy data has demonstrated an extreme vocabulary mismatch problem that requires even more effective query expansion methods. Co-occurrence statistics, tag-tag relationships, and semantic matching approaches are among those favored by previous research. However, user profiles which only contain a user’s past annotation information may not be enough to support the selection of expansion terms, especially for users with limited previous activity with the system. We propose a novel model to construct enriched user profiles with the help of an external corpus for personalized query expansion. Our model integrates the current state-of-the-art text representation learning framework, known as word embeddings, with topic models in two groups of pseudo-aligned documents. Based on user profiles, we build two novel query expansion techniques. These two techniques are based on topical weights-enhanced word embeddings, and the topical relevance between the query and the terms inside a user profile, respectively. The results of an in-depth experimental evaluation, performed on two real-world datasets using different external corpora, show that our approach outperforms traditional techniques, including existing non-personalized and personalized query expansion methods.

- 6. 3. Collaboratively Training Sentiment Classifiers for Multiple Domains We propose a collaborative multi-domain sentiment classification approach to train sentiment classifiers for multiple domains simultaneously. In our approach, the sentiment information in different domains is shared to train more accurate and robust sentiment classifiers for each domain when labeled data is scarce. Specifically, we decompose the sentiment classifier of each domain into two components, a global one and a domain-specific one. The global model can capture the general sentiment knowledge and is shared by various domains. The domain-specific model can capture the specific sentiment expressions in each domain. In addition, we extract domain-specific sentiment knowledge from both labeled and unlabeled samples in each domain and use it to enhance the learning of domain-specific sentiment classifiers. Besides, we incorporate the similarities between domains into our approach as regularization over the domain-specific sentiment classifiers to encourage the sharing of sentiment information between similar domains. Two kinds of domain similarity measures are explored, one based on textual content and the other one based on sentiment expressions. Moreover, we introduce two efficient algorithms to solve the model of our approach. Experimental results on benchmark datasets show that our approach can effectively improve the performance of multi-domain sentiment classification and significantly outperform baseline methods.

- 7. 4. Energy-Efficient Query Processing in Web Search Engines Web search engines are composed of thousands of query processing nodes, i.e., servers dedicated to processing user queries. So many servers consume a significant amount of energy, mostly attributable to their CPUs, but they are necessary to ensure low latencies, since users expect sub-second response times (e.g., 500 ms). However, users can hardly notice response times that are faster than their expectations. Hence, we propose the Predictive Energy Saving Online Scheduling Algorithm (PESOS) to select the most appropriate CPU frequency to process a query on a per-core basis. PESOS aims to process queries by their deadlines, and leverages high-level scheduling information to reduce the CPU energy consumption of a query processing node. PESOS bases its decision on query efficiency predictors, estimating the processing volume and processing time of a query. We experimentally evaluate PESOS upon the TREC ClueWeb09B collection and the MSN2006 query log. Results show that PESOS can reduce the CPU energy consumption of a query processing node by up to ~48 percent compared to a system running at maximum CPU core frequency. PESOS also outperforms the best state-of-the-art competitor with a ~20 percent energy saving, while the competitor requires fine parameter tuning and may incur uncontrollable latency violations.
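
The core idea in the abstract above, running a query at the lowest CPU frequency that still meets its deadline, can be sketched as follows. This is not the PESOS algorithm: it assumes a single query, a predicted cost expressed in CPU cycles, and a simple model in which processing time equals cycles divided by frequency. The function name `pick_frequency` and the frequency list are hypothetical.

```python
def pick_frequency(frequencies_hz, predicted_cycles, deadline_s):
    """Return the lowest frequency whose estimated time meets the deadline.

    Assumes time = predicted_cycles / frequency (an illustrative model only).
    Falls back to the highest frequency when no setting can meet the deadline.
    """
    for freq in sorted(frequencies_hz):
        if predicted_cycles / freq <= deadline_s:
            return freq
    return max(frequencies_hz)


# Hypothetical per-core frequency steps, in Hz.
FREQUENCIES = [0.8e9, 1.6e9, 2.4e9, 3.5e9]

light_query = pick_frequency(FREQUENCIES, predicted_cycles=1.0e8, deadline_s=0.5)
medium_query = pick_frequency(FREQUENCIES, predicted_cycles=1.0e9, deadline_s=0.5)
heavy_query = pick_frequency(FREQUENCIES, predicted_cycles=5.0e9, deadline_s=0.5)
```

Under these assumptions the light query runs at the slowest step and the heavy query falls back to the fastest one, since no available frequency meets its deadline.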

- 8. 5. A Scalable Data Chunk Similarity Based Compression Approach for Efficient Big Sensing Data Processing on Cloud Big sensing data is prevalent in both industry and scientific research applications where the data is generated with high volume and velocity. Cloud computing provides a promising platform for big sensing data processing and storage as it provides a flexible stack of massive computing, storage, and software services in a scalable manner. Current big sensing data processing on Cloud has adopted some data compression techniques. However, due to the high volume and velocity of big sensing data, traditional data compression techniques lack sufficient efficiency and scalability for data processing. Based on specific on-Cloud data compression requirements, we propose a novel scalable data compression approach based on calculating similarity among the partitioned data chunks. Instead of compressing basic data units, the compression will be conducted over partitioned data chunks. To restore original data sets, some restoration functions and predictions will be designed. MapReduce is used for algorithm implementation to achieve extra scalability on Cloud. With real world meteorological big sensing data experiments on U-Cloud platform, we demonstrate that the proposed scalable compression approach based on data chunk similarity can significantly improve data compression efficiency with affordable data accuracy loss.

- 9. 6. User-Centric Similarity Search User preferences play a significant role in market analysis. In the database literature, there has been extensive work on query primitives, such as the well known top-k query that can be used for the ranking of products based on the preferences customers have expressed. Still, the fundamental operation that evaluates the similarity between products is typically done ignoring these preferences. Instead products are depicted in a feature space based on their attributes and similarity is computed via traditional distance metrics on that space. In this work, we utilize the rankings of the products based on the opinions of their customers in order to map the products in a user-centric space where similarity calculations are performed. We identify important properties of this mapping that result in upper and lower similarity bounds, which in turn permit us to utilize conventional multidimensional indexes on the original product space in order to perform these user-centric similarity computations. We show how interesting similarity calculations that are motivated by the commonly used range and nearest neighbor queries can be performed efficiently, while pruning significant parts of the data set based on the bounds we derive on the user-centric similarity of products

- 10. 7. Computing Semantic Similarity of Concepts in Knowledge Graphs This paper presents a method for measuring the semantic similarity between concepts in Knowledge Graphs (KGs) such as WordNet and DBpedia. Previous work on semantic similarity methods has focused on either the structure of the semantic network between concepts (e.g., path length and depth), or only on the Information Content (IC) of concepts. We propose a semantic similarity method, namely wpath, to combine these two approaches, using IC to weight the shortest path length between concepts. Conventional corpus-based IC is computed from the distributions of concepts over a textual corpus, which requires preparing a domain corpus containing annotated concepts and has a high computational cost. As instances are already extracted from textual corpus and annotated by concepts in KGs, graph-based IC is proposed to compute IC based on the distributions of concepts over instances. Through experiments performed on well known word similarity datasets, we show that the wpath semantic similarity method has produced a statistically significant improvement over other semantic similarity methods. Moreover, in a real category classification evaluation, the wpath method has shown the best performance in terms of accuracy and F score.
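
To make "using IC to weight the shortest path length" concrete, here is a small sketch on a toy taxonomy. The abstract does not give the formula, so the form used here, `1 / (1 + length * k ** IC(lcs))` with `lcs` the least common subsumer and `k` in (0, 1], is an assumption, as are the taxonomy, the instance counts, and every function name.

```python
import math

# Hypothetical taxonomy (child -> parent) and made-up instance counts.
# Each count is assumed to already include the concept's descendants.
PARENT = {"animal": None, "mammal": "animal", "bird": "animal",
          "dog": "mammal", "cat": "mammal"}
COUNT = {"animal": 100, "mammal": 60, "bird": 40, "dog": 35, "cat": 25}
ROOT = "animal"


def ancestors(concept):
    """The concept followed by its chain of ancestors up to the root."""
    chain = []
    while concept is not None:
        chain.append(concept)
        concept = PARENT[concept]
    return chain


def lcs_and_path_length(a, b):
    """Least common subsumer and the number of edges on the path a -> lcs -> b."""
    chain_b = ancestors(b)
    for steps_from_a, node in enumerate(ancestors(a)):
        if node in chain_b:
            return node, steps_from_a + chain_b.index(node)
    raise ValueError("concepts share no ancestor")


def information_content(concept):
    """Graph-style IC from instance counts: -log of the concept's share."""
    return -math.log(COUNT[concept] / COUNT[ROOT])


def weighted_path_similarity(a, b, k=0.8):
    """Path-length similarity with the length down-weighted by IC of the lcs."""
    lcs, length = lcs_and_path_length(a, b)
    return 1.0 / (1.0 + length * k ** information_content(lcs))


sim_dog_cat = weighted_path_similarity("dog", "cat")
sim_dog_bird = weighted_path_similarity("dog", "bird")
```

With these toy numbers, dog and cat meet at the more specific concept `mammal`, so they score higher than dog and bird, which only meet at the root, where IC is zero and the measure reduces to plain path length.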

- 11. 8. Efficient Pattern-Based Aggregation on Sequence Data A Sequence OLAP (S-OLAP) system provides a platform on which pattern-based aggregate (PBA) queries on a sequence database are evaluated. In its simplest form, a PBA query consists of a pattern template T and an aggregate function F. A pattern template is a sequence of variables, each is defined over a domain. Each variable is instantiated with all possible values in its corresponding domain to derive all possible patterns of the template. Sequences are grouped based on the patterns they possess. The answer to a PBA query is a sequence cuboid (s-cuboid), which is a multidimensional array of cells. Each cell is associated with a pattern instantiated from the query’s pattern template. The value of each s-cuboid cell is obtained by applying the aggregate function F to the set of data sequences that belong to that cell. Since a pattern template can involve many variables and can be arbitrarily long, the induced s-cuboid for a PBA query can be huge. For most analytical tasks, however, only iceberg cells with very large aggregate values are of interest. This paper proposes an efficient approach to identifying and evaluating iceberg cells of s-cuboids. Experimental results show that our algorithms are orders of magnitude faster than existing approaches.
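
The PBA query described above can be illustrated with a brute-force sketch: instantiate a template of a given length, group sequences by the patterns they contain, and keep only the iceberg cells. It assumes that a sequence "possesses" a pattern when the pattern occurs as a contiguous run, and that the aggregate function F is COUNT. Neither detail is fixed by the abstract, and this is not the paper's efficient algorithm. The name `iceberg_cells` and the sample data are hypothetical.

```python
from collections import Counter


def iceberg_cells(sequences, template_length=2, min_count=2):
    """Count sequences per contiguous pattern; keep cells with count >= min_count."""
    cells = Counter()
    for seq in sequences:
        # A set, so each sequence contributes at most once to any cell.
        patterns = {
            tuple(seq[i:i + template_length])
            for i in range(len(seq) - template_length + 1)
        }
        cells.update(patterns)
    return {pattern: count for pattern, count in cells.items() if count >= min_count}


# Made-up event sequences, e.g. pages visited per session.
SEQUENCES = [["A", "B", "C"], ["A", "B", "A", "B"], ["B", "C"]]
result = iceberg_cells(SEQUENCES)
```

Here `result` holds the cells for `("A", "B")` and `("B", "C")`, each backed by two sequences, while `("B", "A")` is dropped. Enumerating every cell this way is exactly the cost that grows with template length and domain size.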

- 12. 9. Efficient Distance-Aware Influence Maximization in Geo-Social Networks Given a social network G and a positive integer k, the influence maximization problem aims to identify a set of k nodes in G that can maximize the influence spread under a certain propagation model. With the proliferation of geo-social networks, location-aware promotion is becoming more necessary in real applications. In this paper, we study the distance-aware influence maximization (DAIM) problem, which advocates the importance of the distance between users and the promoted location. Unlike the traditional influence maximization problem, DAIM treats users differently based on their distances from the promoted location. In this situation, the k nodes selected are different when the promoted location varies. In order to handle the large number of queries and meet the online requirement, we develop two novel index-based approaches, MIA-DA and RIS-DA, by utilizing the information over some pre-sampled query locations. MIA-DA is a heuristic method which adopts the maximum influence arborescence (MIA) model to approximate the influence calculation. In addition, different pruning strategies as well as a priority-based algorithm are proposed to significantly reduce the search space. To improve the effectiveness, in RIS-DA, we extend the reverse influence sampling (RIS) model and come up with an unbiased estimator for the DAIM problem. Through carefully analyzing the sample size needed for indexing, RIS-DA is able to return a (1 - 1/e - ε)-approximate solution with probability at least 1 - δ for any given query. Finally, we demonstrate the efficiency and effectiveness of the proposed methods over real geo-social networks.

- 13. 10. A Systematic Approach to Clustering Whole Trajectories of Mobile Objects in Road Networks Most mobile object trajectory clustering analysis to date has focused on clustering the location points or sub-trajectories extracted from trajectory data. This paper presents TRACEMOB, a systematic approach to clustering whole trajectories of mobile objects traveling in road networks. TRACEMOB as a whole trajectory clustering framework has three unique features. First, we design a quality measure for the distance between two whole trajectories. By quality, we mean that the distance measure can capture the complex characteristics of trajectories as a whole including their varying lengths and their constrained movement in the road network space. Second, we develop an algorithm that transforms whole trajectories in a road network space into multidimensional data points in a Euclidean space while preserving their relative distances in the transformed metric space. This transformation enables us to effectively shift the clustering task for whole mobile object trajectories in the complex road network space to the traditional clustering task for multidimensional data in a Euclidean space. Third, we develop a cluster validation method for evaluating the clustering quality in both the transformed metric space and the road network space. Extensive experimental evaluation with trajectories generated on real road network maps of different cities shows that TRACEMOB produces higher quality clustering results and outperforms existing approaches by an order of magnitude.

- 14. 11. Bag-of-Discriminative-Words (BoDW) Representation via Topic Modeling Many of the words in a given document either deliver facts (objective) or express opinions (subjective), depending on the topics they are involved in. For example, given a collection of documents, the word “bug” assigned to the topic “order Hemiptera” apparently denotes an object (i.e., one kind of insect), while the same word assigned to the topic “software” probably conveys a negative opinion. Motivated by the intuitive assumption that different words have varying degrees of discriminative power in delivering the objective sense or the subjective sense with respect to their assigned topics, a model named discriminatively objective-subjective LDA (dosLDA) is proposed in this paper. The essential idea underlying the proposed dosLDA is that a pair of objective and subjective selection variables are explicitly employed to encode the interplay between topics and discriminative power for the words in documents in a supervised manner. As a result, each document is appropriately represented as “bag-of-discriminative-words” (BoDW). The experiments reported on documents and images demonstrate that dosLDA not only performs competitively over traditional approaches in terms of topic modeling and document classification, but also has the ability to discern the discriminative power of each word in terms of its objective or subjective sense with respect to its assigned topic.

- 15. 12. Search Rank Fraud and Malware Detection in Google Play Fraudulent behaviors in Google Play, the most popular Android app market, fuel search rank abuse and malware proliferation. To identify malware, previous work has focused on app executable and permission analysis. In this paper, we introduce FairPlay, a novel system that discovers and leverages traces left behind by fraudsters, to detect both malware and apps subjected to search rank fraud. FairPlay correlates review activities and uniquely combines detected review relations with linguistic and behavioral signals gleaned from Google Play app data (87 K apps, 2.9 M reviews, and 2.4M reviewers, collected over half a year), in order to identify suspicious apps. FairPlay achieves over 95 percent accuracy in classifying gold standard datasets of malware, fraudulent and legitimate apps. We show that 75 percent of the identified malware apps engage in search rank fraud. FairPlay discovers hundreds of fraudulent apps that currently evade Google Bouncer’s detection technology. FairPlay also helped the discovery of more than 1,000 reviews, reported for 193 apps, that reveal a new type of “coercive” review campaign: users are harassed into writing positive reviews, and install and review other apps.

- 16. CLOUD COMPUTING (JAVA) 1. TEES: An Efficient Search Scheme over Encrypted Data on Mobile Cloud Abstract: Cloud storage provides a convenient, massive, and scalable storage at low cost, but data privacy is a major concern that prevents users from storing files on the cloud trustingly. One way of enhancing privacy from the data owner's point of view is to encrypt the files before outsourcing them onto the cloud and decrypt the files after downloading them. However, data encryption is a heavy overhead for mobile devices, and the data retrieval process incurs complicated communication between the data user and the cloud. Given the limited bandwidth capacity and limited battery life of mobile devices, these issues introduce heavy overhead to computing and communication as well as higher power consumption for mobile device users, which makes encrypted search over mobile cloud very challenging. In this paper, we propose traffic and energy saving encrypted search (TEES), a bandwidth- and energy-efficient encrypted search architecture over mobile cloud. The proposed architecture offloads the computation from mobile devices to the cloud, and we further optimize the communication between the mobile clients and the cloud. It is demonstrated that the data privacy does not degrade when the performance enhancement methods are applied. Our experiments show that TEES reduces the computation time by 23 to 46 percent and reduces the energy consumption by 35 to 55 percent per file retrieval; meanwhile, the network traffic during file retrievals is also significantly reduced.

- 17. 2. Joint Pricing and Capacity Planning in the IaaS Cloud Market In the cloud context, pricing and capacity planning are two important factors to the profit of the infrastructure-as-a-service (IaaS) providers. This paper investigates the problem of joint pricing and capacity planning in the IaaS provider market with a set of software-as-a-service (SaaS) providers, where each SaaS provider leases the virtual machines (VMs) from the IaaS providers to provide cloud-based application services to its end-users. We study two market models, one with a monopoly IaaS provider market, the other with multiple-IaaS-provider market. For the monopoly IaaS provider market, we first study the SaaS providers’ optimal decisions in terms of the amount of end-user requests to admit and the number of VMs to lease, given the resource price charged by the IaaS provider. Based on the best responses of the SaaS providers, we then derive the optimal solution to the problem of joint pricing and capacity planning to maximize the IaaS provider’s profit. Next, for the market with multiple IaaS providers, we formulate the pricing and capacity planning competition among the IaaS providers as a three-stage Stackelberg game. We explore the existence and uniqueness of Nash equilibrium, and derive the conditions under which there exists a unique Nash equilibrium. Finally, we develop an iterative algorithm to achieve the Nash equilibrium.

- 18. 3. Mathematical Programming Approach for Revenue Maximization in Cloud Federations This paper assesses the benefits of cloud federation for cloud providers. Outsourcing and insourcing are explored as means to maximize the revenues of the providers involved in the federation. An exact method using a linear integer program is proposed to optimize the partitioning of the incoming workload across the federation members. A pricing model is suggested to enable providers to set their offers dynamically and achieve highest revenues. The conditions leading to highest gains are identified and the benefits of cloud federation are quantified.

- 19. 4. kBF: Towards Approximate and Bloom Filter based Key-Value Storage for Cloud Computing Systems As one of the most popular cloud services, data storage has attracted great attention in recent research efforts. Key-value (k-v) stores have emerged as a popular option for storing and querying billions of key-value pairs. So far, existing methods have been deterministic. Providing such accuracy, however, comes at the cost of memory and CPU time. In contrast, we present an approximate k-v storage for cloud-based systems that is more compact than existing methods. The tradeoff is that it may, theoretically, return errors. Its design is based on the probabilistic data structure called “bloom filter”, where we extend the classical bloom filter to support key-value operations. We call the resulting design the kBF (key-value bloom filter). We further develop a distributed version of the kBF (d-kBF) for the unique requirements of cloud computing platforms, where multiple servers cooperate to handle a large volume of queries in a load-balancing manner. Finally, we apply the kBF to a practical problem of implementing a state machine to demonstrate how the kBF can be used as a building block for more complicated software infrastructures.
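
For readers unfamiliar with the classical Bloom filter that kBF extends, a minimal sketch follows. It covers set membership only and does not attempt the key-value extension described in the abstract. The sizing defaults, the use of salted SHA-256 as the hash family, and the class and method names are illustrative assumptions.

```python
import hashlib


class BloomFilter:
    """Classical Bloom filter: no false negatives, possible false positives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        # One byte per bit keeps the sketch simple; a real filter packs bits.
        self.bits = bytearray(num_bits)

    def _positions(self, key):
        """Derive num_hashes bit positions by salting the key with an index."""
        for salt in range(self.num_hashes):
            digest = hashlib.sha256(f"{salt}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for position in self._positions(key):
            self.bits[position] = 1

    def might_contain(self, key):
        """False means definitely absent; True means possibly present."""
        return all(self.bits[position] for position in self._positions(key))


bloom = BloomFilter()
for key in ("user:1", "user:2", "user:3"):
    bloom.add(key)
```

A lookup such as `bloom.might_contain("user:2")` is always `True` for inserted keys, while a key that was never inserted may still report `True`. That false-positive behavior is the accuracy-for-space tradeoff the abstract refers to.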

- 20. 5. Cloud-Based Utility Service Framework for Trust Negotiations Using Federated Identity Management Utility based cloud services can efficiently provide various supportive services to different service providers. Trust negotiations with federated identity management are vital for preserving privacy in open systems such as distributed collaborative systems. However, due to the large amounts of server based communications involved in trust negotiations, scalability issues prove to be less cumbersome when offloaded on to the cloud as a utility service. In this view, we propose trust based federated identity management as a cloud based utility service. The main component of this model is the trust establishment between the cloud service provider and the identity providers. We propose novel trust metrics based on the potential vulnerability to be attacked, the available security enforcements and a novel cost metric based on policy dependencies to rank the cooperativeness of identity providers. Practical use of these trust metrics is demonstrated by analyses using simulated data sets, attack history data published by MIT Lincoln Laboratory, real-life attacks and vulnerabilities extracted from the Common Vulnerabilities and Exposures (CVE) repository, and fuzzy rule based evaluations. The results of the evaluations imply the significance of the proposed trust model to support cloud based utility services to ensure reliable trust negotiations using federated identity management.

- 21. 6. On the Latency and Energy Efficiency of Distributed Storage Systems The increase in data storage and power consumption at data-centers has made it imperative to design energy efficient distributed storage systems (DSS). The energy efficiency of DSS is strongly influenced not only by the volume of data, frequency of data access and redundancy in data storage, but also by the heterogeneity exhibited by the DSS in these dimensions. To this end, we propose and analyze the energy efficiency of a heterogeneous distributed storage system in which n storage servers (disks) store the data of R distinct classes. Data of class i is encoded using an (n, k_i) erasure code and the (random) data retrieval requests can also vary across classes. We show that the energy efficiency of such systems is closely related to the average latency and hence motivates us to study the energy efficiency via the lens of average latency. Through this connection, we show that erasure coding serves the dual purpose of reducing latency and increasing energy efficiency. We present a queuing theoretic analysis of the proposed model and establish upper and lower bounds on the average latency for each data class under various scheduling policies. Through extensive simulations, we present qualitative insights which reveal the impact of coding rate, number of servers, service distribution and number of redundant requests on the average latency and energy efficiency of the DSS.

- 22. 7. Orchestrating Bulk Data Transfers across Geo-Distributed Datacenters As it has become the norm for cloud providers to host multiple datacenters around the globe, significant demands exist for inter-datacenter data transfers in large volumes, e.g., migration of big data. A challenge arises on how to schedule the bulk data transfers at different urgency levels, in order to fully utilize the available inter-datacenter bandwidth. The Software Defined Networking (SDN) paradigm has emerged recently which decouples the control plane from the data paths, enabling potential global optimization of data routing in a network. This paper aims to design a dynamic, highly efficient bulk data transfer service in a geo-distributed datacenter system, and engineer its design and solution algorithms closely within SDN architecture. We model data transfer demands as delay tolerant migration requests with different finishing deadlines. Thanks to the flexibility provided by SDN, we enable dynamic, optimal routing of distinct chunks within each bulk data transfer (instead of treating each transfer as an infinite flow), which can be temporarily stored at intermediate datacenters to mitigate bandwidth contention with more urgent transfers. An optimal chunk routing optimization model is formulated to solve for the best chunk transfer schedules over time. To derive the optimal schedules in an online fashion, three algorithms are discussed, namely a bandwidth-reserving algorithm, a dynamically-adjusting algorithm, and a future-demand-friendly algorithm, targeting different levels of optimality and scalability. We build an SDN system based on the Beacon platform and OpenFlow APIs, and carefully engineer our bulk data transfer algorithms in the system. Extensive real-world experiments are carried out to compare the three algorithms as well as those from the existing literature, in terms of routing optimality, computational delay and overhead.

- 23. NETWORKING (JAVA) 1. Accurate Per-Packet Delay Tomography in Wireless Ad Hoc Networks Abstract: In this paper, we study the problem of decomposing the end-to-end delay into the per-hop delay for each packet, in multi-hop wireless ad hoc networks. Knowledge of the per-hop per-packet delay can greatly improve the network visibility and facilitate network measurement and management. We propose Domo, a passive, lightweight, and accurate delay tomography approach to decomposing the packet end-to-end delay into each hop. We first formulate the per-packet delay tomography problem into a set of optimization problems by carefully considering the constraints among various timing quantities. At the network side, Domo attaches a small overhead to each packet for constructing constraints of the optimization problems. By solving these optimization problems by semi-definite relaxation at the PC side, Domo is able to estimate the per-hop delays with high accuracy as well as give an upper bound and a lower bound for each unknown per-hop delay. We implement Domo and evaluate its performance extensively using both trace-driven studies and large-scale simulations. Results show that Domo significantly outperforms two existing methods, nearly tripling the accuracy of the state-of-the-art.

- 24. 2. FiDoop-DP: Data Partitioning in Frequent Itemset Mining on Hadoop Clusters Traditional parallel algorithms for mining frequent itemsets aim to balance load by equally partitioning data among a group of computing nodes. We start this study by discovering a serious performance problem of the existing parallel Frequent Itemset Mining algorithms. Given a large dataset, data partitioning strategies in the existing solutions suffer from high communication and mining overhead induced by redundant transactions transmitted among computing nodes. We address this problem by developing a data partitioning approach called FiDoop-DP using the MapReduce programming model. The overarching goal of FiDoop-DP is to boost the performance of parallel Frequent Itemset Mining on Hadoop clusters. At the heart of FiDoop-DP is the Voronoi diagram-based data partitioning technique, which exploits correlations among transactions. Incorporating the similarity metric and the Locality-Sensitive Hashing technique, FiDoop-DP places highly similar transactions into a data partition to improve locality without creating an excessive number of redundant transactions. We implement FiDoop-DP on a 24-node Hadoop cluster, driven by a wide range of datasets created by IBM Quest Market-Basket Synthetic Data Generator. Experimental results reveal that FiDoop-DP is conducive to reducing network and computing loads by virtue of eliminating redundant transactions on Hadoop nodes. FiDoop-DP significantly improves the performance of the existing parallel frequent-pattern scheme by up to 31 percent with an average of 18 percent.

- 25. 3. CoRE: Cooperative End-to-End Traffic Redundancy Elimination for Reducing Cloud Bandwidth Cost Abstract: The pay-as-you-go service model impels cloud customers to reduce the usage cost of bandwidth. Traffic Redundancy Elimination (TRE) has been shown to be an effective solution for reducing bandwidth costs, and thus has recently captured significant attention in the cloud environment. By studying the TRE techniques in a trace-driven approach, we found that both short-term (time span of seconds) and long-term (time span of hours or days) data redundancy can concurrently appear in the traffic, and solely using either sender-based TRE or receiver-based TRE cannot simultaneously capture both types of traffic redundancy. Also, the efficiency of existing receiver-based TRE solutions is susceptible to the data changes compared to the historical data in the cache. In this paper, we propose a Cooperative end-to-end TRE solution (CoRE) that can detect and remove both short-term and long-term redundancy through a two-layer TRE design with cooperative operations between layers. An adaptive prediction algorithm is further proposed to improve TRE efficiency through dynamically adjusting the prediction window size based on the hit ratio of historical predictions. Besides, we enhance CoRE to adapt to different traffic redundancy characteristics of cloud applications to improve its operation cost. Extensive evaluation with several real traces shows that CoRE is capable of effectively identifying both short-term and long-term redundancy with low additional cost while ensuring TRE efficiency from data changes.
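The abstract says the prediction window is adjusted dynamically from the hit ratio of past predictions but does not give the rule. A minimal sketch of one plausible rule follows; the function name `adjust_window`, the doubling and halving policy, and the thresholds and bounds are all assumptions, not values from the paper.

```python
def adjust_window(window, hits, predictions,
                  low=0.3, high=0.7, min_window=1, max_window=64):
    """Return the next prediction window size (in chunks).

    Assumed policy: grow the window when recent predictions mostly hit,
    shrink it when they mostly miss, and leave it unchanged otherwise.
    """
    if predictions == 0:
        return window  # no evidence yet, keep the current size
    hit_ratio = hits / predictions
    if hit_ratio >= high:
        return min(window * 2, max_window)
    if hit_ratio <= low:
        return max(window // 2, min_window)
    return window


if __name__ == "__main__":
    window = 8
    for hits, total in [(9, 10), (9, 10), (1, 10), (5, 10)]:
        window = adjust_window(window, hits, total)
        print(f"hit ratio {hits / total:.1f} -> window {window}")
```

Keeping the window bounded on both sides prevents a burst of misses from disabling prediction entirely and a burst of hits from making each misprediction very costly.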

- 26. 4. PDFS: Partially Dedupped File System for Primary Workloads Abstract: Primary storage dedup is difficult to accomplish because of the challenges of achieving low IO latency and high throughput while eliminating data redundancy effectively in the critical IO path. In this paper, we design and implement PDFS, a partially dedupped file system for primary workloads, which is built on a generalized framework using partial data lookup for efficient searching of redundant data in quickly chosen data subsets instead of the whole data. PDFS improves IO latency and throughput systematically by techniques including write path optimization, data dedup parallelization and write order preserving. Such design choices bring dedup to the masses for general primary workloads. Experimental results show that PDFS achieves 74-99 percent of the theoretical maximum dedup ratio with very small or even negative performance degradations compared with mainstream file systems without dedup support. Discussions about varied configuring experiences of PDFS are also carried out.

- 27. 5. EAFR: An Energy-Efficient Adaptive File Replication System in Data-Intensive Clusters In data intensive clusters, a large number of files are stored, processed and transferred simultaneously. To increase the data availability, some file systems create and store three replicas for each file in randomly selected servers across different racks. However, they neglect the file heterogeneity and server heterogeneity, which can be leveraged to further enhance data availability and file system efficiency. As files have heterogeneous popularities, a rigid number of three replicas may not provide immediate response to an excessive number of read requests to hot files, and waste resources (including energy) for replicas of cold files that have few read requests. Also, because servers are heterogeneous in network bandwidth, hardware configuration and capacity (i.e., the maximal number of service requests that can be supported simultaneously), it is crucial to select replica servers to ensure low replication delay and request response delay. In this paper, we propose an Energy-Efficient Adaptive File Replication System (EAFR), which incorporates three components. It is adaptive to time-varying file popularities to achieve a good tradeoff between data availability and efficiency. Higher popularity of a file leads to more replicas and vice versa. Also, to achieve energy efficiency, servers are classified into hot servers and cold servers with different energy consumption, and cold files are stored in cold servers. EAFR then selects a server with sufficient capacity (including network bandwidth and capacity) to hold a replica. To further improve the performance of EAFR, we propose a dynamic transmission rate adjustment strategy to prevent potential incast congestion when replicating a file to a server, a network-aware data node selection strategy to reduce file read latency, and a load-aware replica maintenance strategy to quickly create file replicas under replica node failures. Experimental results on a real-world cluster show the effectiveness of EAFR and the proposed strategies in reducing file read latency, replication time, and power consumption in large clusters.

- 28. 6. Energy-Aware Scheduling of Embarrassingly Parallel Jobs and Resource Allocation in Cloud In cloud computing, with full control of the underlying infrastructures, cloud providers can flexibly place user jobs on suitable physical servers and dynamically allocate computing resources to user jobs in the form of virtual machines. As a cloud provider, scheduling user jobs in a way that minimizes their completion time is important, as this can increase the utilization, productivity, or profit of a cloud. In this paper, we focus on the problem of scheduling embarrassingly parallel jobs composed of a set of independent tasks and consider energy consumption during scheduling. Our goal is to determine a task placement plan and a resource allocation plan for such jobs in a way that minimizes the Job Completion Time (JCT). We begin by proposing an analytical solution to the problem of optimal resource allocation with pre-determined task placement. Next, we formulate the problem of scheduling a single job as a Non-linear Mixed Integer Programming problem and present a relaxation with an equivalent Linear Programming problem. We further propose an algorithm named TaPRA and its simplified version TaPRA-fast that solve the single job scheduling problem. Lastly, to address multiple jobs in online scheduling, we propose an online scheduler named OnTaPRA. By comparing with the state-of-the-art algorithms and schedulers via simulations, we demonstrate that TaPRA and TaPRA-fast reduce the JCT by 40-430 percent and the OnTaPRA scheduler reduces the average JCT by 60-280 percent. In addition, TaPRA-fast can be 10 times faster than TaPRA with around 5 percent performance degradation compared to TaPRA, which makes the use of TaPRA-fast very appropriate in practice.

- 29. NETWORK SECURITY (JAVA) 1. A Novel Class of Robust Covert Channels Using Out-of-Order Packets Covert channels are usually used to circumvent security policies and allow information leakage without being observed. In this paper, we propose a novel covert channel technique using the packet reordering phenomenon as a host for carrying secret communications. Packet reordering is a common phenomenon on the Internet. Moreover, it is handled transparently from the user and application-level processes. This makes it an attractive medium to exploit for sending hidden signals to receivers by dynamically manipulating packet order in a network flow. In our approach, specific permutations of successive packets are selected to enhance the reliability of the channel, while the frequency distribution of their usage is tuned to increase stealthiness by imitating real Internet traffic. It is very expensive for the adversary to discover the covert channel due to the tremendous overhead to buffer and sort the packets among huge amount of background traffic. A simple tool is implemented to demonstrate this new channel. We studied extensively the robustness and capabilities of our proposed channel using both simulation and experimentation over large varieties of traffic characteristics. The reliability and capacity of this technique have shown promising results. We also investigated a practical mechanism for distorting and potentially preventing similar novel channels.
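To make the notion of carrying data in packet order concrete, the sketch below maps an integer to a permutation of a small group of sequence numbers and back, using the factorial number system. It is an illustration only: the paper selects specific permutations for reliability and tunes their frequency for stealth, whereas this sketch uses every permutation uniformly. The names `encode_order` and `decode_order` are hypothetical.

```python
from math import factorial


def encode_order(value, sequence_numbers):
    """Return the sequence numbers reordered so that their order encodes `value`.

    A group of n packets can carry any integer in [0, n!).
    """
    pool = sorted(sequence_numbers)
    n = len(pool)
    if not 0 <= value < factorial(n):
        raise ValueError("value does not fit in a permutation of this size")
    ordered = []
    for remaining in range(n, 0, -1):
        index, value = divmod(value, factorial(remaining - 1))
        ordered.append(pool.pop(index))
    return ordered


def decode_order(received):
    """Recover the integer from the arrival order of distinct sequence numbers."""
    pool = sorted(received)
    n = len(received)
    value = 0
    for position, number in enumerate(received):
        index = pool.index(number)
        value += index * factorial(n - 1 - position)
        pool.pop(index)
    return value


if __name__ == "__main__":
    sent = encode_order(17, [100, 101, 102, 103])
    print(sent, decode_order(sent))
```

Four packets give 24 distinguishable orders, so each group carries a little over four bits; natural reordering in the network would corrupt some groups, which is why a robust design restricts itself to well-separated permutations.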

- 30. 2. Optimized Identity-Based Encryption from Bilinear Pairing for Lightweight Devices Lightweight devices such as smart cards and RFID tags have very limited hardware resources, which could be too weak to cope with asymmetric-key cryptography. It would be desirable if the cryptographic algorithm could be optimized in order to better use hardware resources. In this paper, we demonstrate how identity-based encryption algorithms from bilinear pairing can be optimized so that hardware resources can be saved. We notice that the identity-based encryption algorithms from bilinear pairing in the literature must perform both elliptic curve group operations and multiplicative group operations, which consume a lot of hardware resources. We manage to eliminate the need for multiplicative group operations for encryption. This is a significant discovery since the hardware structure can be simplified for implementing pairing-based cryptography. Our experimental results show that our encryption algorithm saves up to 47 percent memory (27,239 RAM bits) in FPGA implementation.

- 31. 3. Efficient and Confidentiality-Preserving Content Based Publish/Subscribe with Prefiltering Content-based publish/subscribe provides a loosely-coupled and expressive form of communication for large-scale distributed systems. Confidentiality is a major challenge for publish/subscribe middleware deployed over multiple administrative domains. Encrypted matching allows confidentiality-preserving content-based filtering but has high performance overheads. It may also prevent the use of classical optimizations based on subscriptions containment. We propose a support mechanism that reduces the cost of encrypted matching, in the form of a prefiltering operator using Bloom filters and simple randomization techniques. This operator greatly reduces the amount of encrypted subscriptions that must be matched against incoming encrypted publications. It leverages subscription containment information when available, but also ensures that containment confidentiality is preserved otherwise. We propose containment obfuscation techniques and provide a rigorous security analysis of the information leaked by Bloom filters in this case. We conduct a thorough experimental evaluation of prefiltering under a large variety of workloads. Our results indicate that prefiltering is successful at reducing the space of subscriptions to be tested in all cases. We show that while there is a tradeoff between prefiltering efficiency and information leakage when using containment obfuscation, it is practically possible to obtain good prefiltering performance while securing the technique against potential leakages.
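A Bloom filter prefilter can be sketched in a few lines. The example below is a plaintext simplification under stated assumptions: subscriptions are modeled as sets of required attribute names, a publication as a set of attribute names, and the filter parameters (256 bits, 3 hashes) are arbitrary. The paper's operator works on encrypted data with randomization and containment obfuscation, none of which is reproduced here; `BloomFilter` and `prefilter` are hypothetical names.

```python
import hashlib


class BloomFilter:
    """Fixed-size Bloom filter backed by a Python integer used as a bit array."""

    def __init__(self, size=256, num_hashes=3):
        self.size = size
        self.num_hashes = num_hashes
        self.bits = 0

    def _positions(self, item):
        # Derive one bit position per seeded SHA-256 hash of the item.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item):
        for position in self._positions(item):
            self.bits |= 1 << position

    def might_contain(self, item):
        # False means definitely absent; True means possibly present.
        return all((self.bits >> position) & 1 for position in self._positions(item))


def prefilter(subscriptions, publication_attributes):
    """Return ids of subscriptions that may match and need the expensive check."""
    bloom = BloomFilter()
    for attribute in publication_attributes:
        bloom.add(attribute)
    return [
        sub_id for sub_id, required in subscriptions.items()
        if all(bloom.might_contain(attribute) for attribute in required)
    ]


if __name__ == "__main__":
    subs = {"s1": {"price"}, "s2": {"volume", "symbol"}}
    print(prefilter(subs, {"price", "symbol"}))
```

Because a Bloom filter has no false negatives, a subscription that truly matches is never discarded; false positives only cost an unnecessary run of the expensive matching step.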

- 32. 4. An Efficient Lattice Based Multi-Stage Secret Sharing Scheme In this paper, we construct a lattice based (t, n) threshold multi-stage secret sharing (MSSS) scheme according to Ajtai’s construction for one-way functions. In an MSSS scheme, the authorized subsets of participants can recover a subset of secrets at each stage while other secrets remain undisclosed. In this paper, each secret is a vector from a t-dimensional lattice and the basis of each lattice is kept private. A t-subset of n participants can recover the secret(s) using their assigned shares. Using a lattice based one-way function, even after some secrets are revealed, the computational security of the unrecovered secrets is provided against quantum computers. The scheme is multi-use in the sense that to share a new set of secrets, it is sufficient to renew some public information such that a new share distribution is no longer required. Furthermore, the scheme is verifiable meaning that the participants can verify the shares received from the dealer and the recovered secrets from the combiner, using public information.

- 33. 5. Efficient and Privacy-Preserving Min and kth Min Computations in Mobile Sensing Systems Protecting the privacy of mobile phone user participants is extremely important for mobile phone sensing applications. In this paper, we study how an aggregator can expeditiously compute the minimum value or the kth minimum value of all users’ data without knowing them. We construct two secure protocols using probabilistic coding schemes and a cipher system that allows homomorphic bitwise XOR computations for our problems. Following the standard cryptographic security definition in the semi-honest model, we formally prove our protocols’ security. The protocols proposed by us can support time-series data and need not assume that the aggregator is trusted. Moreover, different from existing protocols that are based on secure arithmetic sum computations, our protocols are based on secure bitwise XOR computations, and thus are more efficient.

- 34. 6. My Privacy My Decision: Control of Photo Sharing on Online Social Networks Photo sharing is an attractive feature which popularizes online social networks (OSNs). Unfortunately, it may leak users’ privacy if they are allowed to post, comment, and tag a photo freely. In this paper, we attempt to address this issue and study the scenario when a user shares a photo containing individuals other than himself/herself (termed co-photo for short). To prevent possible privacy leakage of a photo, we design a mechanism to enable each individual in a photo to be aware of the posting activity and participate in the decision making on the photo posting. For this purpose, we need an efficient facial recognition (FR) system that can recognize everyone in the photo. However, more demanding privacy settings may limit the number of the photos publicly available to train the FR system. To deal with this dilemma, our mechanism attempts to utilize users’ private photos to design a personalized FR system specifically trained to differentiate possible photo co-owners without leaking their privacy. We also develop a distributed consensus-based method to reduce the computational complexity and protect the private training set. We show that our system is superior to other possible approaches in terms of recognition ratio and efficiency. Our mechanism is implemented as a proof of concept Android application on Facebook’s platform.

- 35. 7. Secure and Private Data Aggregation for Energy Consumption Scheduling in Smart Grids Recently proposed solutions for demand side energy management leverage the two-way communication infrastructure provided by modern smart-meters and share the usage information with the other users. In this paper, we first highlight the privacy and security issues involved in the distributed demand management protocols. We propose a novel protocol to share required information among users providing privacy, confidentiality, and integrity. We also propose a new clustering-based, distributed multi-party computation (MPC) protocol. Through simulation experiments we demonstrate the efficiency of our proposed solution. The existing solutions typically thwart selfish and malicious behavior of consumers by deploying billing mechanisms based on total consumption during a few time slots. However, the billing is typically based on the total usage in each time slot in smart grids. In the second part of this paper, we formally prove that under the per-slot based charging policy, users have incentive to deviate from the proposed protocols. We also propose a protocol to identify untruthful users in these networks. Finally, considering a repeated interaction among honest and dishonest users, we derive the conditions under which the smart grid can enforce cooperation among users and prevent dishonest declaration of consumption.

- 36. 8. Using Virtual Machine Allocation Policies to Defend against Co-Resident Attacks in Cloud Computing Cloud computing enables users to consume various IT resources in an on-demand manner, and with low management overhead. However, customers can face new security risks when they use cloud computing platforms. In this paper, we focus on one such threat, the co-resident attack, where malicious users build side channels and extract private information from virtual machines co-located on the same server. Previous works mainly attempt to address the problem by eliminating side channels. However, most of these methods are not suitable for immediate deployment due to the required modifications to current cloud platforms. We choose to solve the problem from a different perspective, by studying how to improve the virtual machine allocation policy, so that it is difficult for attackers to co-locate with their targets. Specifically, we (1) define security metrics for assessing the attack; (2) model these metrics, and compare the difficulty of achieving co-residence under three commonly used policies; (3) design a new policy that not only mitigates the threat of attack, but also satisfies the requirements for workload balance and low power consumption; and (4) implement, test, and prove the effectiveness of the policy on the popular open-source platform OpenStack.

- 37. MOBILE COMPUTING (JAVA) 1. Virtual Multipath Attack and Defense for Location Distinction in Wireless Networks In wireless networks, location distinction aims to detect location changes or facilitate authentication of wireless users. To achieve location distinction, recent research has focused on investigating the spatial uncorrelation property of wireless channels. Specifically, differences in wireless channel characteristics are used to distinguish locations or identify location changes. However, we discover a new attack against all existing location distinction approaches that are built on the spatial uncorrelation property of wireless channels. In such an attack, the adversary can easily hide her location changes or impersonate movements by injecting fake wireless channel characteristics into a target receiver. To defend against this attack, we propose a detection technique that utilizes an auxiliary receiver or antenna to identify these fake channel characteristics. We also discuss such attacks and corresponding defenses in OFDM systems. Experimental results on our USRP-based prototype show that the discovered attack can craft any desired channel characteristic with a successful probability of 95.0 percent to defeat spatial uncorrelation based location distinction schemes and our novel detection method achieves a detection rate higher than 91.2 percent while maintaining a very low false alarm rate.

- 38. 2. Design and Analysis of an Efficient Friend-to-Friend Content Dissemination System Opportunistic communication, off-loading, and decentralized distribution have been proposed as a means of cost-efficient content dissemination when users are geographically clustered into communities. Despite their promise, none of the proposed systems has been widely adopted due to unbounded high content delivery latency, security, and privacy concerns. This paper presents a novel hybrid content storage and distribution system addressing the trust and privacy concerns of users, lowering the cost of content distribution and storage, and shows how they can be combined uniquely to develop mobile social networking services. The system exploits the fact that users will trust their friends, and by replicating content on the devices of friends who are likely to consume that content it will be possible to disseminate it to other friends when connected to low cost networks. The paper provides a formal definition of this content replication problem, and shows that it is NP-hard. Then, it presents a community based greedy heuristic algorithm with novel dynamic centrality metrics that replicates the content on a minimum number of friends’ devices, to maximize availability. Then, using both real world and synthetic datasets, the effectiveness of the proposed scheme is demonstrated. The practicality of the proposed system is demonstrated through an implementation on Android smartphones.

- 39. 3. Cost-Effective Mapping between Wireless Body Area Networks and Cloud Service Providers Based on Multi-Stage Bargaining This paper presents a bargaining-based resource allocation and price agreement in an environment of cloud-assisted Wireless Body Area Networks (WBANs). The challenge is to finalize a price agreement between the Cloud Service Providers (CSPs) and the WBANs, followed by a cost-effective mapping among them. Existing solutions primarily focus on profits of the CSPs, while guaranteeing different user satisfaction levels. Such pricing schemes are bias prone, as quantifying user satisfaction is fuzzy in nature and hard to implement. Moreover, such a traditional approach may lead to an unregulated market, where a few service providers enjoy a monopoly or oligopoly. In this work, we try to remove such bias from the pricing agreements, and approach this challenge from a comparatively fair point of view. In order to do so, we use the concept of bargaining, an interesting approach involving cooperative game theory. We introduce a multi-stage Nash bargaining solution (MUST-NBS) that, as the name suggests, unfolds into multiple stages of bargaining until a price agreement is concluded between the CSPs and the WBANs. In addition, the proposed approach also consummates the final mapping between the CSPs and the WBANs, depending on the cost-effectiveness of the WBANs. Analysis of the proposed algorithms and the inferences of the results validate the usefulness of the proposed mapping technique.

- 40. 4. Near Optimal Data Gathering in Rechargeable Sensor Networks with a Mobile Sink We study data gathering problem in Rechargeable Sensor Networks (RSNs) with a mobile sink, where rechargeable sensors are deployed into a region of interest to monitor the environment and a mobile sink travels along a pre-defined path to collect data from sensors periodically. In such RSNs, the optimal data gathering is challenging because the required energy consumption for data transmission changes with the movement of the mobile sink and the available energy is time-varying. In this paper, we formulate data gathering problem as a network utility maximization problem, which aims at maximizing the total amount of data collected by the mobile sink while maintaining the fairness of network. Since the instantaneous optimal data gathering scheme changes with time, in order to obtain the globally optimal solution, we first transform the primal problem into an approximate network utility maximization problem by shifting the energy consumption conservation and analyzing necessary conditions for the optimal solution. As a result, each sensor does not need to estimate the amount of harvested energy and the problem dimension is reduced. Then, we propose a Distributed Data Gathering Approach (DDGA), which can be operated distributively by sensors, to obtain the optimal data gathering scheme. Extensive simulations are performed to demonstrate the efficiency of the proposed algorithm.

- 41. 5. Optimal Sleep-Wake Scheduling for Energy Harvesting Smart Mobile Devices In this paper, we develop optimal sleep/wake scheduling algorithms for smart mobile devices that are powered by batteries and are capable of harvesting energy from the environment. Using a novel combination of the two-timescale Lyapunov optimization approach and weight perturbation, we first design the Optimal Sleep/wake scheduling Algorithm (OSA), which does not require any knowledge of the harvestable energy process. We prove that OSA is able to achieve any system performance that is within O(ε) of the optimal, and explicitly compute the required battery size, which is O(1/ε). We then extend our results to incorporate system information into algorithm design. Specifically, we develop the Information-aided OSA algorithm (IOSA) by introducing a novel drift augmenting idea in Lyapunov optimization. We show that IOSA is able to achieve the O(ε) close-to-optimal utility performance and ensures that the required traffic buffer and energy storage size are O([log(1/ε)]^2) with high probability.

- 42. 6. Two-Sided Matching Based Cooperative Spectrum Sharing Dynamic spectrum access (DSA) can effectively improve the spectrum efficiency and alleviate the spectrum scarcity, by allowing unlicensed secondary users (SUs) to access the licensed spectrum of primary users (PUs) opportunistically. Cooperative spectrum sharing is a new promising paradigm to provide necessary incentives for both PUs and SUs in dynamic spectrum access. The key idea is that SUs relay the traffic of PUs in exchange for the access time on the PUs’ licensed spectrum. In this paper, we formulate the cooperative spectrum sharing between multiple PUs and multiple SUs as a two-sided market, and study the market equilibrium under both complete and incomplete information. First, we characterize the sufficient and necessary conditions for the market equilibrium. We analytically show that there may exist multiple market equilibria, among which there is always a unique Pareto-optimal equilibrium for PUs (called PU-Optimal-EQ), in which every PU achieves a utility no worse than in any other equilibrium. Then, we show that under complete information, the unique Pareto-optimal equilibrium PU-Optimal-EQ can always be achieved despite the competition among PUs; whereas, under incomplete information, the PU-Optimal-EQ may not be achieved due to the misrepresentations of SUs (in reporting their private information). Regarding this, we further study the worst-case equilibrium for PUs, and characterize a Robust equilibrium for PUs (called PU-Robust-EQ), which provides every PU a guaranteed utility under all possible misrepresentation behaviors of SUs. Numerical results show that in a typical network where the numbers of PUs and SUs differ, the performance gap between PU-Optimal-EQ and PU-Robust-EQ is quite small (e.g., less than 10 percent in the simulations).
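A two-sided matching between PUs and SUs can be illustrated with the classic deferred acceptance procedure, in which the proposing side obtains its most favorable stable outcome. This is a swapped-in textbook technique, not the mechanism of the paper (whose market also involves relay effort and access time, which are omitted); the preference lists and the name `deferred_acceptance` are hypothetical.

```python
def deferred_acceptance(pu_prefs, su_prefs):
    """PU-proposing deferred acceptance; returns a dict mapping PU -> SU.

    pu_prefs: dict PU -> list of SUs, most preferred first.
    su_prefs: dict SU -> list of PUs, most preferred first.
    Participants may stay unmatched when the two sides differ in size.
    """
    # Precompute each SU's ranking of PUs (lower rank is better).
    rank = {su: {pu: i for i, pu in enumerate(prefs)}
            for su, prefs in su_prefs.items()}
    next_choice = {pu: 0 for pu in pu_prefs}
    held = {}  # SU -> PU whose proposal the SU currently holds
    free = list(pu_prefs)
    while free:
        pu = free.pop()
        prefs = pu_prefs[pu]
        if next_choice[pu] >= len(prefs):
            continue  # PU exhausted its list and stays unmatched
        su = prefs[next_choice[pu]]
        next_choice[pu] += 1
        if pu not in rank.get(su, {}):
            free.append(pu)  # SU finds this PU unacceptable
            continue
        current = held.get(su)
        if current is None:
            held[su] = pu
        elif rank[su][pu] < rank[su][current]:
            held[su] = pu
            free.append(current)  # displaced PU proposes again later
        else:
            free.append(pu)
    return {pu: su for su, pu in held.items()}


if __name__ == "__main__":
    pus = {"PU1": ["SU1", "SU2"], "PU2": ["SU1", "SU2"]}
    sus = {"SU1": ["PU2", "PU1"], "SU2": ["PU1", "PU2"]}
    print(deferred_acceptance(pus, sus))
```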

- 43. 7. GDVAN: A New Greedy Behavior Attack Detection Algorithm for VANETs Vehicular Ad hoc Networks (VANETs), whose main objective is to provide road safety and enhance the driving conditions, are exposed to several kinds of attacks such as Denial of Service (DoS) attacks which affect the availability of the underlying services for legitimate users. We focus especially on the greedy behavior which has been extensively addressed in the literature for Wireless LAN (WLAN) and for Mobile Ad hoc Networks (MANETs). However, this attack has been much less studied in the context of VANETs. This is mainly because the detection of a greedy behavior is much more difficult for high mobility networks such as VANETs. In this paper, we propose a new detection approach called GDVAN (Greedy Detection for VANETs) for greedy behavior attacks in VANETs. The process to conduct the proposed method mainly consists of two phases, which are namely the suspicion phase and the decision phase. The suspicion phase is based on the linear regression mathematical concept while decision phase is based on a fuzzy logic decision scheme. The proposed algorithm not only detects the existence of a greedy behavior but also establishes a list of the potentially compromised nodes using three newly defined metrics. In addition to being passive, one of the major advantages of our technique is that it can be executed by any node of the network and does not require any modification of the IEEE 802.11p standard. Moreover, the practical effectiveness and efficiency of the proposed approach are corroborated through simulations and experiments.

- 44. INFORMATION AND FORENSICS SECURITY (JAVA) 1. BASIS: A Practical Multi-User Broadcast Authentication Scheme in Wireless Sensor Networks Multi-user broadcast authentication is an important security service in wireless sensor networks (WSNs), as it allows a large number of mobile users of the WSNs to join in and broadcast messages to WSNs dynamically and authentically. To reduce communication cost due to the transmission of public-key certificates, broadcast authentication schemes based on identity (ID)-based cryptography have been proposed, but the schemes suffer from expensive pairing computations. In this paper, to minimize computation and communication costs, we propose new provably secure pairing-free ID-based signature schemes with message recovery, MR-IBS and PMR-IBS. We then construct an ID-based multi-user broadcast authentication scheme, BASIS, based on MR-IBS and PMR-IBS for broadcast authentication between users and a sink. We evaluate the practical feasibility of BASIS on the WSN hardware platforms MICAz and Tmote Sky, which are used in real-life deployments, in terms of computation/communication cost and energy consumption. Consequently, BASIS reduces the total energy consumption on Tmote Sky by up to 72% and 17% compared with a Bloom filter-based authentication scheme based on a variant of ECDSA with message recovery and IMBAS based on an ID-based signature scheme with message appendix, respectively.

- 45. 2. JPEG Quantization Step Estimation and Its Applications to Digital Image Forensics The goal of this paper is to propose an accurate method for estimating quantization steps from an image that has been previously JPEG-compressed and stored in lossless format. The method is based on the combination of the quantization effect and the statistics of discrete cosine transform (DCT) coefficient characterized by the statistical model that has been proposed in our previous works. The analysis of quantization effect is performed within a mathematical framework, which justifies the relation of local maxima of the number of integer quantized forward coefficients with the true quantization step. From the candidate set of the true quantization step given by the previous analysis, the statistical model of DCT coefficients is used to provide the optimal quantization step candidate. The proposed method can also be exploited to estimate the secondary quantization table in a double-JPEG compressed image stored in lossless format and detect the presence of JPEG compression. Numerical experiments on large image databases with different image sizes and quality factors highlight the high accuracy of the proposed method.

- 46. 3. MasterPrint: Exploring the Vulnerability of Partial Fingerprint-Based Authentication Systems This paper investigates the security of partial fingerprint-based authentication systems, especially when multiple fingerprints of a user are enrolled. A number of consumer electronic devices, such as smartphones, are beginning to incorporate fingerprint sensors for user authentication. The sensors embedded in these devices are generally small and the resulting images are, therefore, limited in size. To compensate for the limited size, these devices often acquire multiple partial impressions of a single finger during enrollment to ensure that at least one of them will successfully match with the image obtained from the user during authentication. Furthermore, in some cases, the user is allowed to enroll multiple fingers, and the impressions pertaining to multiple partial fingers are associated with the same identity (i.e., one user). A user is said to be successfully authenticated if the partial fingerprint obtained during authentication matches any one of the stored templates. This paper investigates the possibility of generating a “MasterPrint,” a synthetic or real partial fingerprint that serendipitously matches one or more of the stored templates for a significant number of users. Our preliminary results on an optical fingerprint data set and a capacitive fingerprint data set indicate that it is indeed possible to locate or generate partial fingerprints that can be used to impersonate a large number of users. In this regard, we expose a potential vulnerability of partial fingerprint-based authentication systems, especially when multiple impressions are enrolled per finger.

- 47. 4. Privacy-Preserving Image Denoising From External Cloud Databases Along with the rapid advancement of digital image processing technology, image denoising remains a fundamental task, which aims to recover the original image from its noisy observation. With the explosive growth of images on the Internet, one recent trend is to seek high-quality similar patches in cloud image databases and harness the rich redundancy therein for promising denoising performance. Despite the well-understood benefits, such a cloud-based denoising paradigm would undesirably raise security and privacy issues, especially for privacy-sensitive image data sets. In this paper, we initiate the first endeavor toward privacy-preserving image denoising from external cloud databases. Our design enables the cloud hosting encrypted databases to provide secure query-based image denoising services. Considering that image denoising intrinsically demands high-quality similar image patches, our design builds upon recent advancements in secure similarity search, Yao’s garbled circuits, and image denoising operations, where each is used at a different phase of the design for the best performance. We formally analyze the security strengths. Extensive experiments over real-world data sets demonstrate that our design achieves denoising quality close to the optimal performance in plaintext.

- 48. 5. Two-Cloud Secure Database for Numeric-Related SQL Range Queries With Privacy Preserving Industries and individuals outsource databases to realize convenient and low-cost applications and services. In order to provide sufficient functionality for SQL queries, many secure database schemes have been proposed. However, such schemes are vulnerable to privacy leakage to the cloud server. The main reason is that the database is hosted and processed on the cloud server, which is beyond the control of data owners. For numerical range queries (“>,” “<,” and so on), those schemes cannot provide sufficient privacy protection against practical challenges, e.g., privacy leakage of statistical properties and access patterns. Furthermore, an increased number of queries will inevitably leak more information to the cloud server. In this paper, we propose a two-cloud architecture for secure databases, with a series of intersection protocols that provide privacy preservation for various numeric-related range queries. Security analysis shows that the privacy of numerical information is strongly protected against cloud providers in our proposed scheme.

- 49. 6. Identity-Based Remote Data Integrity Checking With Perfect Data Privacy Preserving for Cloud Storage Remote data integrity checking (RDIC) enables a data storage server, say a cloud server, to prove to a verifier that it is actually storing a data owner’s data honestly. To date, a number of RDIC protocols have been proposed in the literature, but most of the constructions suffer from complex key management, that is, they rely on the expensive public key infrastructure (PKI), which might hinder the deployment of RDIC in practice. In this paper, we propose a new construction of an identity-based (ID-based) RDIC protocol that uses a key-homomorphic cryptographic primitive to reduce the system complexity and the cost of establishing and managing the public key authentication framework in PKI-based RDIC schemes. We formalize ID-based RDIC and its security model, including security against a malicious cloud server and zero-knowledge privacy against a third-party verifier. The proposed ID-based RDIC protocol leaks no information about the stored data to the verifier during the RDIC process. The new construction is proven secure against the malicious server in the generic group model and achieves zero-knowledge privacy against a verifier. Extensive security analysis and implementation results demonstrate that the proposed protocol is provably secure and practical in real-world applications.

- 50. 7. Identity-Based Data Outsourcing with Comprehensive Auditing in Clouds Cloud storage systems provide convenient file storage and sharing services for distributed clients. To address integrity, controllable outsourcing, and origin auditing concerns on outsourced files, we propose an identity-based data outsourcing (IBDO) scheme equipped with desirable features advantageous over existing proposals in securing outsourced data. First, our IBDO scheme allows a user to authorize dedicated proxies to upload data to the cloud storage server on her behalf, e.g., a company may authorize some employees to upload files to the company’s cloud account in a controlled way. The proxies are identified and authorized with their recognizable identities, which eliminates the complicated certificate management of usual secure distributed computing systems. Second, our IBDO scheme facilitates comprehensive auditing, i.e., our scheme not only permits regular integrity auditing as in existing schemes for securing outsourced data, but also allows auditing of the information on data origin, type, and consistency of outsourced files. Security analysis and experimental evaluation indicate that our IBDO scheme provides strong security with desirable efficiency.

- 51. 8. RAAC: Robust and Auditable Access Control with Multiple Attribute Authorities for Public Cloud Storage Data access control is a challenging issue in public cloud storage systems. Ciphertext-policy attribute-based encryption (CP-ABE) has been adopted as a promising technique to provide flexible, fine-grained, and secure data access control for cloud storage with honest-but-curious cloud servers. However, in the existing CP-ABE schemes, the single attribute authority must execute the time-consuming user legitimacy verification and secret key distribution, and hence becomes a single-point performance bottleneck when a CP-ABE scheme is adopted in a large-scale cloud storage system. Users may be stuck in the waiting queue for a long period to obtain their secret keys, thereby resulting in low efficiency of the system. Although multi-authority access control schemes have been proposed, these schemes still cannot overcome the drawbacks of single-point bottleneck and low efficiency, because each of the authorities still independently manages a disjoint attribute set. In this paper, we propose a novel heterogeneous framework to remove the single-point performance bottleneck and provide a more efficient access control scheme with an auditing mechanism. Our framework employs multiple attribute authorities to share the load of user legitimacy verification. Meanwhile, in our scheme, a central authority is introduced to generate secret keys for legitimacy-verified users. Unlike other multi-authority access control schemes, each of the authorities in our scheme manages the whole attribute set individually. To enhance security, we also propose an auditing mechanism to detect which attribute authority has incorrectly or maliciously performed the legitimacy verification procedure. Analysis shows that our system not only guarantees the security requirements but also substantially improves key-generation performance.

- 52. 9. Provably Secure Dynamic ID-Based Anonymous Two-Factor Authenticated Key Exchange Protocol With Extended Security Model An authenticated key exchange (AKE) protocol allows a user and a server to authenticate each other and generate a session key for subsequent communications. With the rapid development of low-power and highly efficient networks, such as pervasive and mobile computing networks, in recent years, many efficient AKE protocols have been proposed to achieve user privacy and authentication in communications. Besides secure session key establishment, those AKE protocols offer some other useful functionalities, such as two-factor user authentication and mutual authentication. However, most of them have one or more weaknesses, such as vulnerability to lost-smart-card attacks, offline dictionary attacks, or de-synchronization attacks, or the lack of forward secrecy, user anonymity, or untraceability. Furthermore, an AKE scheme under the public key infrastructure may not be suitable for lightweight computational devices, and the security model of AKE does not capture user anonymity or resistance to lost-smart-card attacks. In this paper, we propose a novel dynamic ID-based anonymous two-factor AKE protocol, which addresses all the above issues. Our protocol also supports smart card revocation and password update without centralized storage. Further, we extend the security model of AKE to support user anonymity and resistance to lost-smart-card attacks, and the proposed scheme is provably secure in the extended security model. The low computational and bandwidth cost indicates that our protocol can be deployed for pervasive computing applications and mobile communications in practice.

- 53. 10. Privacy-Preserving Smart Semantic Search Based on Conceptual Graphs Over Encrypted Outsourced Data Searchable encryption is an important research area in cloud computing. However, most existing efficient and reliable ciphertext search schemes are based on keywords or shallow semantic parsing, which are not smart enough to meet users’ search intentions. Therefore, in this paper, we propose a content-aware search scheme, which can make semantic search smarter. First, we introduce conceptual graphs (CGs) as a knowledge representation tool. Then, we present our two schemes (PRSCG and PRSCG-TF) based on CGs according to different scenarios. In order to conduct numerical calculation, we transform the original CGs into their linear form with some modification and map them to numerical vectors. Second, we employ the technology of multi-keyword ranked search over encrypted cloud data as the basis against two threat models and propose PRSCG and PRSCG-TF to resolve the problem of privacy-preserving smart semantic search based on CGs. Finally, we choose a real-world data set, the CNN data set, to test our scheme. We also analyze the privacy and efficiency of the proposed schemes in detail. The experimental results show that our proposed schemes are efficient.
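
Once conceptual graphs have been mapped to numerical vectors, ranked search reduces to scoring documents against a query vector. The sketch below shows only that plaintext scoring step, using an inner product as the relevance score; this is an assumption for illustration, since the abstract does not specify the scoring function, and the encryption layer that PRSCG and PRSCG-TF add is omitted entirely. All names and vectors are hypothetical.

```python
def rank_by_inner_product(query, documents, top_k=3):
    """Rank document ids by the inner product of their vectors with the query.

    Plaintext illustration only: a real searchable-encryption scheme would
    compute comparable scores over encrypted vectors.
    """
    scored = []
    for doc_id, vector in documents.items():
        score = sum(q * d for q, d in zip(query, vector))
        scored.append((score, doc_id))
    # Highest score first; ties are broken by document id for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


# Hypothetical vectors derived from linearized conceptual graphs.
docs = {"a": [1, 0, 1], "b": [0, 1, 0], "c": [1, 1, 1]}
top_two = rank_by_inner_product([1, 0, 1], docs, top_k=2)
```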

- 54. 11. Strong Key-Exposure Resilient Auditing for Secure Cloud Storage Key exposure is one serious security problem for cloud storage auditing. In order to deal with this problem, cloud storage auditing scheme with key-exposure resilience has been proposed. However, in such a scheme, the malicious cloud might still forge valid authenticators later than the key-exposure time period if it obtains the current secret key of data owner. In this paper, we innovatively propose a paradigm named strong key-exposure resilient auditing for secure cloud storage, in which the security of cloud storage auditing not only earlier than but also later than the key exposure can be preserved. We formalize the definition and the security model of this new kind of cloud storage auditing and design a concrete scheme. In our proposed scheme, the key exposure in one time period doesn’t affect the security of cloud storage auditing in other time periods. The rigorous security proof and the experimental results demonstrate that our proposed scheme achieves desirable security and efficiency.

- 55. IMAGE PROCESSING (Java) 1. Image Re-Ranking Based on Topic Diversity Social media sharing websites allow users to annotate images with free tags, which significantly contributes to the development of web image retrieval. Tag-based image search is an important method to find images shared by users in social networks. However, making the top-ranked results both relevant and diverse is challenging. In this paper, we propose a topic-diverse ranking approach for tag-based image retrieval that promotes topic coverage performance. First, we construct a tag graph based on the similarity between each pair of tags. Then, a community detection method is applied to mine the topic community of each tag. After that, inter-community and intra-community ranking are introduced to obtain the final retrieved results. In the inter-community ranking process, an adaptive random walk model is employed to rank the communities based on the multi-information of each topic community. In addition, we build an inverted index structure for images to accelerate the searching process. Experimental results on the Flickr and NUS-Wide data sets show the effectiveness of the proposed approach.
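
The inter-community ranking step relies on a random walk over a weighted graph. The sketch below is a plain random walk with restart computed by power iteration, not the adaptive model from the paper; the graph, the restart probability, and all names are assumptions chosen for illustration.

```python
def random_walk_scores(weights, restart=0.15, iterations=100):
    """Score nodes of a weighted graph with a random walk with restart.

    weights maps each node to a dict of {neighbor: edge weight}; every
    neighbor must also appear as a key of weights.
    """
    nodes = sorted(weights)
    n = len(nodes)
    scores = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        # With probability `restart` the walker jumps to a uniform random node.
        nxt = {v: restart / n for v in nodes}
        for u in nodes:
            total = sum(weights[u].values())
            if total == 0:
                # A node without outgoing weight spreads its mass uniformly.
                for v in nodes:
                    nxt[v] += (1 - restart) * scores[u] / n
                continue
            for v, w in weights[u].items():
                nxt[v] += (1 - restart) * scores[u] * w / total
        scores = nxt
    return scores


# Hypothetical similarity graph: community A is linked to B, C, and D.
graph = {
    "A": {"B": 1.0, "C": 1.0, "D": 1.0},
    "B": {"A": 1.0},
    "C": {"A": 1.0},
    "D": {"A": 1.0},
}
walk = random_walk_scores(graph)
ranking = sorted(walk, key=lambda v: -walk[v])
```

Nodes with more, or more heavily weighted, connections accumulate a larger share of the walk, so the central node ranks first in this toy graph.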

- 56. 2. Fast Bayesian JPEG Decompression and Denoising With Tight Frame Priors JPEG decompression can be understood as an image reconstruction problem similar to denoising or deconvolution. Such problems can be solved within the Bayesian maximum a posteriori probability framework by iterative optimization algorithms. Prior knowledge about an image is usually described by the l1 norm of its sparse domain representation. For many problems, if the sparse domain forms a tight frame, optimization by the alternating direction method of multipliers can be very efficient. However, for JPEG, such a solution is not straightforward, e.g., due to quantization and subsampling of chrominance channels. The derivation of such a solution is the main contribution of this paper. In addition, we show that a minor modification of the proposed algorithm simultaneously solves the problem of image denoising. In the experimental section, we analyze the behavior of the proposed decompression algorithm in a small number of iterations with an interesting conclusion that this mode outperforms full convergence. Example images demonstrate the visual quality of decompression and quantitative experiment.
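
When an l1-norm prior is optimized with the alternating direction method of multipliers, the l1 term is typically handled by soft-thresholding, which is the proximal operator of the l1 norm. The sketch below shows only that building block on a plain list of coefficients; it is not the decompression algorithm derived in the paper, and the function name and sample values are illustrative.

```python
def soft_threshold(values, threshold):
    """Apply element-wise soft-thresholding, the proximal operator of the l1 norm.

    Each value is shrunk toward zero by `threshold`; values whose magnitude
    does not exceed the threshold become exactly zero.
    """
    out = []
    for x in values:
        if x > threshold:
            out.append(x - threshold)
        elif x < -threshold:
            out.append(x + threshold)
        else:
            out.append(0.0)
    return out


# Hypothetical sparse-domain coefficients shrunk with a threshold of 1.0.
shrunk = soft_threshold([3.0, -0.5, -2.0], 1.0)
```

Small coefficients are zeroed, which is how the l1 prior promotes a sparse representation.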

- 57. 3. Robust ImageGraph: Rank-Level Feature Fusion for Image Search Recently, feature fusion has demonstrated its effectiveness in image search. However, bad features and inappropriate parameters usually bring about false positive images, i.e., outliers, leading to inferior performance. Therefore, a major challenge for a fusion scheme is how to be robust to outliers. Towards this goal, this paper proposes a rank-level framework for robust feature fusion. First, we define Rank Distance to measure the relevance of images at rank level. Based on it, Bayes similarity is introduced to evaluate the retrieval quality of individual features, through which true matches tend to obtain higher weight than outliers. Then, we construct the directed ImageGraph to encode the relationship of images. Each image is connected to its K nearest neighbors with an edge, and the edge is weighted by Bayes similarity. Multiple rank lists resulting from different methods are merged via ImageGraph. Furthermore, on the fused ImageGraph, local ranking is performed to re-order the initial rank lists. It aims at local optimization, and thus is more robust to global outliers. Extensive experiments on four benchmark data sets validate the effectiveness of our method. In addition, the proposed method outperforms two popular fusion schemes, and the results are competitive with the state of the art.