Content based filtering


Content based filtering, with several techniques.


Content based filtering

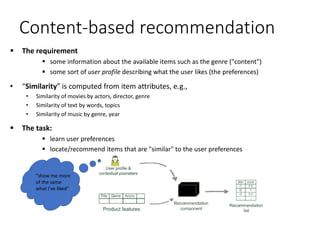

- 1. Content-based recommendation
  - Requirements:
    - some information about the available items, such as the genre (the "content")
    - some sort of user profile describing what the user likes (the preferences)
  - "Similarity" is computed from item attributes, e.g.:
    - similarity of movies by actors, director, genre
    - similarity of text by words, topics
    - similarity of music by genre, year
  - The task:
    - learn the user's preferences
    - locate and recommend items that are "similar" to those preferences
  - In short: "show me more of what I've liked"

- 2. Most content-based recommendation techniques were applied to recommending text documents, such as web pages or newsgroup messages.
  - The content of items can also be represented as text documents, with textual descriptions of their basic characteristics.
  - Structured: each item is described by the same set of attributes.

  | Title | Genre | Author | Type | Price | Keywords |
  |---|---|---|---|---|---|
  | The Night of the Gun | Memoir | David Carr | Paperback | 29.90 | Press and journalism, drug addiction, personal memoirs, New York |
  | The Lace Reader | Fiction, Mystery | Brunonia Barry | Hardcover | 49.90 | American contemporary fiction, detective, historical |
  | Into the Fire | Romance, Suspense | Suzanne Brockmann | Hardcover | 45.90 | American fiction, murder, neo-Nazism |

- 3. Item representation: content representation and item similarities

  Items:

  | Title | Genre | Author | Type | Price | Keywords |
  |---|---|---|---|---|---|
  | The Night of the Gun | Memoir | David Carr | Paperback | 29.90 | Press and journalism, drug addiction, personal memoirs, New York |
  | The Lace Reader | Fiction, Mystery | Brunonia Barry | Hardcover | 49.90 | American contemporary fiction, detective, historical |
  | Into the Fire | Romance, Suspense | Suzanne Brockmann | Hardcover | 45.90 | American fiction, murder, neo-Nazism |

  User profile:

  | Title | Genre | Author | Type | Price | Keywords |
  |---|---|---|---|---|---|
  | … | Fiction | Brunonia Barry, Ken Follett | Paperback | 25.65 | Detective, murder, New York |

  - Approach:
    - Compute the similarity of an unseen item with the user profile based on the keyword overlap, e.g. using the Dice coefficient:

      $$\frac{2 \times |keywords(b_i) \cap keywords(b_j)|}{|keywords(b_i)| + |keywords(b_j)|}$$

      where $keywords(b_j)$ describes book $b_j$ with a set of keywords.
    - Or use and combine multiple metrics.
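The keyword overlap on slide 3 can be sketched in a few lines of Python. This is a minimal illustration, not the deck's own implementation: the function name `dice_coefficient` is hypothetical, and treating each comma-separated, lower-cased phrase as one keyword is an assumption about tokenization.

```python
def dice_coefficient(keywords_a, keywords_b):
    """Dice coefficient: 2 * |A ∩ B| / (|A| + |B|), with inputs compared as sets."""
    a, b = set(keywords_a), set(keywords_b)
    if not a and not b:
        return 0.0  # convention chosen here for two empty keyword sets
    return 2 * len(a & b) / (len(a) + len(b))


# Keywords taken from the slide; lower-casing and phrase-level keywords are assumptions.
profile = {"detective", "murder", "new york"}
books = {
    "The Night of the Gun": {"press and journalism", "drug addiction",
                             "personal memoirs", "new york"},
    "The Lace Reader": {"american contemporary fiction", "detective", "historical"},
    "Into the Fire": {"american fiction", "murder", "neo-nazism"},
}

# Score every book against the user profile.
scores = {title: dice_coefficient(profile, kw) for title, kw in books.items()}
for title, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{title}: {score:.3f}")
```

With exact phrase matching, each book shares only one keyword with the profile, which illustrates why plain keyword overlap is a coarse signal.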

- 4. Term Frequency - Inverse Document Frequency (TF-IDF)
  - A simple keyword representation has its problems, in particular when keywords are extracted automatically:
    - not every word has similar importance
    - longer documents have a higher chance of overlapping with the user profile
  - Standard measure: TF-IDF
    - encodes text documents in a multi-dimensional Euclidean space
    - each document becomes a weighted term vector
  - TF: measures how often a term appears (its density in a document)
    - assumes that important terms appear more often
    - normalization has to be done in order to take document length into account
  - IDF: aims to reduce the weight of terms that appear in all documents

- 5. Term Frequency - Inverse Document Frequency (TF-IDF)
  - Given a keyword $i$ and a document $j$:
  - $TF(i, j)$: term frequency of keyword $i$ in document $j$
  - $IDF(i)$: inverse document frequency, calculated as

    $$IDF(i) = \log \frac{N}{n(i)}$$

    - $N$: number of all recommendable documents
    - $n(i)$: number of documents from $N$ in which keyword $i$ appears
  - TF-IDF is calculated as:

    $$TF\text{-}IDF(i, j) = TF(i, j) \times IDF(i)$$
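A small Python sketch of the TF-IDF formulas on slide 5 follows. The slides do not fix a particular TF normalization, so dividing the raw count by the document length is an assumption here, as are the function name `tf_idf`, the whitespace tokenization, and the toy documents.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per tokenized document.

    TF(i, j)  = count of term i in document j / length of document j (assumed normalization)
    IDF(i)    = log(N / n(i)), with N documents and n(i) documents containing term i
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))  # count each term once per document

    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Toy corpus (illustrative only).
docs = [
    "american fiction murder".split(),
    "american detective fiction".split(),
    "memoir new york".split(),
]
weights = tf_idf(docs)
print(weights[0])
```

A term that occurs in every document gets IDF = log(1) = 0, which is how the weighting suppresses uninformative words.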

- 6. Cosine similarity
  - The usual similarity metric for comparing vectors is cosine similarity, which is calculated from the angle between the vectors:

    $$sim(a, b) = \frac{a \cdot b}{|a| \cdot |b|}$$

  - Adjusted cosine similarity
    - takes the average user ratings $\bar{r}_u$ into account and transforms the original ratings
    - $U$: set of users who have rated both items $a$ and $b$

    $$sim(a, b) = \frac{\sum_{u \in U} (r_{u,a} - \bar{r}_u)(r_{u,b} - \bar{r}_u)}{\sqrt{\sum_{u \in U} (r_{u,a} - \bar{r}_u)^2} \, \sqrt{\sum_{u \in U} (r_{u,b} - \bar{r}_u)^2}}$$
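Both formulas on slide 6 can be sketched in plain Python. The function names, the nested-dict rating layout, and the sample ratings are hypothetical; computing each user's mean over all items that user has rated is one common reading of $\bar{r}_u$ and is an assumption here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def adjusted_cosine(ratings, item_a, item_b):
    """Adjusted cosine between two items.

    ratings: {user: {item: rating}}. Only users who rated both items contribute;
    each rating is shifted by that user's mean rating (assumed: mean over all
    items the user rated).
    """
    num = den_a = den_b = 0.0
    for user_ratings in ratings.values():
        if item_a in user_ratings and item_b in user_ratings:
            mean = sum(user_ratings.values()) / len(user_ratings)
            da = user_ratings[item_a] - mean
            db = user_ratings[item_b] - mean
            num += da * db
            den_a += da * da
            den_b += db * db
    if den_a == 0 or den_b == 0:
        return 0.0
    return num / (math.sqrt(den_a) * math.sqrt(den_b))


# Hypothetical ratings, for illustration only.
sample = {
    "u1": {"a": 5, "b": 3, "c": 1},
    "u2": {"a": 4, "b": 2, "c": 3},
}
print(cosine_similarity([1, 2], [2, 4]))
print(adjusted_cosine(sample, "a", "b"))
```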

- 7. An example of computing the cosine similarity of annotations
  - To calculate the cosine similarity between two texts $t_1$ and $t_2$, they are first transformed into vectors, as shown in the table.
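The table from slide 7 did not survive extraction, so the sketch below uses two made-up texts to show the general procedure: build a shared vocabulary, turn each text into a term-count vector over it, then take the cosine. The texts, the function names, and the whitespace tokenization are all assumptions.

```python
import math
from collections import Counter


def to_vectors(t1, t2):
    """Map two texts onto term-count vectors over their shared, sorted vocabulary."""
    c1 = Counter(t1.lower().split())
    c2 = Counter(t2.lower().split())
    vocabulary = sorted(set(c1) | set(c2))
    # Counter returns 0 for terms a text does not contain.
    return vocabulary, [c1[w] for w in vocabulary], [c2[w] for w in vocabulary]


def cosine(v1, v2):
    """Cosine similarity of two equal-length count vectors."""
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(y * y for y in v2))
    return dot / (n1 * n2) if n1 and n2 else 0.0


# Hypothetical texts, not the ones from the original slide.
t1 = "detective fiction set in new york"
t2 = "historical detective fiction"

vocabulary, v1, v2 = to_vectors(t1, t2)
similarity = cosine(v1, v2)
print(vocabulary)
print(v1, v2, round(similarity, 4))
```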

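- 9. Calculation of probabilities in a simplistic approach

  | | Item1 | Item2 | Item3 | Item4 | Item5 |
  |---|---|---|---|---|---|
  | Alice | 1 | 3 | 3 | 2 | ? |
  | User1 | 2 | 4 | 2 | 2 | 4 |
  | User2 | 1 | 3 | 3 | 5 | 1 |
  | User3 | 4 | 5 | 2 | 3 | 3 |
  | User4 | 1 | 1 | 5 | 2 | 1 |

  X = (Item1 = 1, Item2 = 3, Item3 = …)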
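The slide does not spell out the calculation, so the following is only one plausible reading: a naive Bayes estimate in which Alice's known ratings X are treated as conditionally independent given each candidate value of Item5, with probabilities taken as raw frequencies from the table (no smoothing). The function names and the choice of naive Bayes are assumptions.

```python
# Ratings of the other users, copied from the table on slide 9.
ratings = {
    "User1": {"Item1": 2, "Item2": 4, "Item3": 2, "Item4": 2, "Item5": 4},
    "User2": {"Item1": 1, "Item2": 3, "Item3": 3, "Item4": 5, "Item5": 1},
    "User3": {"Item1": 4, "Item2": 5, "Item3": 2, "Item4": 3, "Item5": 3},
    "User4": {"Item1": 1, "Item2": 1, "Item3": 5, "Item4": 2, "Item5": 1},
}
# Alice's known ratings (the observation X).
alice = {"Item1": 1, "Item2": 3, "Item3": 3, "Item4": 2}


def likelihood(ratings, observed, target, value):
    """P(X | target = value) as a product of per-item frequencies (naive assumption)."""
    matching = [r for r in ratings.values() if r[target] == value]
    if not matching:
        return 0.0
    prob = 1.0
    for item, rating in observed.items():
        prob *= sum(1 for r in matching if r[item] == rating) / len(matching)
    return prob


def prior(ratings, target, value):
    """P(target = value) as a relative frequency."""
    return sum(1 for r in ratings.values() if r[target] == value) / len(ratings)


# Unnormalized posterior score for each possible rating of Item5.
scores = {
    value: likelihood(ratings, alice, "Item5", value) * prior(ratings, "Item5", value)
    for value in range(1, 6)
}
best = max(scores, key=scores.get)
print(scores, best)
```

With so few users, most candidate values receive a zero score; practical implementations usually add smoothing to avoid this.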

- 10. Slope One predictors
  - The idea of Slope One predictors is simple: it is based on a popularity differential between items for users.
  - Example:

    | | Item1 | Item5 |
    |---|---|---|
    | Alice | 2 | ? |
    | User1 | 1 | 2 |

    $p(Alice, Item5) = 2 + (2 - 1) = 3$
  - Basic scheme: take the average of these differences of the co-ratings to make the prediction.
  - In general: find a function of the form $f(x) = x + b$.
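The basic scheme on slide 10 can be sketched as follows, using the two-user example from the slide. This is the simple unweighted variant; the function name `slope_one_predict` and the nested-dict data layout are hypothetical.

```python
def slope_one_predict(ratings, user, target):
    """Basic (unweighted) Slope One prediction of `target` for `user`.

    For every item the user has rated, compute the average difference
    (target rating - item rating) over other users who rated both, add it to
    the user's own rating of that item, and average the resulting estimates.
    """
    estimates = []
    for item, rating in ratings[user].items():
        if item == target:
            continue
        diffs = [
            r[target] - r[item]
            for other, r in ratings.items()
            if other != user and target in r and item in r
        ]
        if diffs:
            estimates.append(rating + sum(diffs) / len(diffs))
    if not estimates:
        return None  # no co-ratings available
    return sum(estimates) / len(estimates)


# Data from the slide: Alice rated Item1 = 2; User1 rated Item1 = 1 and Item5 = 2.
ratings = {
    "Alice": {"Item1": 2},
    "User1": {"Item1": 1, "Item5": 2},
}
prediction = slope_one_predict(ratings, "Alice", "Item5")
print(prediction)  # 2 + (2 - 1) = 3.0
```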

- 11. Linear classifiers
  - Most learning methods aim to find the coefficients of a linear model.
  - A simplified classifier with only two dimensions can be represented by a line.

    [Figure: a line separating Relevant from Nonrelevant documents]
  - The line has the form $w_1 x_1 + w_2 x_2 = b$
    - $x_1$ and $x_2$ correspond to the vector representation of a document (using e.g. TF-IDF weights)
    - $w_1$, $w_2$ and $b$ are parameters to be learned
    - a document is classified by checking whether $w_1 x_1 + w_2 x_2 > b$
  - In n-dimensional space the classification function is $w^T x = b$
  - Other linear classifiers: Naive Bayes classifier, Rocchio method, Widrow-Hoff algorithm, support vector machines
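The decision rule $w^T x > b$ from slide 11 amounts to a dot product and a comparison. The sketch below shows only the classification step; the weights and threshold are made-up values, not learned parameters, and the function name `is_relevant` is hypothetical.

```python
def is_relevant(weights, x, b):
    """Linear decision rule: classify as relevant when w^T x > b."""
    return sum(w * xi for w, xi in zip(weights, x)) > b


# Hypothetical parameters; in practice w and b are learned from labeled documents.
weights = (0.8, 0.4)
threshold = 0.5

# Two documents represented by two (e.g. TF-IDF) feature values each.
doc_a = (0.9, 0.1)   # 0.8 * 0.9 + 0.4 * 0.1 is about 0.76, above the threshold
doc_b = (0.1, 0.2)   # 0.8 * 0.1 + 0.4 * 0.2 is about 0.16, below the threshold

print(is_relevant(weights, doc_a, threshold))
print(is_relevant(weights, doc_b, threshold))
```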

- 12. Metrics: measuring the error rate
  - Mean Absolute Error (MAE) computes the deviation between predicted ratings and actual ratings.
  - Root Mean Square Error (RMSE) is similar to MAE, but places more emphasis on larger deviations.
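Both error metrics from slide 12 are short enough to write out directly. The function names and the sample ratings below are illustrative assumptions.

```python
import math


def mae(predicted, actual):
    """Mean Absolute Error: average of |predicted - actual|."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


def rmse(predicted, actual):
    """Root Mean Square Error: square root of the average squared deviation."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(predicted))


# Hypothetical predictions vs. actual ratings; the last one is off by 3.
predicted = [3, 4, 2, 5]
actual = [4, 4, 1, 2]

print(mae(predicted, actual))
print(rmse(predicted, actual))
```

Because RMSE squares each deviation before averaging, the single large miss pushes it above the MAE for the same predictions.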

- 13. Next …
  - Hybrid recommendation systems
  - More theories
    - Boolean and vector space retrieval models
    - Clustering
    - Data mining
    - And so on