Testing Trapeze, April 2014

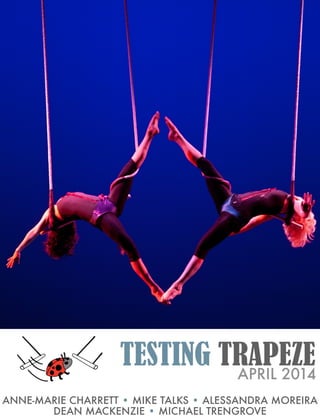

TESTING TRAPEZE, APRIL 2014

ANNE-MARIE CHARRETT • MIKE TALKS • ALESSANDRA MOREIRA • DEAN MACKENZIE • MICHAEL TRENGROVE

CONTENTS

• Editorial, Katrina Clokie
• How Did You Miss That? Blind Spots That Side Swipe Testers, Dean Mackenzie
• Testing Gravity, Mike Talks
• Encouraging Curiosity, Alessandra Moreira
• Where to for the Future of Software Testing?, Michael Trengrove
• Teaching Critical Thinking, Anne-Marie Charrett

EDITORIAL
KATRINA CLOKIE, WELLINGTON, NEW ZEALAND
TESTING TRAPEZE | APRIL 2014

Thank you for the wonderful response to our first issue of Testing Trapeze. It was exciting to see the magazine distributed through testing networks internationally, to have our efforts seen by so many, and to receive plenty of positive comments about what we had assembled.

We learned a lot from the first issue and hope to resolve some of the problems people experienced with our PDF format. Please let us know if you spot something funny as you read; we would like to offer the same reading experience to everyone.

In the following pages we have some amazing articles from another talented batch of testers: Anne-Marie Charrett, Mike Talks, Dean Mackenzie, Michael Trengrove and Alessandra Moreira. It’s a privilege to have contributors of such high calibre; their words make our magazine.

Katrina Clokie is a tester from Wellington, New Zealand. She is co-founder and organiser of WeTest Workshops, a regular participant of KWST, and the editor of Testing Trapeze. She blogs and tweets about testing.

HOW DID YOU MISS THAT? BLIND SPOTS THAT SIDE SWIPE TESTERS
DEAN MACKENZIE, BRISBANE, AUSTRALIA

In a recent project for a mobile application, I was testing a newly added piece of payment-related functionality. I explored the feature, guided by a list of test ideas gathered from earlier investigation, and duly noted bugs where I found them. Satisfied, I moved on to other work, assigning a few final checks to a less experienced associate.

To my surprise and dismay, he soon returned to report he’d found a bug in the functionality I had looked at. It turned out that accessing the payments page from a certain other page triggered a problem. I had been well aware of the other page, but had simply failed to account for it in my testing.

Amid the irritated swirl of my first thoughts, one question stood out: how did I miss that? It wasn’t a long-sequence bug or a particularly complex problem, yet it had eluded my seemingly careful investigation and exploration.

When exploring an application, the number of tests we could perform is almost infinite (though the number of useful tests is somewhat lower). In such circumstances, the scope for mistakes, omissions and oversights is correspondingly high, and they can affect testing in a number of ways:
• Quality criteria for the product, such as performance or reliability, might be ignored completely (for a list of potential criteria, see Bach’s Heuristic Test Strategy Model or Edgren’s The Little Black Book on Test Design).
• The quality of the tests conducted may be compromised, in terms of how effectively they explore or evaluate the product and find problems.
• The information gathered from testing, such as bugs, questions and new test ideas, may prove misleading and ultimately useless.

There is an almost endless list of factors that can negatively influence our testing. The following are some of the traps I’ve encountered throughout my testing journey.

Obsession with Function

In my days as an enthusiastic but uneducated acceptance tester, testing began and ended with uninspired inspections of the UI. This flawed outlook stayed with me until my first “real” testing position, where its fundamental flaws soon became apparent as new and unrealised types of bugs slipped into production. Since then, I’ve seen a lot of other testers fall into the same narrow view of testing, and many don’t seem to realise it.

A “fascination with functional correctness”, as Michael Bolton puts it, is a major pitfall that many testers fall headlong into. Function is the easiest and most apparent aspect of most software products, and so provides testers with an obvious focus. Unfortunately, that focus can become myopic and exclude other (equally important) aspects of the software, leaving entire categories of test ideas and quality criteria unexamined and important problems undetected.

This monomania is often exaggerated by requirements that fixate solely on the functional aspects of the software and only implicitly touch on other areas that require investigation. While astute testers may pick up on these clues (and a growing number actively work on discovering software needs beyond the requirements), most simply fail to notice the many missed opportunities in their focus on functionality.

Not Stopping to Think

Early in my career, I was an advocate of the “get in there and test!” approach. The bigger the test effort required, the lighter I went on planning, such was my eagerness to get started. Planning was largely a waste of time, or so I thought (and where planning simply means completing a template, I still do).
It took a year, a few setbacks with my test projects and an awkward conversation or two with a manager before I started to spend time on planning and preparation. I still occasionally have to fight the urge to “just get going”.

In her book Blind Spots, Madeleine Van Hecke points out that we often fail to recognise “thinking opportunities”; that is, we simply don’t realise that we should spend a moment thinking about what we’re about to do. She goes on to describe several reasons our minds can shut down, among them:
• Emotional Stress: when deadlines or a crisis loom (and testing is a prime candidate for such events), thinking often flies out the window in the panic of an immediate response.
• Information Overload: when we receive too much information, our minds are limited by what we can process, and so we miss some of the thinking opportunities that accompany it.
• Everyday Life: routine can immerse us in our activities to the point where we’re doing without thinking.

Each reason is distinct, but each can lead to the same result: unfocused testing driven by ad hoc decisions. Without thinking, our testing efforts are compromised before we begin, and no amount of enthusiasm or can-do attitude can compensate for that handicap.

Not Noticing Something

Ever missed something obvious when testing? I have. The most recent occasion was when I compared the UI layout of an application against a mock screenshot. I completely failed to notice the absence of some text at the bottom of a particular screen, and important disclaimer text at that.

In testing, it is very easy to fail to notice things. It could even be argued that it’s not a tester’s job to notice every minute change that occurs in software throughout development, but rather to notice changes that pose (or could pose) a problem. In that sense, training our observation skills or using automation may improve our ability to detect differences or problems.

However, our ability to “ignore the familiar” extends beyond external observation. We can also fail to notice what we are thinking about. And in software testing, that’s a more serious problem.
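Automation was mentioned above as one way to catch differences like the missing disclaimer. The sketch below is a hypothetical illustration, not the author’s approach. It assumes the expected text (from the mock) and the actual on-screen text have already been captured as lists of lines, for example by whatever UI automation tool a team uses. It then uses Python’s standard-library `difflib` to list lines that appear in the mock but not on the screen. The screen contents and the `missing_lines` helper are invented for the example.

```python
import difflib


def missing_lines(expected: list[str], actual: list[str]) -> list[str]:
    """Return lines present in the expected (mock) text but absent from the actual screen text."""
    diff = difflib.ndiff(expected, actual)
    # ndiff prefixes lines that appear only in the first sequence with "- ".
    return [line[2:] for line in diff if line.startswith("- ")]


# Hypothetical screen text; in practice this might be captured by a UI automation tool.
mock_screen = [
    "Confirm payment",
    "Amount: $20.00",
    "Pay now",
    "Payments are non-refundable once processed.",
]
app_screen = [
    "Confirm payment",
    "Amount: $20.00",
    "Pay now",
]

print(missing_lines(mock_screen, app_screen))
```

`ndiff` reports a changed line as a removal plus an addition, so reworded text is flagged as well as missing text. A check like this only catches the differences you thought to capture. It supports observation rather than replacing it.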

Not noticing our thinking isn’t necessarily the same as not stopping to think: we can be thinking without realising what we’re thinking. Our routine use of well-established thinking patterns, influenced by various beliefs and attitudes, can tick along without us ever noticing them, let alone stopping to critique them. That’s not necessarily a problem, unless those beliefs are ineffectual, outdated or flat-out wrong.

Using test scripts can accentuate this particular blind spot, in both the external and internal senses. Focused entirely on the test steps, testers become more interested in verifying that the software meets whatever narrow prescription has been codified in the test case than in observing the software as an entire system (and its resulting behaviours). Their adherence to the script also “railroads” their minds into thinking that the test case represents “testing” a feature in its entirety, sapping creative options and the potential for much higher quality work.

Confirmation Bias

The first time I had to test software without requirements, it felt like a minor disaster! How could we possibly test this effectively? The client was being unreasonable! A handful of screenshots and a couple of meetings to discuss the application, and that’s all we were going to get?

Requirements make testing straightforward. Or so it seems. They provide an apparently authoritative list of things a tester must cover. Work your way through each requirement, confirm the software meets each one, and your testing appears reasonably thorough.

But beyond the functional focus this approach encourages, only verifying that requirements have been met can lead to a passive, confirmatory attitude in testing: in other words, a confirmation bias. Testers then often end up merely confirming what the requirements lay out, using a weak array of positive tests.
And while proving that the happy path works has some value, it is only one spanner in the toolbox a tester should bring to evaluating software. Negative tests, critical thinking (about both the requirements and the software) and an exploratory attitude can all potentially yield far more information than the simple validation exercises many testers employ.

Using an arsenal of testing techniques can push past the boundaries implicitly set by requirements, exposing new and unexpected problems in the software. Confirmation bias is just one of many cognitive biases that can have a significant effect on how a tester performs.

Unquestioning Trust

Like most testers, I’ve worked with many developers: some have been error-prone, others have consistently produced first-class work. I’ll listen to their explanations and advice respectfully. I’ll ask questions. I’ll usually jot down notes. And I’ll also remain a sceptic, determined to find the truth, the whole truth and nothing but the truth through investigation of the product itself.

Of course, trust isn’t necessarily a trap, pitfall or blind spot. It’s a critical ingredient in building relationships within a development or project team. But in the realm of testing, where critical questioning and evaluation are core activities, placing unswerving trust in requirements documentation or the words of a fellow team member can be a significant hazard.

The specification or requirements document is not the product under test. Similarly, the developer’s explanation of a feature or expected behaviour is not the product. In fact, even the apparent behaviour or performance of the product may not reveal “what lies beneath”.

Yet I’ve seen testers refer to requirements as if they were an unquestionable oracle, or take a developer’s word as an unbreakable oath. In many cases, they were sorely disappointed when things they read or heard turned out to be less than 100% reliable.

At best, not asking questions can limit your testing’s effectiveness, increasing the risk of missing potential problems or other noteworthy discoveries. At worst, it can completely misguide your testing efforts, raising false flags or communicating incorrect information (and a host of related assumptions) that disrupt development.
Treat every information source you can find as potentially useful. But don’t believe everything you read, see or hear.

Testing without Context

In my early days as a tester, I was an ardent collector of generic test ideas, techniques and thoughts, all neatly arranged in a series of lists. It seemed an excellent way of capturing a host of tips and tricks that I could apply at a glance. But I soon found that it was merely a bunch of words, completely free of context and, as a result, of limited use whenever I turned to them with a specific situation in mind.

Applying a raft of cookie-cutter methods without first thinking about the nuances of the situation can be an ineffective and even dangerous shortcut. Few testing scenarios are the same, and even similar ones will have characteristics that rule out the thoughtless application of any particular technique.

This isn’t to say that every single aspect of every test project is context-specific. Michael Bolton’s post on context-free test questions is an excellent re-usable resource, as are the quality criteria mentioned earlier. Nor is it a bad idea to build up a toolkit of testing techniques, ideas and heuristics. However, knowing WHEN to use these tools is as much a skill, and just as important, as knowing HOW to use them. As the paraphrased cliché goes, context is king.

These are only a small sample of the myriad mental traps awaiting unsuspecting software testers. There are many more out there ready to pounce, and I encourage you to actively look for them as you go about your work.

On a final note, these are only intended as potential causes; while they may help explain poor testing, they should not be used to excuse it. By categorising some of these blind spots, I hope to help anyone involved in software development (testers or otherwise) stay on their guard against them.
Awareness of the pitfalls that may derail testing won’t necessarily win the war against them, but as a certain children’s toy from the 1980s and 90s so sagely put it, “knowing is half the battle”.

Dean Mackenzie is a software tester living in overly sunny Brisbane, Australia. He fell into testing around seven years ago and discovered the context-driven school several years later. Dean aims to play an increasing part in the context-driven testing community in Australia, especially in encouraging others to re-examine traditional attitudes towards software testing. He enjoys studying new ideas (especially those around testing or learning) and finds there just isn’t enough time to look at all of the good ones. Dean blogs intermittently at Yes, Broken... and can be found as @deanamackenzie on Twitter.

TESTING CIRCUS

Testing Circus is one of the world’s leading English-language magazines for software testers and test enthusiasts. Published regularly since September 2010, it has established itself as a platform for knowledge sharing in the software testing profession. Testing Circus is read by thousands of software testers worldwide. A free monthly subscription is available at the Testing Circus website.

JOIN THE CIRCUS ONLINE:
www.testingcircus.com
facebook.com/testingcircus
twitter.com/testingcircus

TESTING GRAVITY
MIKE TALKS, WELLINGTON, NEW ZEALAND

As part of the Wellington WeTest Weekend Workshop back in November, Dave Robinson and I ran an exercise called ‘Testing Gravity’. The idea traces its roots back to when I worked as a science teacher in the 90s.

Most people remember learning science as an accumulation of facts: the Earth moves around the Sun (although that one is a relatively new fact), the Earth is round, and so on. But being good at science is more than accumulating knowledge and being able to churn it back out.

As a science teacher, I had not only a guide to the levels of knowledge we were to instil in our students, but also a guide for developing their experimentation skills. This, as you can imagine, was much harder to instil than the retention of facts; there is no “right” way to do it. This skill set includes developing students’ intuition:
• Given a question about nature, how can I design an experiment that will help investigate it?
• How can I collect data on my experiment?
• Given the results I collect, how can I use them either to refine and modify my experimental techniques, or to ask new questions (and hence try new experiments)?

The heart of the scientific method, then, is very close to that of good testing. ‘Testing Gravity’ was designed to take a group of testers back to the classroom, look at how they approached problems there, and ask whether they could carry this approach from the science classroom back to the testing office...

The Task

At the beginning of the workshop, I gave the attendees this brief, acting as the business owner:

“I’m actually a bit older than I seem. I was one of the architects of the Big Bang, which I can assure you was the original startup! Originally we started the Universe with a single unified force, but almost immediately we realized it wasn’t good enough. So I was put in charge of designing and delivering a special force we called ‘object attraction 2.0’, which you probably know by the brand name it was given in the 17th century: ‘gravity’.

To be honest, we didn’t document what we were doing; we just fixed things on the fly. And obviously some time has gone by. If pressed, I would sum up ‘object attraction 2.0’ as ‘how objects fall’. What I do remember about the project, though, is this:
• An object will always fall downwards towards a large object like the Earth, never upwards.
• The heavier an object is, the more force it feels from the Earth.
• So heavier objects must fall faster than lighter ones.
• Two objects of the same weight will always fall at the same speed.

Obviously some time has passed, and the Universe is a lot bigger and a different shape than it used to be. I need you over the next 30 minutes to show me what you can find out about gravity, what it does, and anything surprising according to my definition that I need to be aware of.”

At this point my partner in crime, Dave (who was acting as manager of the testing project), reminded everyone that we weren’t after theory or conjecture; we were after proof of what they could find out about gravity.

We split the attendees into teams and asked them to start their test planning, putting in front of them the equipment they had to use, which included:
• Balls of different densities, weights, materials and sizes
• Sheets of paper
• Army men with parachutes
• Stopwatches
• Measuring tape
• Kitchen scales

Are these the tools you would choose to test gravity?

The workshop was actually filled with a number of subtle traps, which were a major point of the exercise. Sure enough, when we moved from our “planning stage” to our “testing phase”, most people planned to use the scales to weigh each object, the measuring tape to ensure they were dropping from a consistent height, and the stopwatch to measure the time to fall. Most of the teams also deduced it would be more scientific to add an element of repeatability to their tests, and so planned to measure each object three times. Bravo!

As soon as testing began, though, some of the traps started to snap...

Trap 1: The Scales

The kitchen scales were designed to measure weights for baking. The objects in front of the teams were significantly lighter than a 100g portion of flour for a cake. The needle barely budged.

This is a trap many scientists and testers fall into: if a piece of equipment is put in front of you, you feel obliged to use it as part of your solution. Its very presence makes people feel it has to be part of their plan.

Trap 2: The Stopwatch

When you drop an object from shoulder height, it takes so little time to fall that the stopwatch user barely has time to click start and stop. Repeated measurements of the same object were all over the place, because human reaction error was significant next to the time the object took to fall. So the stopwatch was useless unless you increased the height you dropped from (but the ceiling would indeed put a ceiling on that).

What’s going on? Measuring the weight, the height, and the time an object takes to fall were brilliant ideas. It’s just that the tools provided weren’t up to the job.

At this point, our participants’ careful test plans were in shreds. Within the first few minutes, all that pre-work had gone up in smoke as one problem after another invalidated the planning. So did anyone shrink back to their desk in a sulk? Or keep trying to make their plan work in spite of the results they encountered?

I’m pleased to say no one gave up; the failures of experimentation led people to try new things. A common approach was quite simple: take two different objects, drop them both at the same time from the same height, see which one lands first, and use this comparison to draw up a hierarchy of which objects fall fastest.

Many teams brought me over to repeat experiments they’d run. Some savvy teams had filmed with a smartphone, and were able to show me any experiment I wanted onscreen.
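A little arithmetic shows how big the stopwatch problem is. The sketch below is a minimal illustration and was not part of the original exercise. It assumes each object is dropped from rest and ignores air resistance, so the fall time is t = sqrt(2h/g). It also uses assumed values: 1.5 m for shoulder height, 2.4 m for the ceiling, and a rough 0.2 s spread in how consistently a person starts and stops a stopwatch. Note that mass does not appear anywhere in the formula.

```python
import math

G = 9.81  # gravitational acceleration near the Earth's surface, in m/s^2


def fall_time(height_m: float, g: float = G) -> float:
    """Time in seconds for an object dropped from rest to fall height_m metres.

    Air resistance is ignored. From h = g * t**2 / 2 it follows that
    t = sqrt(2 * h / g); the object's mass does not appear.
    """
    return math.sqrt(2 * height_m / g)


# Assumed values for illustration only: a rough spread in human stopwatch timing,
# plus shoulder-height and ceiling-height drops.
REACTION_SPREAD_S = 0.2

for label, h in [("shoulder (1.5 m)", 1.5), ("ceiling (2.4 m)", 2.4)]:
    t = fall_time(h)
    print(f"{label}: fall time {t:.2f} s, relative timing error roughly {REACTION_SPREAD_S / t:.0%}")
```

From shoulder height the fall takes a little over half a second, so a couple of tenths of a second of reaction error is a large share of each measurement. Even a ceiling-height drop only stretches the fall to about 0.7 s. That is why dropping two objects together, or filming the drops, gave the teams more trustworthy comparisons than timing single drops by hand.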
Things people had worked out included:
• An object’s fall was not affected by its colour: two similar objects of different colours fell in the same time. (This is one of the great things about exercises like this. I’m educated to university level in physics, and have run this as a teacher a dozen times, but it never occurred to me to test colour, and it was a great observation to make.)
• The shape of an object affected “gravity”: a piece of paper scrunched up fell differently from a piece of paper that fell as a sheet. And this was important. I, the business owner, had defined “gravity” as “how something falls”, so it was worth bringing to my attention.
• Despite my insistence at the start (this was Trap 3) and the proof of a hundred Road Runner cartoons, heavier objects did not fall faster. Many people raised this, but WeTest regular Joshua Raine put it most eloquently when he summed up: “I have two objects. One is many times the weight of the other, which means the effect of gravity on the heavier object should be many factors greater, and yet I’m seeing no noticeable difference in time to fall.”

More than once I threw a mock tantrum with a team. I’d watch an object hit the ground, see the ball stop moving down and start moving up, and scream “Defect!”. One team pointed out that the ball did eventually settle on the ground, and that the same effect appeared when you threw the ball horizontally at a wall. The bounce therefore wasn’t a property of gravity alone (which, remember, we had defined as falling).

The workshop (indeed the whole WeTest event beyond this one exercise) was a huge success. Testing, like science, is something we can read a lot about, yet we learn the most from doing and trying things out. A key part of our session was learning to make mistakes in planning and then revise the plan. Both the Context-Driven and Agile schools embrace this. Context-Driven testers will quote “Projects unfold over time in ways that are often not predictable”, and in a similar vein Agilistas talk about “Responding to change over following a plan”.
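Joshua Raine’s observation about Trap 3 can also be checked on paper. The derivation below is a short sketch using Newton’s second law. It assumes air resistance is negligible, which is reasonable for the compact balls the teams dropped. The brief was right that a heavier object feels a larger gravitational force. However, that object also needs proportionally more force to accelerate, so the mass cancels:

$$F = mg, \qquad a = \frac{F}{m} = \frac{mg}{m} = g$$

The time to fall a height h from rest is then

$$h = \tfrac{1}{2} g t^{2} \quad\Longrightarrow\quad t = \sqrt{\frac{2h}{g}}$$

Neither expression contains the mass m. Air resistance, which these equations leave out, does depend on an object’s shape and size. That fits the teams’ finding that scrunched-up and flat sheets of paper fell differently.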
Learning to adapt is a key part of being a tester. You look at the challenge in front of you and, if your methods and tools aren’t working, you adapt, discard and make new ones as you need to. The testers in the workshop had to explain to me why they changed their approach. I was playing a somewhat surly individual, and as someone who’s been to drama school, I hate to break character. But they did well, and they each found their own voice in explaining, which was again part of the object of the exercise.

Let’s go back to my teacher training. The following describes one of the higher achievement levels in “an understanding of scientific thinking”. It needs only a couple of word changes: “pupils” becomes “testers”, and “teacher” becomes “peers”. With those changes it becomes a great and powerful statement, and one that I feel we should be challenging ourselves with every day of our testing lives:

“[Testers] recognise that different strategies are required to investigate different kinds of questions, and use knowledge and understanding to select an appropriate strategy. In consultation with their [peers] they adapt their approach to practical work to control risk. They record data that are relevant and sufficiently detailed, and choose methods that will obtain these data with the precision and reliability needed. They analyse data and begin to explain, and allow for, anomalies. They carry out multi-step calculations and use compound measures appropriately. They communicate findings and arguments, showing awareness of a range of views. They evaluate evidence critically and suggest how inadequacies can be remedied.”

Mike Talks grew up with a love and passion for science and experimentation, which included an interest in taking his toys apart to see how they worked. So it’s kind of natural he’s ended up in a career as a software tester. He works at Datacom as a test manager.

OZWST
14TH & 15TH JUNE 2014, BRISBANE, AUSTRALIA

OZWST (Australian Workshop on Software Testing) is happening for the third time in 2014! James Bach will be joining us in Brisbane as Content Owner, along with Richard Robinson as Facilitator.

OZWST was started in 2012 by David Greenlees in Adelaide, with Anne-Marie Charrett as Content Owner and Scott Barber as Facilitator. In 2013 the workshop moved to Sydney, with Rob Sabourin as Content Owner and David Greenlees as Facilitator. This year, we go to Brisbane on the 14th and 15th of June with the theme ‘Experiences in Acquiring a Sense of Risk’.

OZWST is an invite-only peer conference modelled on the Los Altos Workshop on Software Testing format. For more information on this style of peer conference, and on OZWST itself, please visit the website.

ENCOURAGING CURIOSITY
ALESSANDRA MOREIRA, FLORIDA, USA

curiosity | ˌkyo͝orēˈäsitē | noun (pl. curiosities)
1. a strong desire to know or learn something

Curiosity is a normal emotion and behaviour in humans. As adults, how we respond to our inquisitive nature differs greatly in degree and can be influenced by a number of factors. Many things can affect how we react to curiosity: our upbringing, the culture we are raised in, the type of education system we go through, and our personal experiences and exposure.

In his book Creativity: Flow and the Psychology of Discovery and Invention, the psychologist Mihaly Csikszentmihalyi explains that we are born with two contradictory sets of instructions. One is a conservative tendency, which includes instincts for self-preservation and saving energy. The other is a comprehensive tendency made up of instincts for exploring and for enjoying novelty and risk. The curiosity that leads to creativity belongs to this second set. The conservative tendency requires little encouragement or support from outside to motivate behaviour, but instincts such as curiosity can wilt if not cultivated.

As testers we should actively nurture curiosity and our thirst for knowledge. This is not a new idea. In 1961 Jerry Weinberg wrote that to test better “we must struggle to develop a suspicious nature as well as a lively imagination” (Computer Programming Fundamentals).

“Millions saw the apple fall, but Newton was the one who asked why.” (Bernard Baruch)

Have you ever wondered how many people saw an apple fall before Newton, and why he was the first to ask that very question: why? One of the many roles of a tester is to join the dots. That means asking the questions that no one else thinks of asking, exploring the unknown, and bringing to light the dark areas that have fallen through the cracks of documentation and communication.

Testers are constantly learning new systems, gathering information about them and reporting what they find. Curiosity can be one of the guides and inspirations for exploration. It has the potential to make a great tester out of an average one, since a single curious thought can give rise to a number of other insights. We can stimulate our curiosity in systematic ways, and we can learn to trust it to guide us towards new knowledge.

“How can we get better at being curious?” I hear you asking. Here are some ways that have worked for me.

Focus Attentional Resources

Often, the more we know about something, the more we want to know; it is just our nature. By directing our attention to an object, a system or anything else of interest, we can become more curious about it. Take rocks, for example: they don’t seem too interesting until you develop an interest and start collecting them. Soon you will find out about different types of rocks, classes of minerals, where to find them, and so on. If there are that many things to be curious about when it comes to rocks, imagine how much more you could discover about the system you are testing.

Test charters are a great way to focus attentional resources. A good test charter provides inspiration and focus areas without dictating specific steps, leaving room for curiosity to lead without being too broad. As soon as we find something interesting and start directing attention and interest towards it, our curious nature kicks in and wants to know more. The Rule of Three is another way to direct attentional resources to any given subject.

The Rule of Three

Jerry Weinberg’s Rule of Three: “If you can’t think of at least three different meanings for what you observed, you haven’t thought enough about it.”

The beauty of the Rule of Three is that it can be used almost anywhere. It forces us to think harder and to direct more attentional resources to a problem instead of stopping at one explanation. It sparks curiosity.

Next time you find a bug, think of at least three possible ways it could be recreated. If you use test charters, ask yourself how you could approach the area under test in at least three different ways. If you pair test with developers, try asking for at least three paths the data could take. If stakeholders don’t seem to understand why testing takes so long, ask yourself what at least three possible reasons could be.

Getting to at least three options is important because one option is a trap, two is a dilemma, and three breaks logjam thinking. When you make the Rule of Three a habit, you will find yourself inspired to ask more questions. It is not always easy to come up with three options, but often when I do, other ideas start flooding in.

Keep Asking Questions

Becoming a tester is easy; the hard thing is being good at it. Some bugs don’t require much mental effort to discover. But finding more complex bugs, those that are difficult to discover and reproduce, is a much harder task.

In his book Exploratory Software Testing, James Whittaker explains that almost anyone can learn to be a decent tester. Apply even a little common sense to input selection and you will find bugs. Testing at this level is a real fishing-in-a-barrel activity, enough to make anyone feel smart.

Becoming an exceptional tester takes a professional who treats testing as a craft that can be mastered. Such a tester is constantly learning from each test executed, from other testers, from literature, from the world around them, and from the community.
That is true exploratory testing: gathering information, analyzing it, reporting it and making better decisions each time the cycle goes around. Curiosity is vital to keep this cycle going.

- 21. Asking questions and seeking answers leads to new information, and in turn new information triggers curiosity and inspires us to ask more questions. Although there is such a thing as a stupid question, good information can come as much from intelligent questions as from stupid ones. Questions deemed stupid by some can lead to very insightful answers. As Kaner, Bach and Pettichord write in the classic book Lessons Learned in Software Testing, "The value of questions is not limited to those that are asked out loud. Any questions that occur to you may help provoke your own thoughts in directions that lead to vital insights. If you ever find yourself testing and realise that you have no questions about the product, take a break." During that break, check out Michael Bolton's blog post on Context-Free Questioning for Testing; there are enough questions there to trigger even the most bored mind! Yet another reason we should keep asking questions is that it helps us to better understand context. Our brain is a fine-tuned machine that uses very little energy to process large amounts of input. Hence it is really proficient at coming up with contexts of its own: to save energy, it will try to make sense of data, circumstances or anything else by creating context. If you don't ask questions about the context of your testing, chances are you will interpret what you see within your own context. The context you create might be correct, but most likely it is not. Questions such as 'why am I testing this?', 'who is my audience?' or 'what is the purpose of this test?' can help clarify the context for the people who matter. THE SAYING “CURIOSITY KILLED THE CAT” SUGGESTS THAT curiosity can get people in trouble. As with many good things in life, curiosity can have its drawbacks. Although curiosity is an indispensable behaviour for testers, in our quest for knowledge we can be perceived as intrusive and make others uncomfortable. 
We can find issues that rub people the wrong way, or that no one wants to consider. We may uncover problems which require others to make decisions they were not prepared to make. There are teams and work environments that do not foster the type of freedom a truly curious mind needs.

- 22. Testers are hired to do a job, and when they do it well they can face predicaments. Yet an ethical tester investigates and reports back, in spite of the potential danger of being seen as nosy, intrusive, or inconvenient. In the words of Arnold Edinborough: “Curiosity is the very basis of education, and if you tell me that curiosity killed the cat, I say only the cat died nobly.” Ale is a student of and advocate for the context-driven school of testing. She spent part of her career following test scripts day in and day out, so she appreciates the effectiveness and power of CDT. She started testing in 2000 in Sydney, Australia and has since worked in diverse industries and a variety of roles, from technical tester to test manager. Her experience working as a context-driven tester in highly scripted environments has given her a passion to ‘waken’ factory testers and to work on projects such as Weekend Testing Australia and New Zealand where, as the organizer and facilitator, she has the opportunity to help and empower other testers in their own journeys. Ale is @testchick on Twitter and blogs at Road Less Tested.

- 23. JOIN THE TESTING TRAPEZE COMMUNITY ONLINE Tell us what you think of this edition, check out the previous one, discuss and debate our articles, and discover a vibrant testing community: www.facebook.com/testingtrapeze www.twitter.com/testingtrapeze www.testingcircus.com/category/testing-trapeze WANT TO WRITE FOR TESTING TRAPEZE? We welcome contributions from testers in Australia and New Zealand. If you would like to write for Testing Trapeze, please contact the editor: testingtrapeze@testingcircus.com.

- 24. WHERE IS OUR PROFESSION OF SOFTWARE testing heading? Are we destined to create and follow test cases forever in organisations that would rather slap a Band-Aid on problems than tackle the root causes? Sadly, there seems to be a growing divide between companies that have managed to empower highly productive teams and those that seem to buckle under the weight of complexity and technical debt. All too often the latter companies never conduct a meaningful retrospective on the root issues, and we see testers being asked to pick up the pieces. On the other hand, in highly productive teams, the role of a software tester isn’t considered inferior to that of a developer or any other key role. On the contrary, testers are empowered and encouraged to bring their mindset and skills to the rest of the team and engage constructively from the outset. What does it take to bring change and elevate the concept of test-first within a development organisation? I wanted to find out, so I lined up meetings with two senior leaders in the Canterbury software sector. WALKING INTO THE ADSCALE OFFICE, I’M surprised to see a crowd of developers huddled around what looks to be a full bar and kitchen in the middle of the office. The smell of fried bacon, eggs and fresh coffee signals breakfast is in full swing. The scene is amazingly informal - everyone’s in jeans and t-shirts and someone has brought their pet dog to work. Adscale CEO Scott Noakes greets me and takes me to his ‘office’, a small desk in the middle of the large open-plan workspace. “It’s my last day,” he says, “all I have to do is clean my desk off and then I’m out of here.” Scott Noakes has 20 years’ experience in the tech industry and has been involved in everything from software development to product management and company leadership. For the past two years he’s been CEO of Adscale Laboratories, a development house in Christchurch that serves more than 300 million ads a day to German internet users. WHERE TO FOR THE FUTURE OF SOFTWARE TESTING?
MICHAEL TRENGROVE CHRISTCHURCH, NEW ZEALAND

- 25. Scott’s also CEO of a start-up company called Linewize and has recently resigned from his job at Adscale to focus more completely on the start-up. I start by asking Scott how he has seen testing evolve over the years. He says he’s always been in start-up companies where dedicated testers were a luxury they couldn't always afford. Developers had to write good code because if it broke they were the ones getting woken up in the middle of the night to deal with the problem. “And I really strongly believe that is the right way to do it,” Scott says. “Testing in a lot of its current forms is kind of a Band-Aid on a problem that has some fundamental causes behind it.” I ask Scott to explain what some of those causes are and he quickly replies that a lack of good processes and a lack of education are the most common causes of Band-Aid testing. “Often the process is completely rubbish - bad process produces consistently bad results.” Bad practices are another fundamental cause, Scott says. Testers are often brought in to put plasters on a development problem. They quantify it, measure it and try to stop defects from making their way to the final customer. Scott believes the traditional form of testing has approached the problem from the wrong direction: “If you really focus on getting your practices right, getting your processes right and getting that common agreement around what it is we’re building, and do that in a very agile and granular way, then the need for testing, in my opinion, can actually almost disappear - at least the need for traditional testing. I still believe there is a need for testing, but it’s in a very different form.” This totally resonates with me, as I’ve been part of both forms of testing in my career so far. 
In my first job I was tasked with manual testing and was challenged daily by the question of what value I was actually bringing to the project by clicking through screens following a test script. Coming from a farming background and with a rather practical bent to the way I like to do things, I couldn’t believe I was being paid so well to do such a menial task with so little perceived value coming from it.

- 26. My second job was the complete opposite: test automation enabling near-instant feedback on code changes. The test automation work enabled me to use my skill set to help shorten the feedback loop for the team when committing code changes. It helped break down the wall between developer and tester and helped the company deliver a quality product in a shorter time: win-win. Scott nods in agreement. He’s seen it all before. “Typical testing, to me, is developers develop something as fast as they can, they’re under way too much pressure, they don’t actually have the leeway to do a good job based on some arbitrary promise by a salesperson to the customer and if we don’t deliver it by this date we lose this many million dollars.” The result is a kind of ping-pong game between the two siloed camps of developers and testers, with each blaming the other while the project drags beyond deadlines. “Even in a Scrum team?” I ask. That all depends on whether Scrum is being done properly, Scott answers. A lot of organisations who claim to be Agile are still stuck in the old waterfall mentality. In truly Agile organisations testers are part of the development team and have a valued position within the team because of the perspective they bring. “The perfect tester is someone who is part product owner,” Scott says. “They really understand the problem we’re trying to solve, they have a greater end-to-end view of the world than some of the others in the team, and they have a wide understanding of the product. They’re there at the outset debating what is this functionality, really getting clear on what the satisfaction criteria is with the product owner or customer.” Testers could spend years testing a product, Scott says, but the market demands quick development, so testers need to prioritise their work in order of importance and make a call about how much risk they’re willing to live with. If the system fails, at least let it fail elegantly, he says. 
Engineer things so that each piece of functionality can be turned off without pulling down the whole system.

- 27. “This has been a really key learning of Adscale: it is actually impossible to test on a production-equivalent test framework; we can’t recreate the German internet!” Looking forward, Scott sees a different future for software testing. Speaking with a strong sense of conviction, he tells me something I never expected to hear from a tech CEO. “I have a very strong opinion that there is no place for manual testing in the industry whatsoever - manual being testers who are told to spend days following test scripts.” Scott goes on to say testers have historically come from a customer service background and often don’t have the technical skills to hold their own with the development team. If a tester is unable to automate their tests and give advice to the development team on architecture and design issues at an early stage, then they shouldn’t be there, Scott says. “It has to be automated. How on earth can you wait four days for someone to run through a manual test procedure? It’s a waste of time.” Scott believes there remains a place for exploratory testing, but the tester’s time needs to be used wisely. Furthermore, if a tester is part of a development team, the whole team generally picks up on the testing perspective and incorporates their learning at the design stage. So-called “hero programmers” are a major obstacle to this type of integrated teamwork, Scott says, and are as detrimental to a Scrum team as non-technical testers. “You need your testers helping the hero. A lot of what they’re writing can actually be destructive to the organisation because they’re producing stuff that no one else understands, maybe it’s obtuse, maybe it’s unmaintainable. This interaction with pair programming and test driven development is all designed to pull back towards a state where you have maintainable code that is understood by everyone in a domain that is understood by everyone.” Before I leave, I ask Scott if he thinks the role of test manager is still relevant. 
The old-school test manager role is dead, Scott says; however, there is a role for a test strategist who can provide overall direction and continually push for faster regression tests.

- 28. Scott walks me to the door and leaves me with a parting thought that seems to summarise his whole philosophy: “If developers were really craftsmen all the time, you’d get very few defects.” ACROSS TOWN I MEET WITH ORION HEALTH’S PRODUCT Development Director, Jan Behrens. Orion Health is one of New Zealand's fastest-growing software companies and has aggressively expanded around the globe in recent years. With key development offices in Christchurch, Auckland, Canberra, Bangkok and Arizona, there is a lot at stake if a practical, pragmatic approach to software development isn’t taken. Jan is a forthright German expat now helping lead over 100 staff in the Christchurch and Bangkok development centres. Before moving to Orion Health, Jan was the CTO of PayGlobal, a privately held company offering payroll and HR software for businesses. I ask him how he’s seen the role of testing change since he started in IT. Until three to five years ago, Jan says, testers were thought of as “glorified monkeys” who would go through a system clicking buttons and following a test script. “Essentially testers were just an executor of the system. It wasn’t perceived as a true profession. I think it was quite often - and still is in many places - just seen as an entry level position into an IT company.” The biggest change for testing over the past few years is that the role is now being seen as a professional one, on a par with that of software developers, Jan says. Whereas ten years ago you would never see a tester as part of a development team, now there has been a shift to cross-functional teams who take responsibility for delivering a product. 
“Today the biggest benefit of having test professionals embedded in cross-functional development teams is not that they are the ones doing all the testing but, similar to an architect or a business analyst or a UX designer, they have a particular set of skills that they help the whole team to apply.” The tester brings a certain mindset to the team - call it a rainy-day mindset - which understands how things break and pushes to mitigate these possibilities.

- 29. The tester’s role is to continually remind the team about the importance of testing early and the concept of holistic testing, Jan says. Looking forward, Jan says he sees the cross-functional trends continuing until the roles of developer and tester are blended into one, with testers writing code and programmers further developing a tester’s mindset. The different job descriptions of testers and developers will become less relevant as the focus shifts towards delivering something of value with the right kind of functional attributes, Jan says. “The individual roles are much less important to a company with this viewpoint, it’s much more about the sum of a team’s parts.” With this in mind, I repeat my question of whether the role of test manager is now dead. “In my personal opinion, yes it is,” Jan says. He goes on to say that the role of test manager as someone who organises and manages the process of testing is completely at odds with where the industry is going in terms of cross-functional teams. Having someone outside the team organise the process is just an oxymoron, he says. “What I do believe is that people who have the skills to coach and mentor others in the art of testing, they definitely have a future in this industry. I personally think that this idea of a test manager who manages a team of testers who are then distributed across other teams or work as a siloed unit, I think that's dead.” As I leave Orion Health, I feel somewhat overwhelmed by the lengths the company is going to in investing in its staff and in the process around the art of creating a quality software product. There was a great working atmosphere and it seemed like everyone was pretty stoked to be there - and who wouldn’t be? The office is incredible: a full kitchen stocked with food, sofas, bean bags everywhere, an Xbox and ping pong table, and lots of big open spaces to brainstorm ideas. 
There are even brightly coloured pods that you can crawl inside to take a nap or make a private phone call. Testers are treated as equals; they’re paid on a par with developers and have the same career progression road map available to them.

- 30. REFLECTING LATER ON MY TWO INTERVIEWS, I’M EXCITED FOR what the future holds. However, as with everything in technology, unless we continually adapt as test professionals we’ll become less and less relevant as teams look to improve their processes and practices. As professional testers we are moving away from being the ones responsible for finding defects to becoming more like guardians of the business and its development processes, constantly looking for ways to prevent bugs right at the beginning of the software development lifecycle when design decisions are being made. If you’re a recent graduate or someone looking at moving into professional software testing, it’s important to understand that all companies are on a journey and teams are continually finding better ways of doing things. You may not have the most exciting, progressive roles open to you immediately, but by continually learning and developing your own test techniques and understanding, you help the companies you work for to move forward. As software testers we have a powerful role to play in progressing software development practices, and being part of a team that’s tackling change can be one of the most rewarding things. Looking forward, I believe software companies will continue to peel back the Band-Aid testing mentality. It might be painful initially as transparency becomes the norm, but the future of our industry depends on it. For some testers who need to up-skill it will be uncomfortable and some will transition out, but the ones who are prepared to roll up their sleeves and embrace the new realities of software testing have a great career ahead of them. Michael Trengrove is a Test Consultant, Snowboard Instructor and ex-Sheep Farmer.
You can find him on Twitter @mdtrengrove.

- 31. NEXT TIME IN TESTING TRAPEZE AVAILABLE JUNE 15TH 2014 LEE HAWKINS MELBOURNE, AUSTRALIA OLIVER ERLEWEIN WELLINGTON, NEW ZEALAND LIZ RENTON ADELAIDE, AUSTRALIA NICOLA OWEN & ALICE CHU AUCKLAND & WELLINGTON, NEW ZEALAND PETE WALEN MICHIGAN, USA

- 32. CRITICAL THINKING IS INTEGRAL TO software testing. It can change our testing from weak exercises in confirmation bias to powerhouses of scientific investigation. We all think, but our thinking is often flawed. We're inherently biased; we make dangerous assumptions and jump to conclusions about the information we receive. As Marius in Anne Rice’s The Vampire Lestat says: “Very few beings really seek knowledge in this world. Mortal or immortal, few really ask. On the contrary, they try to wring from the unknown the answers they have already shaped in their own minds -- justifications, confirmations, forms of consolation without which they can't go on. To really ask is to open the door to the whirlwind. The answer may annihilate the question and the questioner.” - Peter A. Facione, Critical Thinking: What It Is and Why It Counts. Michael Bolton describes critical thinking as "thinking about thinking with the aim of not getting fooled". It’s not hard to see how we can get fooled in software testing. For example: • Developers tell us there are no bugs; • Product Owners tell us requirements are complete and as a tester we take that at face value; • Test managers tell us their process is best practice and we accept that without question; • A product works in one environment and we assume it works in all environments; • A product works with one set of data and we think it works for all sets of data; • A product works as described in the specification and we assume it works in all situations. TEACHING CRITICAL THINKING ANNE-MARIE CHARRETT SYDNEY, AUSTRALIA

- 33. We need to become more adept at challenging assumptions, questioning our premises, being deliberate in our reasoning, and understanding our biases. Critical thinking is “self-directed, self-disciplined, self-monitored, and self-corrective thinking” (The Critical Thinking Community). Critical thinking is tacit knowledge, which makes it hard to describe and hard to teach. So how do we teach our testers to think more critically? The coaching model that James Bach & I have developed is an exercise in critical thinking. A tester is given a task to perform. This task may be to test something, it may be a dilemma posed to the student, or it may be a specific task such as creating a bug report. The coach then works with the tester to explore the thinking behind the task in a Socratic way. Selecting the task is important. It needs to be sufficiently challenging and energizing for the tester. A fundamental part of a coaching session is to 'discover' where a student's energy lies (inspired by Jerry Weinberg’s idea of ‘follow the energy’). Through understanding and following a student's energy, a coaching session is more likely to leave a student inspired, self-motivated and encouraged to discover more. It’s part of a coach’s role to discover where a student’s energy is. We've performed hundreds of these coaching sessions and through doing so have identified patterns in both student and coaching behavior. We think these patterns may be useful in coaching. As a coach it can be hard to know how to navigate through a coaching session. What sort of questions should I be asking? How much should I push the tester in their task? How do I know where their energy is? WHAT FOLLOWS IS AN EXAMPLE OF PART OF A COACHING transcript with Simon. Simon is a ‘lone’ tester among 35 developers and has two years’ testing experience. English is not his first language. Simon wants to be a ‘professional tester’ but is not sure what that is. 
At the start of the following extract, I ask Simon “What is Software Testing?” This is a typical diagnostic task that I might offer a student I’m coaching for the first time. You can see I’m employing Socratic questioning in the coaching session, and it’s clear who is the coach and who is being coached.

- 34. Simon: In my point of view testing is trying out a system and his functions to see if it works as expected by somebody. There’s a lot to look at here. I chose to use a pattern I often employ: ‘drive to detail’. Driving to detail allows me to examine the tester’s thinking closely. Anne-Marie: what do you mean by "see if it works as expected"? Simon: checking against somebodies requirements: that can be a specification, User Stories, Acceptance criteria, prototype..... This is a typical answer where a tester refers to ‘requirements’ to know what’s expected. But what exactly does that mean? There are lots of ways to interpret this (something I have to keep reminding myself). For instance, it could be that he has a shallow understanding of oracles or maybe, as English isn’t his first language, it’s a vocabulary problem. Simon: I just read today the "Testing without a map" by MB [Michael Bolton] - so there he explains the ORACLE. Interesting. He’s made a point of indicating he has read this article. Why? Perhaps he found the article really interesting and wants to discuss it. Perhaps he sees this as related to what we are talking about. I like that he brought it up, though. I am impressed. Anne-Marie: how does that fit into to what we are discussing? I press further. I’m curious to see how he thinks this is related to my question about testing. At the time of coaching I didn’t place much significance on this sentence, but in retrospect, it’s quite a mature answer for a tester with two years’ experience. Simon: checking against an oracle....which provides the right answer of a requirement from somebody would that be correct? in your view? Note the change of tone. It’s become more questioning, but there’s also a possible hint of uncertainty. What might that say about the tester? What might that say about me as a coach? I’m not really sure what this means. I have many options here. 
One would be to get him to explain further, e.g. “I don’t understand, can you explain this more?” Or I could again drive to detail. I could also have chosen to go back to the definition of software testing, and then get him to test something.

- 35. Anne-Marie: what is an oracle? I decide to drive to detail: driving to detail is a pressuring technique as well as an investigative technique. Pressuring someone who is uncertain may not be a wise approach; it might have been better for me to ask him to explain more. Simon: source of THE right answer. This answer is incomplete (do you know why?). From a coaching perspective, it’s interesting to note he chose to capitalize “THE”. I wonder why. What can be inferred from that? Does that mean he thinks there is only one definitive answer to a test? Also, what about the word “source”? Why did he choose that? Is an oracle the source of the right answer, or is it more? Look at the word “answer”; I think I would have said ‘information’ instead. ‘Answer’ sounds like you have already evaluated: a conclusion has been made. As you can see, there’s a lot to think about in one small sentence. There’s a risk of the coaching session becoming too abstract. I decide to give him a task to perform. I ask him to test the Escapa website.

- 36. I give him 10 minutes to test and ask him to describe a test. Simon: I tested if touching the walls or get hit by the square blue rocks by moving around the red square, quits the game and yes it does. So I was testing the description of the game. Is he testing the description of the game or the actual game? It’s a potential coaching topic, but I leave it. Anne-Marie: is your test an important test? Simon: yes Anne-Marie: why? Simon: main functionality in my point of view - hit the wall or get hit by the blue squares - quits the game. That’s a fair answer: key functionality is important, and I pursue this avenue no further. I could have looked at what makes a test important, but I want to pursue the oracles topic. This is one of the more challenging aspects of coaching: being able to let go of potential coaching opportunities and remain focused on the goal of the coaching session. Anne-Marie: who says it quits the game - how do you know its not a bug? I’m being a little provocative here, pushing to see how he reacts to the pressure. I’m doing this to help Simon go beyond the boundaries of his knowledge. I want him to recognize how his thinking can go deeper by logically reasoning through this example. Simon: you are right, could be a bug... He could be showing uncertainty here, but it could also be skepticism; maybe he’s just being polite, or maybe he’s confused and is buying time. Anne-Marie: what do you think? Is it a bug? I’m adding quite a bit of pressure here, but I want to see how he reacts.

- 37. Simon: I don't know - missing specification ;) It’s good to see he has a sense of humour! And I like his honesty here. He could have responded in many ways. For instance, he could have said “Of course it’s a bug” or “Of course it’s not a bug”, in which case I would have asked him why. I pursue this a little further. Simon concedes it’s a bug and I ask him why. He finds he’s unable to answer this question. Anne-Marie: what we are looking at here is an example of an unidentified oracle. I’ve gone into ‘lecture mode’ here to try to shed some light on the confusion. Simon: ahhh. I have to confess, getting the ‘ahh’ in a coaching session is a real thrill for me, and it’s a possible indicator that the student has gained some insight. Anne-Marie: you think it’s a bug, but you're unable to explain why you think its a bug because you don't know the oracle you are using. This is quite common. Often we recognize bugs but find it hard to say why we think they are bugs. Knowing the oracle you are using helps give a bug report credibility. Simon: yes Anne-Marie: you mentioned that an oracle was a source of the right answer. It is the source but also it’s how you apply that source in your testing. An oracle is a principle or mechanism used to *recognise* a problem. Requirements on their own are just that - they are a source of knowledge. its only when you compare and evaluate your product against the requirements that they become an oracle. You *use* them to recognise a problem

- 38. I offer some insights into oracles and give a definition of an oracle. Often I suggest additional research at this point, pointing to useful blogs and articles on oracles. Being asked to explain definitions of concepts we use in software testing can give a coach a real insight into the knowledge of a tester. But it also helps the student to realize the extent of their knowledge. COACHING SESSIONS LIKE THE ABOVE EXAMPLE HELP A TESTER GO BEYOND A SUPERFICIAL, conceptual knowledge of testing, to being immersed in the topic in a deep and meaningful way. It takes a tester from “I know what an oracle is” to “I understand and know how to apply oracles”. Thinking critically through a topic such as oracles can help a tester appreciate the complexities and nuances at play in software testing. Understanding how our testing can fool us encourages us to be cautious, to be wary of the assumptions in testing and to approach our testing in a scientific way. James Bach will be giving a class in coaching testers at Let’s Test Oz on 15th September 2014, in Sydney. Go to the Let’s Test website for details. Anne-Marie is a software tester, trainer and coach with a reputation for excellence and passion for the craft of software testing. An electronic engineer by trade, software testing chose her when she started conformance testing against European standards. She now consults through Testing Times, where she trains and coaches teams to become powerhouses of testing skill. Anne-Marie currently lectures at the University of Technology, Sydney on software testing. She blogs at Maverick Tester and offers the occasional tweet at @charrett.

- 39. Testing Trapeze accepts no liability for the content of this publication, or for the consequences of any actions taken on the basis of the information provided. No part of this magazine may be reproduced in whole or in part without the express written permission of the editor. Image Credits: Cover, Contents, Editorial, Testing Circus, OZWST, Community, Next Time, Trapeze Artists EDITOR KATRINA CLOKIE DESIGN & LAYOUT ADAM HOWARD REVIEW PANEL AARON HODDER ADAM HOWARD BRIAN OSMAN DAVID GREENLEES OLIVER ERLEWEIN THE TRAPEZE ARTISTS: