388 Titanic Project YC

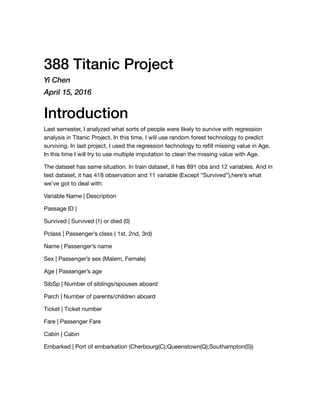

Yi Chen, April 15, 2016

Introduction

Last semester, I used regression analysis in the Titanic Project to study which kinds of passengers were likely to survive. This time, I use a random forest to predict survival. In the last project I filled in the missing Age values with a regression model. This time I use multiple imputation instead.

The data are the same as before. The training set has 891 observations and 12 variables. The test set has 418 observations and 11 variables, which is everything except "Survived". Here is what we have to work with:

| Variable Name | Description |
|---|---|
| PassengerId | Passenger ID |
| Survived | Survived (1) or died (0) |
| Pclass | Passenger's class (1st, 2nd, 3rd) |
| Name | Passenger's name |
| Sex | Passenger's sex (male, female) |
| Age | Passenger's age |
| SibSp | Number of siblings/spouses aboard |
| Parch | Number of parents/children aboard |
| Ticket | Ticket number |
| Fare | Passenger fare |
| Cabin | Cabin |
| Embarked | Port of embarkation (Cherbourg (C), Queenstown (Q), Southampton (S)) |

    # Load packages
    library(car)
    library(MASS)

    # stringsAsFactors = TRUE reproduces the factor summaries shown here
    # (R >= 4.0 defaults to FALSE)
    test  <- read.csv("/Volumes/YI/Loyola 2015 Fall/408/Project/test.csv", stringsAsFactors = TRUE)
    train <- read.csv("/Volumes/YI/Loyola 2015 Fall/408/Project/train.csv", stringsAsFactors = TRUE)

    # Add an empty "Survived" column to the test set so both sets have the same columns
    test$Survived <- NA
    # Combine the train and test sets
    combi <- rbind(train, test)
    summary(combi)

    ##   PassengerId      Survived          Pclass     
    ##  Min.   :   1   Min.   :0.0000   Min.   :1.000  
    ##  1st Qu.: 328   1st Qu.:0.0000   1st Qu.:2.000  
    ##  Median : 655   Median :0.0000   Median :3.000  
    ##  Mean   : 655   Mean   :0.3838   Mean   :2.295  
    ##  3rd Qu.: 982   3rd Qu.:1.0000   3rd Qu.:3.000  
    ##  Max.   :1309   Max.   :1.0000   Max.   :3.000  
    ##                 NA's   :418                     
    ##                                Name          Sex           Age       
    ##  Connolly, Miss. Kate            :   2   female:466   Min.   : 0.17  
    ##  Kelly, Mr. James                :   2   male  :843   1st Qu.:21.00  
    ##  Abbing, Mr. Anthony             :   1                Median :28.00  
    ##  Abbott, Mr. Rossmore Edward     :   1                Mean   :29.88  
    ##  Abbott, Mrs. Stanton (Rosa Hunt):   1                3rd Qu.:39.00  
    ##  Abelson, Mr. Samuel             :   1                Max.   :80.00  
    ##  (Other)                         :1301                NA's   :263    
    ##      SibSp            Parch            Ticket          Fare        
    ##  Min.   :0.0000   Min.   :0.000   CA. 2343:  11   Min.   :  0.000  
    ##  1st Qu.:0.0000   1st Qu.:0.000   1601    :   8   1st Qu.:  7.896  
    ##  Median :0.0000   Median :0.000   CA 2144 :   8   Median : 14.454  
    ##  Mean   :0.4989   Mean   :0.385   3101295 :   7   Mean   : 33.295  
    ##  3rd Qu.:1.0000   3rd Qu.:0.000   347077  :   7   3rd Qu.: 31.275  
    ##  Max.   :8.0000   Max.   :9.000   347082  :   7   Max.   :512.329  
    ##                                   (Other) :1261   NA's   :1        
    ##              Cabin      Embarked
    ##                 :1014    :  2   
    ##  C23 C25 C27    :   6   C:270   
    ##  B57 B59 B63 B66:   5   Q:123   
    ##  G6             :   5   S:914   
    ##  B96 B98        :   4           
    ##  C22 C26        :   4           
    ##  (Other)        : 271           
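summary() reports NA counts for the numeric columns, but the missing Cabin and Embarked entries are empty strings and only show up as an unnamed level. A minimal base-R sketch that counts both kinds of gap per column; the data frame `toy` and the helper `count_missing()` are hypothetical and made up for illustration:

```r
# Hypothetical toy data (not the Titanic files): NA in a numeric column,
# empty strings in text columns, mirroring how the blanks load from CSV.
toy <- data.frame(
  Age      = c(22, NA, 26, NA),
  Cabin    = c("", "C85", "", ""),
  Embarked = c("S", "C", "", "S"),
  stringsAsFactors = FALSE
)

# Count entries that are either NA or an empty string, column by column.
# `%in%` never matches NA, so the two conditions do not double count.
count_missing <- function(df) {
  sapply(df, function(col) sum(is.na(col) | col %in% ""))
}

count_missing(toy)  # Age = 2, Cabin = 3, Embarked = 1
```

Called as `count_missing(combi)`, it should agree with the NA counts and the blank-level counts in the summary above.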

I added a "Survived" column to the test set and combined the two data sets. The summary() output shows which variables have missing values: Age is missing for 263 passengers, Fare for one, and Embarked (port of embarkation) for two, which appear as blank entries. I leave out Cabin because about 1014 of its values are blank. I also do not expect PassengerId or Ticket to affect survival, so PassengerId, Ticket and Cabin are not used in my prediction.

Part one: Analysis of current variables in the training set

In this part I use mosaicplot() to look at how Pclass, Sex, Age, Fare, SibSp, Parch and Embarked relate to a passenger's odds of survival.

    # Overview of several variables against survival
    library(grid)
    library(vcd)
    train2 <- train
    # Pclass and Survived (0 = perished, 1 = survived)
    mosaicplot(train2$Pclass ~ train2$Survived, main = "Passenger Fate by Traveling Class",
               shade = FALSE, color = TRUE, xlab = "Pclass", ylab = "Survived")
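A mosaic plot shows, for each group, the share of passengers who survived as the height of its tiles. The same proportions can be printed as a table. A small base-R sketch with a hypothetical helper `survival_rate_by()` and made-up toy vectors:

```r
# Row-wise survival proportions for one grouping variable:
# each row sums to 1 and gives the died/survived split within that group.
survival_rate_by <- function(group, survived) {
  tab <- table(group, survived)
  round(prop.table(tab, margin = 1), 3)
}

# Hypothetical toy data, for illustration only.
pclass   <- c(1, 1, 1, 2, 2, 3, 3, 3, 3)
survived <- c(1, 1, 0, 1, 0, 0, 0, 1, 0)

rates <- survival_rate_by(pclass, survived)
rates  # survival share: class 1 = 0.667, class 2 = 0.5, class 3 = 0.25
```

With the real data this would be called as `survival_rate_by(train2$Pclass, train2$Survived)`.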

The mosaic plot shows a survival penalty for third-class passengers and an advantage for first-class passengers.

    # Sex and Survived (0 = perished, 1 = survived)
    mosaicplot(train2$Sex ~ train2$Survived, main = "Passenger Fate by Gender",
               shade = FALSE, color = TRUE, xlab = "Sex", ylab = "Survived")

Not surprisingly, given the order "women and children first", a higher percentage of women survived than men. Sex is therefore a very important variable for predicting survival in the test set.

    par(mfrow = c(1, 2))
    # SibSp
    mosaicplot(train2$SibSp ~ train2$Survived, main = "Passenger Fate by SibSp (Number of Siblings/Spouses)",
               shade = FALSE, color = TRUE, xlab = "SibSp", ylab = "Survived")
    # Parch
    mosaicplot(train2$Parch ~ train2$Survived, main = "Passenger Fate by Parch",
               shade = FALSE, color = TRUE, xlab = "Parch", ylab = "Survived")

These two mosaic plots suggest that family size has a strong influence on survival: passengers in small families were more likely to survive. One possible reason is that a large family could not all fit into the limited seats of a lifeboat. I will deal with this factor later.

    par(mfrow = c(1, 1))
    # Age and Survived
    # Start from NA so passengers with a missing Age are not counted as "60+"
    train2$Age2 <- NA
    train2$Age2[train2$Age >= 60] <- '60+'
    train2$Age2[train2$Age < 60 & train2$Age >= 40] <- '40-59'
    train2$Age2[train2$Age < 40 & train2$Age >= 18] <- '18-39'
    train2$Age2[train2$Age < 18] <- '0-17'
    mosaicplot(train2$Age2 ~ train2$Survived, main = "Passenger Fate by Age",
               shade = FALSE, color = TRUE, xlab = "Age", ylab = "Survived")
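The age bands can also be built in one call with base R's cut(), which leaves missing ages as NA instead of assigning them to a band. A sketch with made-up ages; `right = FALSE` makes each interval closed on the left, so an 18-year-old lands in "18-39":

```r
# Made-up ages for illustration, including a fractional age and a missing one.
ages <- c(4, 17.5, 18, 39, 40, 59.5, 60, 71, NA)

# Intervals are [0, 18), [18, 40), [40, 60), [60, Inf):
# 17.5 falls in "0-17", 18 in "18-39", and NA stays NA.
age_band <- cut(ages,
                breaks = c(0, 18, 40, 60, Inf),
                right  = FALSE,
                labels = c("0-17", "18-39", "40-59", "60+"))

table(age_band, useNA = "ifany")
```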

As with Sex, the mosaic plot shows a clear pattern: there is a survival penalty for passengers aged 60+ and a real advantage for passengers under 18, that is, children. So whether a passenger is a child or an adult is an important factor. Passengers aged 40 to 59 had the second-highest survival rate. One possible reason is that many people in this age range were travelling with children, and when the children got a place in a lifeboat, their parents could follow them. I will consider adding a new variable, "Mother".

    # Fare
    # In this data set the fare is the total ticket cost for a family,
    # so I look at the price per person instead.
    train2$Familysize <- train2$SibSp + train2$Parch + 1
    train2$Fareper <- round(train2$Fare / train2$Familysize, 4)
    train2$Fare2 <- '30+'
    train2$Fare2[train2$Fareper < 30 & train2$Fareper >= 20] <- '20-30'
    train2$Fare2[train2$Fareper < 20 & train2$Fareper >= 10] <- '10-20'
    train2$Fare2[train2$Fareper < 10] <- '<10'
    mosaicplot(train2$Fare2 ~ train2$Survived, main = "Passenger Fate by Fare (fare per person)",
               shade = FALSE, color = TRUE, xlab = "Fare per person", ylab = "Survived")

In the training set, the fare is the total paid for each family, so I look at the relationship between the fare per person and survival. The new variable Fare2 has four levels: <10, 10-20, 20-30 and 30+. The mosaic plot shows a survival penalty for passengers with cheaper tickets and an advantage for passengers with the most expensive (30+) tickets.
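A sketch of the per-person fare bands with cut(), under the text's assumption that Fare is the total paid by the travelling family of SibSp + Parch + 1 people. The fares and family counts below are made-up toy values:

```r
# Hypothetical toy rows (made-up values, not real passengers).
fare  <- c(7.50, 70.00, 54.00, 8.00, 150.00)
sibsp <- c(1, 1, 1, 0, 1)
parch <- c(0, 0, 0, 0, 2)

family_size <- sibsp + parch + 1                 # passenger plus relatives
fare_per    <- round(fare / family_size, 4)      # assumed per-person price
fare_band   <- cut(fare_per,
                   breaks = c(0, 10, 20, 30, Inf),
                   right  = FALSE,               # [0,10), [10,20), [20,30), [30,Inf)
                   labels = c("<10", "10-20", "20-30", "30+"))

data.frame(fare, family_size, fare_per, fare_band)
```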

    # Embarked
    mosaicplot(train2$Embarked ~ train2$Survived, main = "Passenger Fate by Port of Embarkation",
               shade = FALSE, color = TRUE, xlab = "Embarked", ylab = "Survived")

The figure shows that most passengers boarded the Titanic at Southampton (914 of the 1,309 passengers in the combined data, about 70%). Compare the height of the light gray tile for passengers who boarded at Cherbourg ("C") and survived with the shorter light gray tiles for Queenstown ("Q") and Southampton ("S"): a larger share of Cherbourg passengers survived, so Embarked should be useful for prediction.

Part two: Feature Engineering

In part 1, I used mosaic plots to look at the relationship between each variable and survival. Sex, SibSp, Parch, Pclass and Age appear to be the more important factors. In part 2, I create some new variables based on that analysis, and I also break the passenger name (Name) down into additional meaningful variables.

Title and surname

    combi$Name <- as.character(combi$Name)
    # Get the title from the passenger name ("Surname, Title. Given names")
    combi$Title <- gsub('(.*, )|(\\..*)', '', combi$Name)
    # Titles with very low cell counts are combined into a "Rare Title" level
    rare_title <- c('Capt', 'Col', 'Don', 'Dr', 'Major', 'Rev', 'Sir')
    # Reassign Mlle, Ms and Mme
    combi$Title[combi$Title == 'Mlle'] <- 'Miss'
    combi$Title[combi$Title == 'Ms'] <- 'Miss'
    combi$Title[combi$Title == 'Mme'] <- 'Miss'
    combi$Title[combi$Title %in% c('Dona', 'Lady', 'the Countess', 'Jonkheer')] <- 'Mrs'
    combi$Title[combi$Title %in% rare_title] <- 'Rare Title'
    combi$Title <- factor(combi$Title)
    # Show title counts by sex
    table(combi$Sex, combi$Title)
    ## 
    ##          Master Miss  Mr Mrs Rare Title
    ##   female      0  265   0 200          1
    ##   male       61    0 757   1         24
    # Get the surname from the passenger name
    combi$Surname <- sapply(combi$Name, FUN = function(x) {strsplit(x, split = '[,.]')[[1]][1]})

Families travelling together (family size)

Now that the passenger name has been split into new variables, I can build some family variables.

    # Family size, including the passenger themselves
    combi$FSize <- combi$SibSp + combi$Parch + 1
    # Family identifier: surname plus family size
    combi$Family <- paste(combi$Surname, as.character(combi$FSize), sep = "_")
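In the title pattern, the dot must be escaped (written `\\.` inside an R string) so that it matches a literal period. An unescaped `.` matches any character, and the pattern then erases the whole name. A quick check on three names that appear in this data set:

```r
name_strings <- c("Kelly, Mr. James",
                  "Icard, Miss. Amelie",
                  "Stone, Mrs. George Nelson (Martha Evelyn)")

# Escaped dot: drop "Surname, " and everything from the first literal "." on.
titles <- gsub("(.*, )|(\\..*)", "", name_strings)

# Unescaped dot: "(..*)" matches any run of characters, so nothing is left.
broken <- gsub("(.*, )|(..*)", "", name_strings)

# Surname: the text before the first comma or period.
surnames <- sapply(name_strings,
                   function(x) strsplit(x, split = "[,.]")[[1]][1],
                   USE.NAMES = FALSE)

titles    # "Mr"    "Miss"  "Mrs"
broken    # ""      ""      ""
surnames  # "Kelly" "Icard" "Stone"
```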

As I suggested in part 1, family size should help with prediction. Here I use ggplot() to visualize the relationship between family size and survival on the training rows of the combined data (combi[1:891, ]).

    library(ggplot2) # visualization
    # Family size vs. survival on the training rows
    ggplot(combi[1:891, ], aes(x = FSize, fill = factor(Survived))) +
      geom_bar(position = 'dodge') +
      scale_x_continuous(breaks = c(1:11)) +
      labs(x = "Family Size")
    ## stat_bin: binwidth defaulted to range/30. Use 'binwidth = x' to adjust this.

There is a survival penalty for singletons and for families larger than 4. For now, I collapse this variable into three levels (singleton, small, large), which should help with prediction.
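The three-level collapse described above (1, 2 to 4, 5 or more) can also be written with cut(), whose default right-closed intervals (0,1], (1,4] and (4,Inf] give exactly those groups. A sketch with made-up family sizes:

```r
# Made-up family sizes for illustration.
fsize <- c(1, 2, 4, 5, 11)

# Default right = TRUE: (0,1] -> singleton, (1,4] -> small, (4,Inf] -> large
fslevel <- cut(fsize,
               breaks = c(0, 1, 4, Inf),
               labels = c("singleton", "small", "large"))

data.frame(fsize, fslevel)
```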

    # Discretized family size
    combi$FSlevel[combi$FSize == 1] <- 'singleton'               # 1
    combi$FSlevel[combi$FSize < 5 & combi$FSize > 1] <- 'small'  # 2-4
    combi$FSlevel[combi$FSize > 4] <- 'large'                    # 5+
    # Family size by survival as a mosaic plot
    mosaicplot(table(combi$FSlevel, combi$Survived), main = 'Family Size by Survival', shade = TRUE)

The plot clearly shows a survival penalty for large families and an advantage for passengers in small families. Again, given the order "women and children first", whether a passenger is a mother or a child should matter a lot for survival, so I will add these two variables to the data set after cleaning the missing data.

Part 3. Data Cleaning

As noted in part 1, there is a survival penalty for passengers aged 60+ and a real advantage for passengers under 18. Age is therefore an important factor, but many of its values are missing. Last semester I used regression to fill in the missing ages; this time I use the mice package to impute them. Let's clean the missing values!

Cleaning missing "Age" values

    # Load packages
    library(dplyr)   # data manipulation
    library(Rcpp)    # required by mice
    library(lattice) # required by mice
    library(mice)    # imputation
    library(VIM)     # matrixplot(), to check the MAR assumption

Before using multiple imputation for Age, I check the assumption that Age is missing at random (MAR), that is, that whether Age is missing depends only on other observed variables.

    s <- data.frame(combi$Pclass, combi$Age, combi$SibSp, combi$Parch, combi$Fare)
    names(s) <- c("Pclass", "Age", "SibSp", "Parch", "Fare")
    par(mfrow = c(2, 2))
    matrixplot(s, sortby = "Pclass")
    matrixplot(s, sortby = "SibSp")

    matrixplot(s, sortby = "Parch")
    matrixplot(s, sortby = "Fare")
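The matrix plots give a visual check. A simple numeric companion is the share of missing Age within each Pclass: if it differs clearly between classes, missingness depends on an observed variable, which is consistent with MAR (though it cannot rule out dependence on the unobserved ages themselves). A sketch on a hypothetical toy data frame:

```r
# Hypothetical toy data, illustration only.
toy <- data.frame(
  Pclass = c(1, 1, 1, 1, 2, 2, 2, 3, 3, 3, 3, 3),
  Age    = c(38, 35, NA, 54, 27, 14, NA, NA, NA, 2, NA, 22)
)

# Proportion of missing Age in each passenger class.
miss_rate <- tapply(is.na(toy$Age), toy$Pclass, mean)
round(miss_rate, 3)  # class 1 = 0.25, class 2 = 0.333, class 3 = 0.6
```

On the real data the same line would read `tapply(is.na(combi$Age), combi$Pclass, mean)`.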

I want to use multiple imputation to fill in the missing Age values. The four plots show how missingness in Age relates to Pclass, SibSp, Parch and Fare. Overall, the missing Age values appear to be related to Pclass. Missingness that depends on an observed variable such as Pclass is consistent with MAR, although these plots cannot rule out that it also depends on the unobserved ages themselves.

    sum(is.na(combi$Age))
    ## [1] 263
    set.seed(129)
    factor_vars <- c('PassengerId', 'Pclass', 'Sex', 'Embarked', 'Title', 'Surname', 'Family', 'FSlevel')
    combi[factor_vars] <- lapply(combi[factor_vars], function(x) as.factor(x))
    # Impute with mice (random forest method), excluding less useful variables
    mice_mod <- mice(combi[, !names(combi) %in% c('PassengerId', 'Name', 'Ticket', 'Cabin',
                                                   'Family', 'Surname', 'Survived')],
                     method = 'rf')

    ## 
    ##  iter imp variable
    ##   1   1  Age  Fare
    ##   1   2  Age  Fare
    ##   1   3  Age  Fare
    ##   1   4  Age  Fare
    ##   1   5  Age  Fare
    ##   2   1  Age  Fare
    ##   2   2  Age  Fare
    ##   2   3  Age  Fare
    ##   2   4  Age  Fare
    ##   2   5  Age  Fare
    ##   3   1  Age  Fare
    ##   3   2  Age  Fare
    ##   3   3  Age  Fare
    ##   3   4  Age  Fare
    ##   3   5  Age  Fare
    ##   4   1  Age  Fare
    ##   4   2  Age  Fare
    ##   4   3  Age  Fare
    ##   4   4  Age  Fare
    ##   4   5  Age  Fare
    ##   5   1  Age  Fare
    ##   5   2  Age  Fare
    ##   5   3  Age  Fare
    ##   5   4  Age  Fare
    ##   5   5  Age  Fare
    # Save the complete output
    mice_output <- complete(mice_mod)

To make sure the imputed ages have the same distribution as the original data, I compare the histograms of the two.

    par(mfrow = c(1, 2))
    hist(combi$Age, freq = FALSE, main = 'Age: Original Data', ylim = c(0, 0.04), col = "lightpink")
    hist(mice_output$Age, freq = FALSE, main = 'Age: MICE Output', ylim = c(0, 0.04), col = "tan1")
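Besides comparing the histograms by eye, the two Age distributions can be compared at a few quantiles. A base-R sketch; the helper `compare_quantiles()` and the two short vectors are hypothetical:

```r
# Side-by-side quantiles of the original (with NAs) and imputed vectors.
compare_quantiles <- function(original, imputed,
                              probs = c(0.10, 0.25, 0.50, 0.75, 0.90)) {
  rbind(original = quantile(original, probs, na.rm = TRUE),
        imputed  = quantile(imputed, probs))
}

# Made-up values: two gaps in `original` are filled in `imputed`.
original <- c(2, 20, NA, 30, NA, 40)
imputed  <- c(2, 20, 25, 30, 35, 40)

cmp <- round(compare_quantiles(original, imputed), 2)
cmp  # medians: original 25, imputed 27.5
```

Applied before Age is overwritten, the call would be `compare_quantiles(combi$Age, mice_output$Age)`.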

    # Replace Age with the values from the mice model
    combi$Age <- mice_output$Age
    sum(is.na(combi$Age))
    ## [1] 0

The two histograms match each other closely. Good: we now have complete Age values.

Is the passenger a child or an adult, and a mother or not?

Now consider other factors. Given the order "women and children first", whether a passenger is a mother or a child should matter a lot for survival. In part 1, the training-set analysis showed a survival penalty for passengers aged 60+ and a real advantage for passengers under 18, and a higher survival rate for women than for men. So adding these two variables to the data set should help with prediction.
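Each of the two indicators can be written as a single vectorized ifelse() call, using the same rules as the code that follows (a child is under 18; a mother is a female over 18 with Parch > 0 whose title is not "Miss"). A sketch on a made-up toy data frame:

```r
# Hypothetical toy passengers, illustration only.
toy <- data.frame(
  Age   = c(5, 30, 45, 19, 17),
  Sex   = c("male", "female", "female", "female", "female"),
  Parch = c(2, 1, 0, 1, 1),
  Title = c("Master", "Mrs", "Mrs", "Miss", "Miss"),
  stringsAsFactors = FALSE
)

# Child: under 18, otherwise Adult
toy$Child <- factor(ifelse(toy$Age < 18, "Child", "Adult"))

# Mother: female, travelling with parents/children, over 18, title not "Miss"
toy$Mother <- factor(ifelse(toy$Sex == "female" & toy$Parch > 0 &
                              toy$Age > 18 & toy$Title != "Miss",
                            "Mother", "Not Mom"))
toy
```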

    # Create the column Child: child (under 18) or adult
    combi$Child[combi$Age < 18] <- 'Child'
    combi$Child[combi$Age >= 18] <- 'Adult'
    # Create the column Mother: mother or not
    combi$Mother <- 'Not Mom'
    combi$Mother[combi$Sex == 'female' & combi$Parch > 0 & combi$Age > 18 & combi$Title != 'Miss'] <- 'Mother'
    # Mosaic plots for the two new variables
    par(mfrow = c(1, 2))
    mosaicplot(combi$Child ~ combi$Survived, main = "Child or Adult",
               shade = FALSE, color = TRUE, xlab = "Child", ylab = "Survived")
    mosaicplot(combi$Mother ~ combi$Survived, main = "Mother or Not",
               shade = FALSE, color = TRUE, xlab = "Mother", ylab = "Survived")
    # Finish by converting the new variables to factors
    combi$Child <- factor(combi$Child)
    combi$Mother <- factor(combi$Mother)
    combi$FSlevel <- factor(combi$FSlevel)

These two plots show a clear survival advantage for children, and a higher survival rate for mothers than for other passengers.

Cleaning missing "Embarked" and "Fare" values

I use a regression tree (rpart) to fill in the missing Fare value, and I replace the two missing Embarked values with "C".

    # Embarked
    summary(combi$Embarked)
    ##       C   Q   S 
    ##   2 270 123 914 
    which(combi$Embarked == '')  # check which two are blank
    ## [1]  62 830
    combi[c(62, 830), ]
    ##     PassengerId Survived Pclass                                      Name
    ## 62           62        1      1                       Icard, Miss. Amelie
    ## 830         830        1      1 Stone, Mrs. George Nelson (Martha Evelyn)
    ##        Sex Age SibSp Parch Ticket Fare Cabin Embarked Title Surname FSize
    ## 62  female  38     0     0 113572   80   B28           Miss   Icard     1
    ## 830 female  62     0     0 113572   80   B28            Mrs   Stone     1
    ##      Family   FSlevel Child  Mother
    ## 62  Icard_1 singleton Adult Not Mom
    ## 830 Stone_1 singleton Adult Not Mom
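Since both passengers paid $80 for a first-class ticket, the choice of port can also be made numerically: take the median first-class fare at each port and pick the port whose median is closest to $80. This is a numeric variant of the boxplot comparison in this section, not the author's exact method; the toy data frame and its fares are made up:

```r
# Hypothetical toy passengers (made-up fares, NOT the real data).
toy <- data.frame(
  Pclass   = c(1, 1, 1, 1, 1, 1, 1, 1, 1, 3),
  Embarked = c("C", "C", "C", "S", "S", "S", "Q", "Q", "Q", "C"),
  Fare     = c(76, 79, 90, 52, 55, 60, 88, 90, 95, 8)
)

target_fare <- 80  # fare paid by both passengers with a blank Embarked

# Median first-class fare per port, ignoring blank ports.
first <- toy[toy$Pclass == 1 & toy$Embarked != "", ]
port_median <- tapply(first$Fare, first$Embarked, median)

# Port whose first-class median is closest to the target fare.
closest_port <- names(port_median)[which.min(abs(port_median - target_fare))]
port_median   # C = 79, Q = 90, S = 55
closest_port  # "C"
```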

    ggplot(combi, aes(x = Embarked, y = Fare, fill = factor(Pclass))) +
      geom_boxplot() +
      geom_hline(aes(yintercept = 80), colour = 'red', linetype = 'dashed', lwd = 2)
    # Replace the two missing values with "C" (both tickets cost $80)
    combi$Embarked[c(62, 830)] <- "C"
    combi$Embarked <- factor(combi$Embarked)

Passengers 62 and 830 both paid $80 for the same first-class ticket (113572). I plotted Fare against Embarked, filled by Pclass. In the plot, the dashed $80 line lies close to the median first-class fare for passengers who embarked at Cherbourg ("C"), so I replaced the two missing values with "C".

    # Fare
    library(rpart)
    summary(combi$Fare)

    ##    Min. 1st Qu.  Median    Mean 3rd Qu.    Max.    NA's 
    ##   0.000   7.896  14.450  33.300  31.280 512.300       1 
    which(is.na(combi$Fare))  # row with the missing Fare
    ## [1] 1044
    Farefit <- rpart(Fare ~ Pclass + Embarked, data = combi)
    combi$Fare[is.na(combi$Fare)] <- predict(Farefit, combi[is.na(combi$Fare), ])
    summary(combi)
    ##   PassengerId      Survived      Pclass      Name               Sex     
    ##  1      :   1   Min.   :0.0000   1:323   Length:1309        female:466  
    ##  2      :   1   1st Qu.:0.0000   2:277   Class :character   male  :843  
    ##  3      :   1   Median :0.0000   3:709   Mode  :character               
    ##  4      :   1   Mean   :0.3838                                          
    ##  5      :   1   3rd Qu.:1.0000                                          
    ##  6      :   1   Max.   :1.0000                                          
    ##  (Other):1303   NA's   :418                                             
    ##       Age            SibSp            Parch            Ticket    
    ##  Min.   : 0.17   Min.   :0.0000   Min.   :0.000   CA. 2343:  11  
    ##  1st Qu.:21.00   1st Qu.:0.0000   1st Qu.:0.000   1601    :   8  
    ##  Median :28.00   Median :0.0000   Median :0.000   CA 2144 :   8  
    ##  Mean   :29.66   Mean   :0.4989   Mean   :0.385   3101295 :   7  
    ##  3rd Qu.:38.00   3rd Qu.:1.0000   3rd Qu.:0.000   347077  :   7  
    ##  Max.   :80.00   Max.   :8.0000   Max.   :9.000   347082  :   7  
    ##                                                   (Other) :1261  
    ##       Fare                     Cabin      Embarked        Title    
    ##  Min.   :  0.000                  :1014   C:272     Master    : 61  
    ##  1st Qu.:  7.896   C23 C25 C27    :   6   Q:123     Miss      :265  
    ##  Median : 14.454   B57 B59 B63 B66:   5   S:914     Mr        :757  
    ##  Mean   : 33.282   G6             :   5             Mrs       :201  
    ##  3rd Qu.: 31.275   B96 B98        :   4             Rare Title: 25  
    ##  Max.   :512.329   C22 C26        :   4                             
    ##                    (Other)        : 271                             
    ##       Surname         FSize               Family          FSlevel   
    ##  Andersson:  11   Min.   : 1.000   Sage_11    :  11   large    : 82  
    ##  Sage     :  11   1st Qu.: 1.000   Andersson_7:   9   singleton:790  
    ##  Asplund  :   8   Median : 1.000   Goodwin_8  :   8   small    :437  
    ##  Goodwin  :   8   Mean   : 1.884   Asplund_7  :   7                  
    ##  Davies   :   7   3rd Qu.: 2.000   Fortune_6  :   6                  
    ##  Brown    :   6   Max.   :11.000   Panula_6   :   6                  
    ##  (Other)  :1258                    (Other)    :1262                  
    ##    Child          Mother    
    ##  Adult:1125   Mother :  85  
    ##  Child: 184   Not Mom:1224  

All of the variables I will use for prediction are now clean!

PART 4, Prediction

In the last part we cleaned all of the missing data. Now I fit a random forest on the training set, apply the model to the test set, and obtain the predicted survival of each test passenger.

    library(randomForest)
    ## randomForest 4.6-10
    ## Type rfNews() to see new features/changes/bug fixes.

    # Split the data back into a training set and a test set
    train <- combi[1:891, ]
    test <- combi[892:1309, ]
    # Baseline model: original variables only, no new features
    set.seed(754)
    # Note: randomForest's argument is spelled `importance`; `important = T` is not
    # matched and is ignored, so importance() reports only MeanDecreaseGini.
    rf <- randomForest(factor(Survived) ~ Pclass + Sex + Age + SibSp + Parch + Fare + Embarked,
                       ntree = 1000, important = T, data = train)
    par(mfrow = c(1, 1))
    # Show model error
    plot(rf, ylim = c(0, 0.36), main = "Random Forest model")
    legend('topright', colnames(rf$err.rate), col = 1:3, fill = 1:3)

In this first random forest model I did not add any new variables. In the error plot, the black line shows the overall out-of-bag (OOB) error rate, which falls below 20%. The red and green lines show the error rates for "died" and "survived" respectively. This plot does not look good, because the error rate for "survived" increases. Let's try adding the new variables Title, FSlevel, Child and Mother to the random forest model (rf1).
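The three lines in the error plot come from the columns of `rf$err.rate`: the overall OOB error and one error rate per class. A per-class error is the share of passengers of that true class who were misclassified (randomForest also stores the final OOB confusion matrix in `rf$confusion`). A base-R sketch of that arithmetic from a confusion table; the prediction and truth vectors are made-up toy values:

```r
# Hypothetical out-of-bag predictions and true labels (toy values).
truth <- factor(c(0, 0, 0, 0, 0, 0, 1, 1, 1, 1), levels = c(0, 1))
pred  <- factor(c(0, 0, 0, 0, 0, 1, 0, 0, 1, 1), levels = c(0, 1))

conf <- table(truth, pred)            # rows = true class, columns = prediction

overall_error <- mean(pred != truth)  # share of all passengers misclassified
class_error   <- 1 - diag(conf) / rowSums(conf)  # error within each true class

conf
overall_error  # 0.3
class_error    # class 0: 1/6, class 1: 0.5
```

A model can have a low overall error while the error for the smaller class ("survived") stays high, which is the pattern the red and green lines separate out.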

    ## Model 1 (excludes PassengerId, Name, Ticket, Cabin, Surname, Family)
    set.seed(754)
    rf1 <- randomForest(factor(Survived) ~ Pclass + Sex + Age + SibSp + Parch + Fare + Embarked +
                          Title + FSlevel + Child + Mother,
                        ntree = 1000, important = T, data = train)
    par(mfrow = c(1, 1))
    # Show model error
    plot(rf1, ylim = c(0, 0.36), main = "Random Forest model 1")
    legend('topright', colnames(rf1$err.rate), col = 1:3, fill = 1:3)
    # Variable importance
    importance(rf1)

    ##          MeanDecreaseGini
    ## Pclass          30.914312
    ## Sex             53.431991
    ## Age             45.013408
    ## SibSp           12.807030
    ## Parch            8.144535
    ## Fare            58.653045
    ## Embarked         9.147653
    ## Title           74.398566
    ## FSlevel         17.158887
    ## Child            4.146409
    ## Mother           2.151698
    varImpPlot(rf1)

The error plot now looks better than for the rf model: the black line shows an overall error rate below 20%, and the error rate for "survived" goes down. I made my first Kaggle submission with random forest model 1 (rf1) and scored 77.033%. I will keep trying to raise the percentage of correct survival predictions.

In the variable importance plot, Title has the highest relative importance of all the predictors, which surprised me, and Pclass fell to fifth place. The two least important variables are Child and Mother, so I remove them in the next model.

    # Model 2 (excludes PassengerId, Name, Ticket, Cabin, Surname, Family, Child, Mother; adds FSize)
    set.seed(754)
    rf2 <- randomForest(factor(Survived) ~ Pclass + Sex + Age + SibSp + Parch + Fare + Embarked +
                          Title + FSize + FSlevel,
                        ntree = 1000, important = T, data = train)
    par(mfrow = c(1, 1))
    # Show model error
    plot(rf2, ylim = c(0, 0.36), main = "Random Forest model 2")
    legend('topright', colnames(rf2$err.rate), col = 1:3, fill = 1:3)

    # Variable importance
    importance(rf2)
    ##          MeanDecreaseGini
    ## Pclass          30.961023
    ## Sex             51.229685
    ## Age             49.895092
    ## SibSp            9.449376
    ## Parch            6.794575
    ## Fare            60.705717
    ## Embarked         9.467288
    ## Title           78.556382
    ## FSize           17.155367
    ## FSlevel         12.175905
    varImpPlot(rf2)

The new random forest model (rf2) uses the same number of trees (ntree = 1000), but I removed Child and Mother and added FSize. We can see that the model is now much more successful at predicting death than survival. I will resubmit the survival predictions using random forest model 2. In the variable importance plot, the top four variables are Title, Fare, Sex and Age, and Title still has the highest relative importance of all the predictors.
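According to the randomForest documentation, MeanDecreaseGini is the total decrease in node impurity from splits on a variable, averaged over all trees. A sketch of the impurity decrease for one split, using the standard size-weighted Gini formula $G = 1 - \sum_k p_k^2$; the helpers `gini()` and `gini_decrease()` and the toy outcome vector are hypothetical:

```r
# Gini impurity of a vector of class labels: 1 - sum of squared class shares.
gini <- function(y) {
  p <- table(y) / length(y)
  1 - sum(p^2)
}

# Parent impurity minus the size-weighted impurities of the two children.
gini_decrease <- function(y, left) {
  n <- length(y)
  gini(y) -
    (sum(left)  / n) * gini(y[left]) -
    (sum(!left) / n) * gini(y[!left])
}

# Toy outcome (1 = survived) and a split that sends the first four rows left.
y    <- c(1, 1, 1, 0, 0, 0, 0, 0)
left <- c(TRUE, TRUE, TRUE, TRUE, FALSE, FALSE, FALSE, FALSE)

gini(y)                 # 0.46875
gini_decrease(y, left)  # 0.28125
```

Impurity-based importance is known to favour continuous predictors with many possible split points, such as Fare and Age, so their high ranks are worth reading with some caution.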

    # Use random forest model 2
    prediction <- predict(rf2, test)
    # Save the solution to a data frame with two columns: PassengerId and Survived (prediction)
    myrult8 <- data.frame(PassengerID = test$PassengerId, Survived = prediction)
    # Write the solution to a file
    write.csv(myrult8, file = '/Volumes/YI/Loyola 2016 Winter/Stat 388/project/myrult8.csv',
              row.names = FALSE, quote = FALSE)

I also tried reducing the number of trees in rf2, but it did not improve the prediction accuracy. So random forest model 2 (Survived ~ Pclass + Sex + Age + SibSp + Parch + Fare + Embarked + Title + FSize + FSlevel) with ntree = 1000 is my "best" model for the Titanic Project. I used it to predict survival for the test set and submitted the result to Kaggle, scoring 0.78974. This time I used a random forest to predict passenger survival; in the future, I will try other methods to get higher prediction accuracy. Well done!
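Before uploading, it can help to read the written file back and check its shape. A minimal sketch with a hypothetical helper `check_submission()`. It assumes the competition expects the header `PassengerId,Survived` and one row per test passenger (418 here), so compare against the competition's sample submission, noting that the code above names its first column `PassengerID`:

```r
# Read a submission CSV back and check header, row count and 0/1 labels.
# The expected header "PassengerId,Survived" is an assumption.
check_submission <- function(path, n_expected) {
  sub <- read.csv(path)
  stopifnot(identical(names(sub), c("PassengerId", "Survived")),
            nrow(sub) == n_expected,
            all(sub$Survived %in% c(0, 1)))
  invisible(sub)
}

# Hypothetical three-row submission (toy predictions).
toy_sub <- data.frame(PassengerId = c(892, 893, 894),
                      Survived    = factor(c(0, 1, 0), levels = c(0, 1)))
out <- tempfile(fileext = ".csv")
write.csv(toy_sub, file = out, row.names = FALSE, quote = FALSE)

checked <- check_submission(out, n_expected = 3)
```

For the real file, the call would pass the written path with `n_expected = 418`.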